Scream of consciousness, live-blogged development notes. No longer updated.

The devlog is now "news"🔗

I've moved the devlog to a new location - news.

why🔗

The devlog is getting huge, and it's a bit to chaotic. I'll now be live-blogging feature development in specific articles, like I did for Light Tiling, Live.

All other blurbs are news, so they can live on the news page. I'm just trying to stay organized as this project grows.

But - this stuff will stay here for posterity.

Sun 21 September, 2025🔗

I've done a rework of the API, greatly improved the documentation and created a user's manual full of examples.

User's manual🔗

The manual covers the basics, but lacks lighting examples. Obviously it's a work in progress. I'll be making sure that it's complete after getting it online. Even in its incomplete state, it has lots of workable, tested examples in it, and I hope it helps folks get up and running.

Documentation updates🔗

There's a ton more documentation coverage. I did a big audit of the current documentation and added more where needed as well as revamped the existing docs.

The latest docs are now hosted here at /docs, due to the fact that Renderling

depends on a not yet released version of spirv-std, which the Rust-GPU group is still

working on releasing.

API changes🔗

I've removed the crabslab::Id and craballoc::Hybrid* types from the public API.

I figured that it shouldn't be necessary for users to understand anything about slabs and

descriptors.

Now all the various resources (Primitive, Material, Vertices etc) adhere to a builder

pattern for configuration and updates.

Skybox is now separate from Ibl.

Up until this point, if you wanted to render a skybox, that skybox would also perform

image based lighting automatically.

I decided to decouple these now, as there are valid situations where you may not want

IBL, but do want a skybox.

Sun 31 August, 2025🔗

Light tiling is done! ^ The last part of that article was my favorite, as I got to do lots of comparison graphs of light tiling with different parameters.

WASM updates🔗

It's been a long time coming, but I finally wrote some proper WASM test infrastructure, and tests.

Running renderling on web is a very high priority for me, as I want to write games that

work everywhere. Now that there are tests that run in a headless Firefox browser, even if not

yet in CI, the WASM target shouldn't lag behind native.

Sat 2 August, 2025🔗

Sat 26 July, 2025🔗

Light tiling progresses!. This work is getting really close, then I can move on to the project plan for next year.

About that - like I mentioned last update, NLnet is giving Renderling another year of funding (and increasing the budget)! I'm stoked.

Next year's project will include contributions to Rust GPU, the gltf crate and others.

Also on the dockett is global illumination...

And I'll be working with a contributor! You might know them! I'll talk more about that later.

Mon 7 July, 2025🔗

Wow! What a long break.

I've switched jobs and moved house (still in New Zealand).

Also, Renderling got picked up for another year of funding, and got an extension on the last milestone for last year's funding!

I'll write more about all of that later. Thank you!

Sun 18 May, 2025🔗

My partner and I bought a house! Quite a distraction.

Sun 11 May, 2025🔗

Today ends my NLNet grant.

It was really cool to be a part of their program, and it has changed the way I feel about OSS.

If you ever have a project you think may help people, I highly recommend applying to one of their grant programs.

Sat 10 May, 2025🔗

Light tiling - calculating frustums, verifying indices, etc

Sat 26 Apr, 2025🔗

More light tiling work continues.

Thu 10 Apr, 2025🔗

Renderling has been selected for round 2 of 2025 NLNet funding!

This means that we're in the running for funding. I'll be putting together some details together about what the next year of development will look like.

Most likely there will be significant contributions to both Rust-GPU and wgpu.

I would also really like to get some ray marched shadows going, and finish up the occlusion culling.

Stay tuned!

Light tiling updates from March🔗

I've made some progress on the last big milestone of 2024 NLNet funding, light tiling.

You can read about it at the dedicated light tiling article:

/articles/live/light_tiling.html

Sat 22 Mar, 2025🔗

It's my partner Briana's birthday! Happy birthday, babe.

Light tiling🔗

I'm starting on light tiling. I've decided to live blog it in one fell swoop, and link it from here.

/articles/live/light_tiling.html

Sun 9 Mar, 2025🔗

Shadow map wrap up!🔗

I'm putting the finishing touches on that PR at the moment.

It looks really hairy, but it's not as big a change as it looks. GitHub's diff is

exploded by the WGSL files. GitHub's UI doesn't seem to be respecting the .gitattributes

of my project, which specifies that *.wgsl binary. There are a couple tricks to force

GitHub to decide that certain files are generated (which my WGSL files are). But those

tricks (namely to use *.wgsl linguist-generated=true) aren't working for me. I've had

a months long support ticket about it where they have me add and remove the attribute

over and over, hoping for a different result. Oh well.

Back to the shadow mapping...

Here's the result!

The obvious difference being that Blender adds a lot of ambient color to the scene. But the shadows are nearly identical!

I'm happy with the outcome.

Sampling from cubemaps in Rust code

The code for sampling from cubemaps in Rust code turned out to be not that complicated! The idea is that you first find which major axis the sampling vector is most aligned with and then you normalize the vector a bit and make sure the other components are pointing in the correct direction.

The tricky part for my work was realizing that inside the cubemap its matrices are left-handed.

The other tricky stuff was about constructing the cubemaps.

Sun 2 Mar, 2025🔗

Hand rolled cubemap sampling🔗

To support point light shadow maps I'm having to build out support for sampling from cubemaps stored in an atlas.

I've lifted out the stage rendering operation into its own struct so that it can be run from the Stage

as well as from a new struct, SceneCubemap.

The next step is to write the cubemap into the atlas and then compare/assert that sampling from the atlas returns results equal to traditional cubemap sampling in a shader.

The setup for that is a little hairy, as I'll have to write a shader to sample from the cubemap and then read that from a buffer onto the CPU.

One cool side-effect of having the ability to do cubemap sampling from the atlas is that the skybox and IBL lighting can all come from the atlas, making the entire system more "bindless". It also puts the library user in control of how much memory they're using for all textures, which will be great for constrained devices like the raspberry pi.

Onto the cubemap sampling🔗

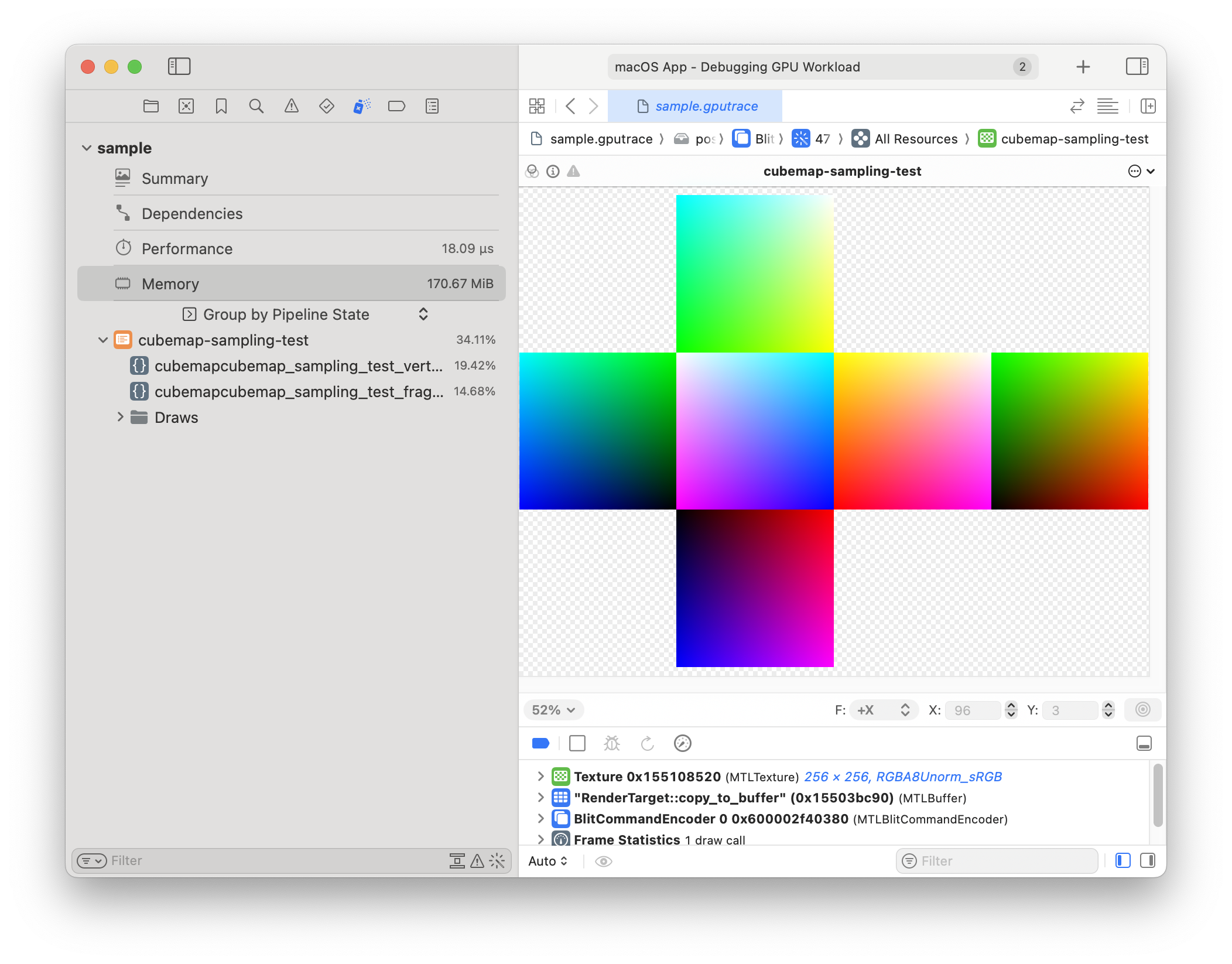

So, without much further ado, here are the shaders that we'll be working with to ensure that our hand-rolled cubemap sampling works in a way that's comparable to the GPU's own sampling:

/// Vertex shader for testing cubemap sampling. #[spirv(vertex)] pub fn cubemap_sampling_test_vertex( #[spirv(vertex_index)] vertex_index: u32, #[spirv(instance_index)] uv_id: Id<Vec3>, #[spirv(storage_buffer, descriptor_set = 0, binding = 0)] slab: &[u32], out_uv: &mut Vec3, #[spirv(position)] out_clip_coords: &mut Vec4, ) { let vertex_index = vertex_index as usize % 6; *out_clip_coords = crate::math::CLIP_SPACE_COORD_QUAD_CCW[vertex_index]; *out_uv = slab.read_unchecked(uv_id); } /// Vertex shader for testing cubemap sampling. #[spirv(fragment)] pub fn cubemap_sampling_test_fragment( #[spirv(descriptor_set = 0, binding = 1)] cubemap: &spirv_std::image::Cubemap, #[spirv(descriptor_set = 0, binding = 2)] sampler: &spirv_std::Sampler, in_uv: Vec3, frag_color: &mut Vec4, ) { *frag_color = cubemap.sample(*sampler, in_uv); }

This will render a quad that samples from one UV coordinate. I'll use this to ensure:

Native GPU cubemap sampling works as I expect it to.

The hand-rolled cubemap sampling works as I expect it to.

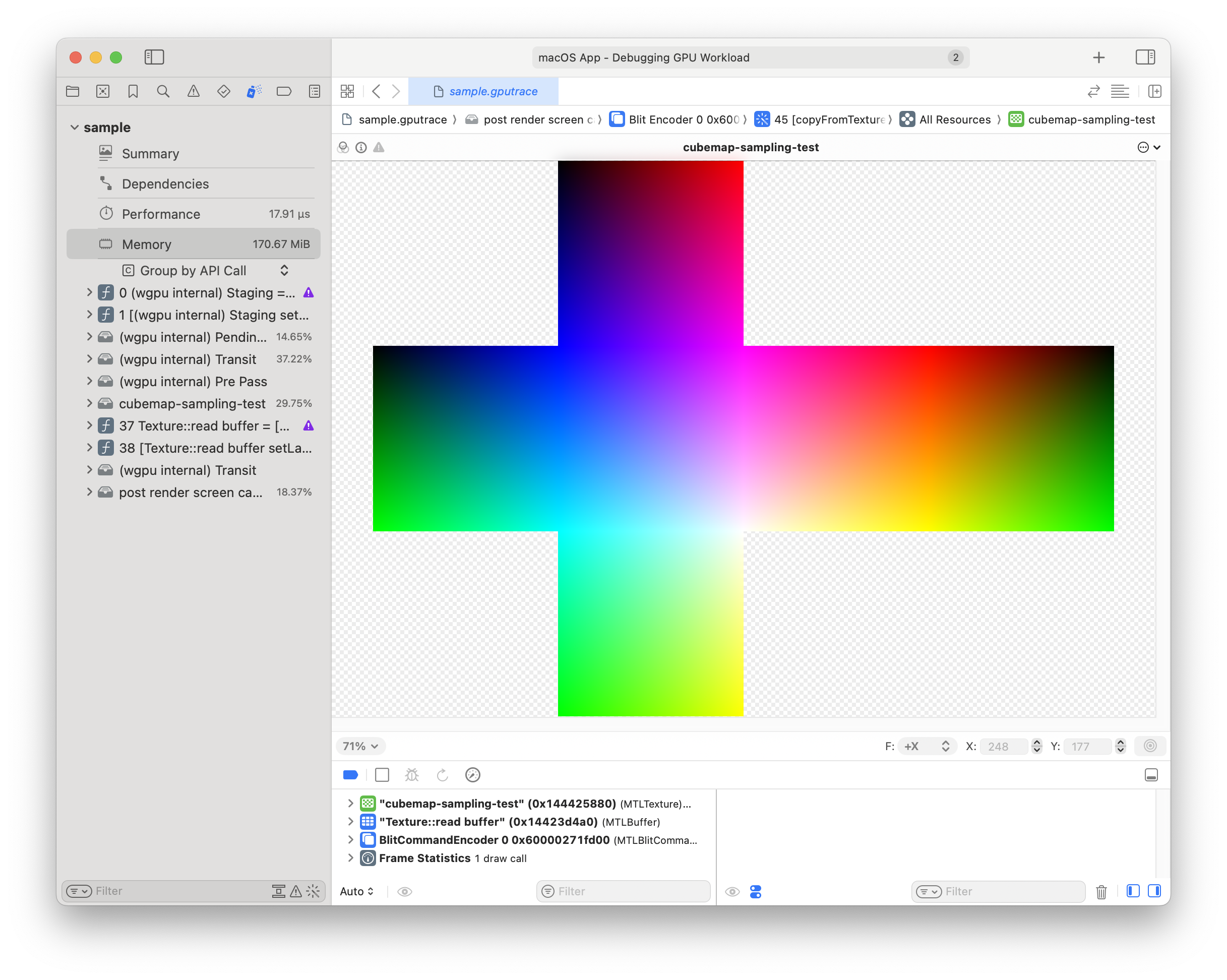

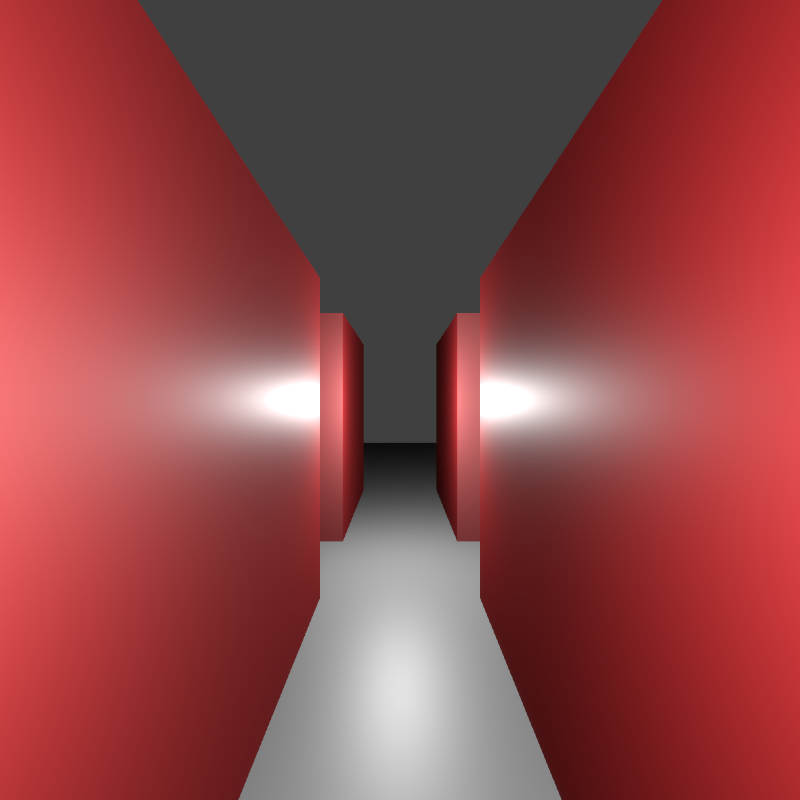

...and here is our cubemap as seen from a GPU trace:

...eh, that doesn't seem right. The sides of the cube should all blend together and look like an unfolded box.

Looks like the internal representation of a cubemap is maybe more... ...specific than I thought.

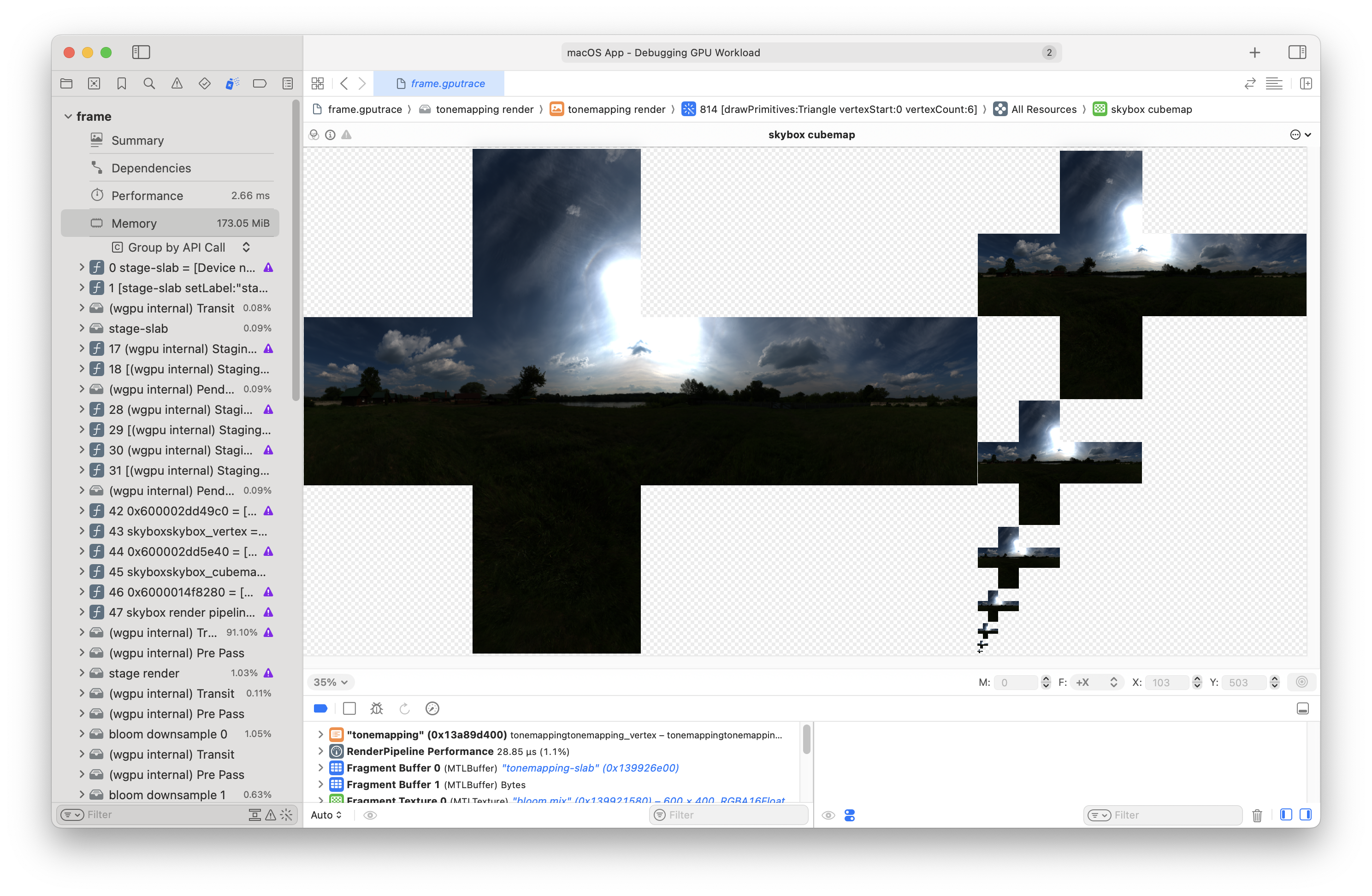

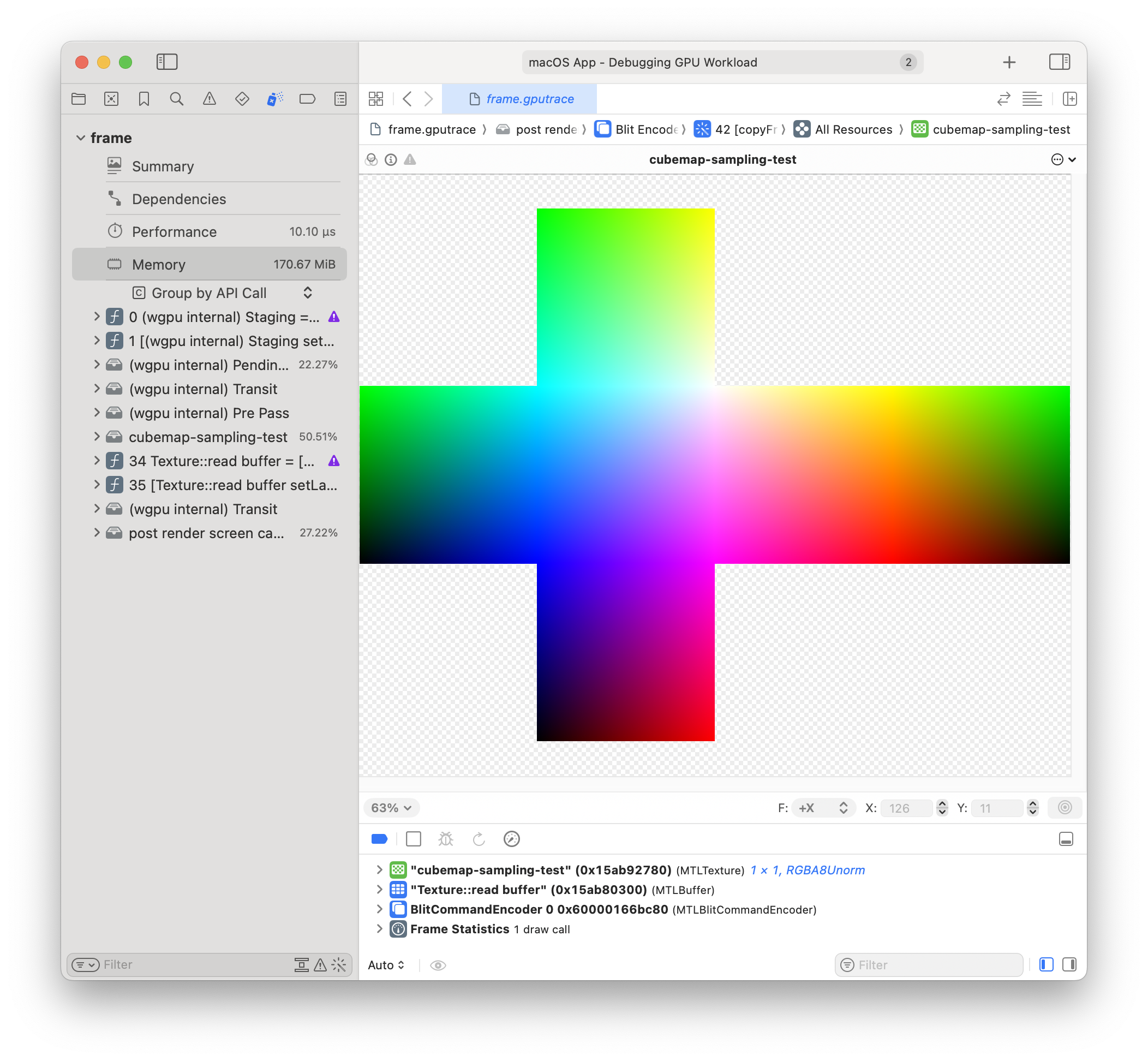

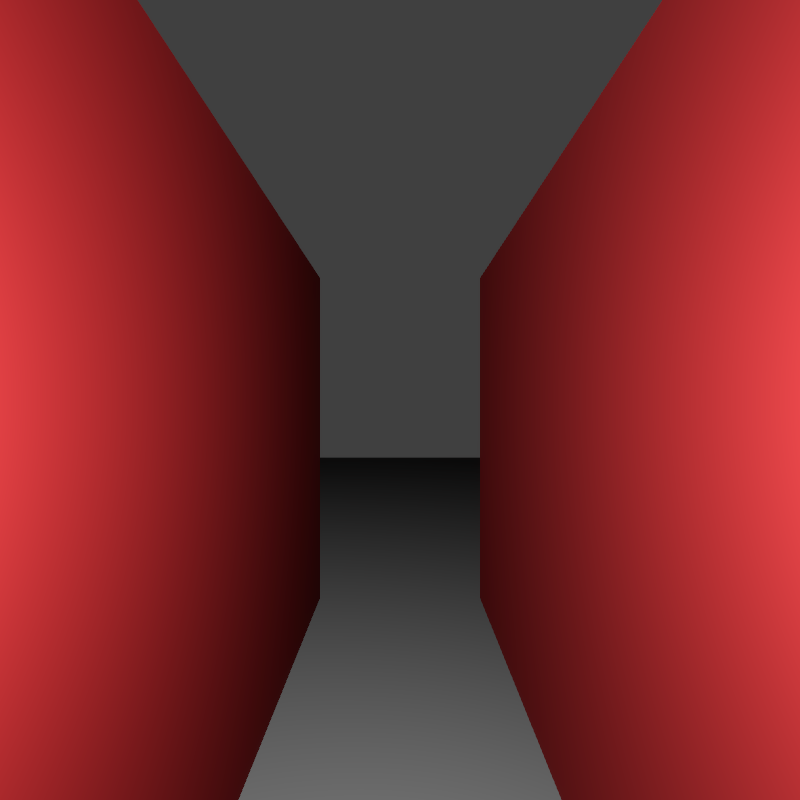

Let's look at the cubemap that I use for a skybox:

Ah, yeah. Here you can see that the sides just flow from one to another. So I think my matrices are out of whack. I bet we have to render upside down, too, to get the textures to flip.

This will probably affect the coordinates used to sample. I don't think I can expect that sampling

with (1.0, -1.0, -1.0) will produce red.

Actually - I'm going to try flipping the color's Y coords just to see, because it seems like the white point should be in the upper right instead of the lower right...

There we go! Now sampling does come out as expected.

Debugging the CPU cubemap sampling algo🔗

The sampling algorithm is going to perform these steps:

Determine the face:

Compare the absolute values of the x, y, and z components of the input

coord.The component with the largest absolute value determines the primary direction.

Select the face and calculate 2D coordinates:

For each possible face (±X, ±Y, ±Z), after determining the face, project the vector onto the plane of that face to get u, v coordinates.

These normalized coordinates are then converted to pixel coordinates for sampling from the image.

Fetch the texel value from the determined face using bilinear interpolation for smooth results.

Return the final color: With proper scaling and orientation adjustments.

With an initial implementation of the sampling algorithm I've printed out the input uv coord, the GPU sample value, the CPU sample value and then also the face index and the 2d uv coords that were determined by the algo:

uv: [1, 0, 0],

gpu: [1, 0.49803922, 0.49803922, 1]

cpu: [0.5019608, 0.5019608, 0, 1]

from: +X(0) [0.5, 0.5]

uv: [-1, 0, 0],

gpu: [0, 0.49803922, 0.5019608, 1]

cpu: [0.49803922, 0.5019608, 1, 1]

from: -X(1) [0.5, 0.5]

uv: [0, 1, 0],

gpu: [0.5019608, 1, 0.5019608, 1]

cpu: [0.49803922, 1, 0.49803922, 1]

from: +Y(3) [0.5, 0.5]

uv: [0, -1, 0],

gpu: [0.5019608, 0, 0.49803922, 1]

cpu: [0.49803922, 0, 0.5019608, 1]

from: -Y(2) [0.5, 0.5]

uv: [0, 0, 1],

gpu: [0.5019608, 0.49803922, 1, 1]

cpu: [0, 0.5019608, 0.49803922, 1]

from: +Z(4) [0.5, 0.5]

uv: [0, 0, -1],

gpu: [0.49803922, 0.49803922, 0, 1]

cpu: [1, 0.5019608, 0.5019608, 1]

from: -Z(5) [0.5, 0.5]

So, it's already kinda correct. You can see that it gets the face index correct for the input coords. It also seems that the computed 2d uv coords are correct... ...that leaves the sampling itself, or the images as copied out of the cubemap.

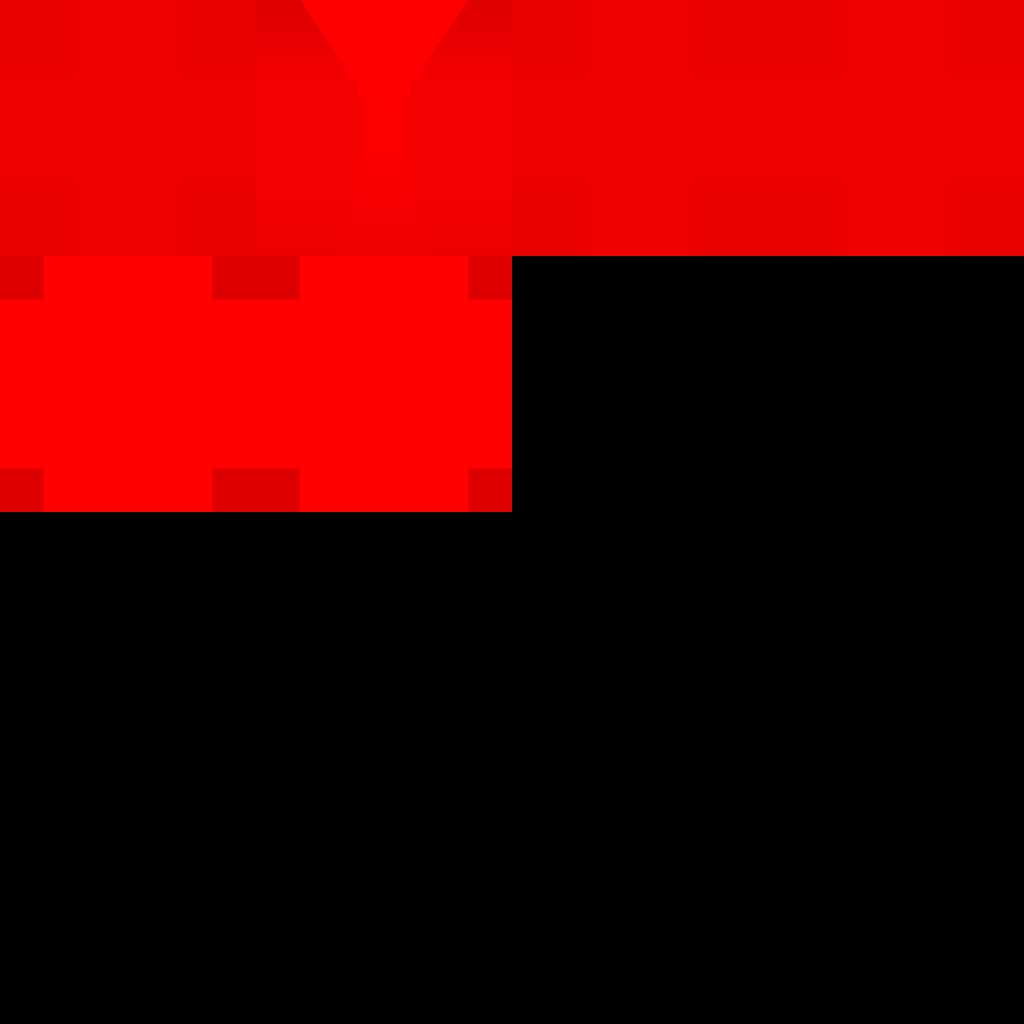

Let's look at the images as read out of our GPU cubemap:

Hrm. Well it looks like they're correct in that they definitely represent the sides of the cubemap. I can tell by comparing them to the cubemap screenshot above.

Let's just take the first case into question:

uv: [1, 0, 0],

gpu: [1, 0.49803922, 0.49803922, 1]

cpu: [0.5019608, 0.5019608, 0, 1]

from: +X(0) [0.5, 0.5]

It's getting the index correct, and the location. If we sample from the +X image at (0.5, 0.5), which is

(127.5, 127.5) in pixels, we should get something like (1.0, 0.5, 0.5, 1.0). So then why is it returning

(0.5019608, 0.5019608, 0, 1)?

...

Oh. 🤦. When constructing the CPU cubemap after reading the images from the GPU, I used this:

let cpu_cubemap = [ images.pop().unwrap(), images.pop().unwrap(), images.pop().unwrap(), images.pop().unwrap(), images.pop().unwrap(), images.pop().unwrap(), ];

...instead of this:

let cpu_cubemap = [ images.remove(0), images.remove(0), images.remove(0), images.remove(0), images.remove(0), images.remove(0), ];

Ok. So after fixing that blunder, things are working out. Now I've set a threshold for an acceptable distance between the GPU and CPU sample values and will start working on interpolation and multisampling.

But also - what happens when you pass in Vec3::ZERO as the uv coords? I bet it interpolates between

corners or something.

...

Ok, maybe I shouldn't work on multisampling just yet. It isn't completely necessary to finish shadow mapping. I can always circle back later.

...

Eh, multisampling wasn't that hard. Now I have all the cardinal directions sampled:

__uv: [1, 0, 0],

_gpu: [1, 0.49803922, 0.49803922, 1]

_cpu: [1, 0.49803922, 0.49803922, 1]

mcpu: [1, 0.49882355, 0.49882355, 1]

from: +X(0) [0.5, 0.5]

__uv: [-1, 0, 0],

_gpu: [0, 0.49803922, 0.5019608, 1]

_cpu: [0, 0.49803922, 0.5019608, 1]

mcpu: [0, 0.49882355, 0.5011765, 1]

from: -X(1) [0.5, 0.5]

__uv: [0, 1, 0],

_gpu: [0.5019608, 1, 0.5019608, 1]

_cpu: [0.5019608, 1, 0.5019608, 1]

mcpu: [0.5011765, 1, 0.5011765, 1]

from: +Y(2) [0.5, 0.5]

__uv: [0, -1, 0],

_gpu: [0.5019608, 0, 0.49803922, 1]

_cpu: [0.5019608, 0, 0.49803922, 1]

mcpu: [0.5011765, 0, 0.49882355, 1]

from: -Y(3) [0.5, 0.5]

__uv: [0, 0, 1],

_gpu: [0.5019608, 0.49803922, 1, 1]

_cpu: [0.5019608, 0.49803922, 1, 1]

mcpu: [0.5011765, 0.49882355, 1, 1]

from: +Z(4) [0.5, 0.5]

__uv: [0, 0, -1],

_gpu: [0.49803922, 0.49803922, 0, 1]

_cpu: [0.49803922, 0.49803922, 0, 1]

mcpu: [0.49882355, 0.49882355, 0, 1]

from: -Z(5) [0.5, 0.5]

It's interesting, though, that the multisample mcpu value seems to be further from the GPU value.

Let's add the corners in...

Huh! Event with the corners, sampling in a cone around the direction vector and averaging comes out worse. No need for it, then!

Oh! And it turns out that if you sample a cubemap with Vec3::ZERO, it just uses Vec3::X instead.

After thinking about it, that makes sense.

So now we have our sampling algorithm written on the CPU!

Now we can adapt it to sampling images off the slab.

Sun 23 Feb, 2025🔗

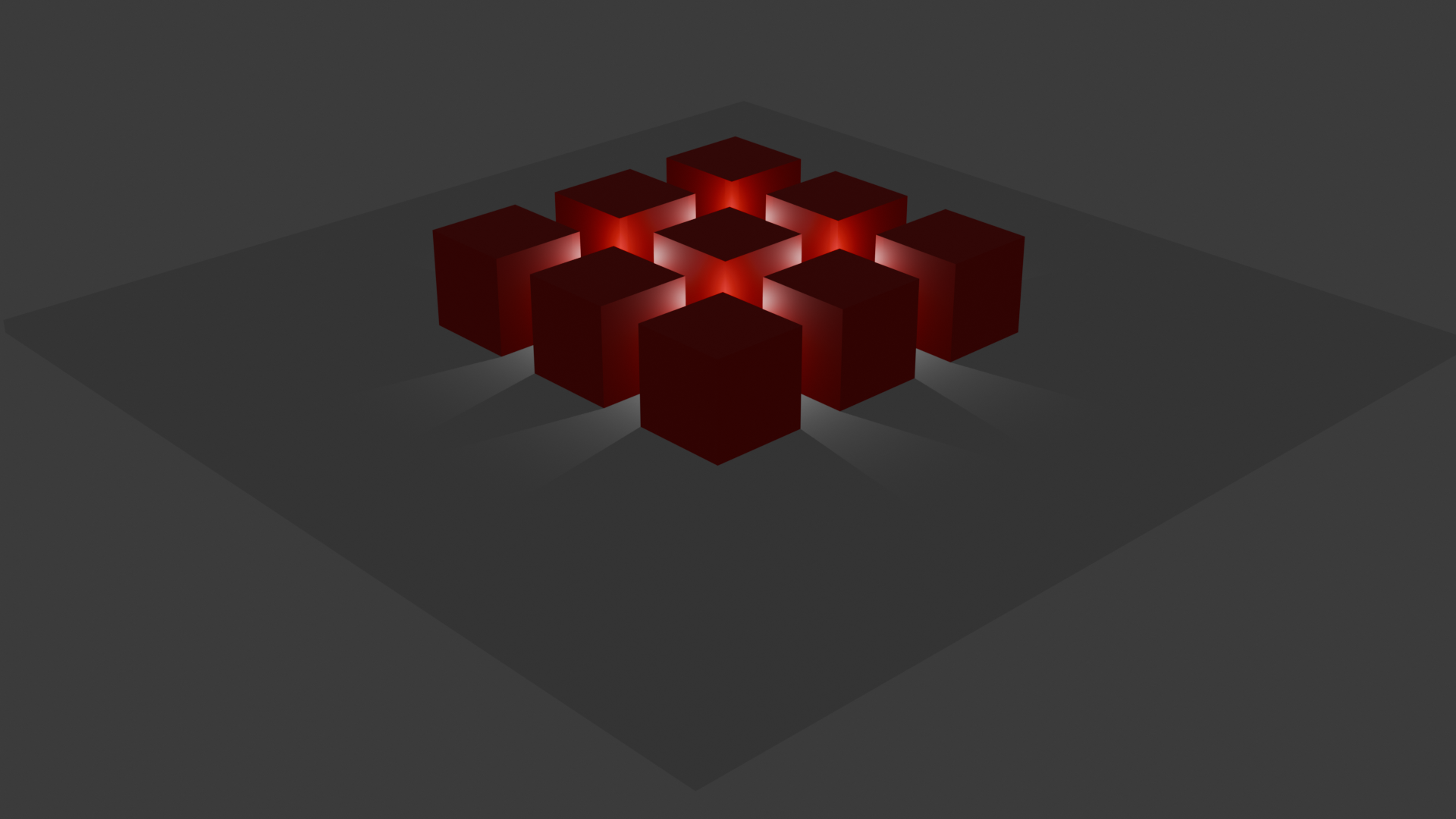

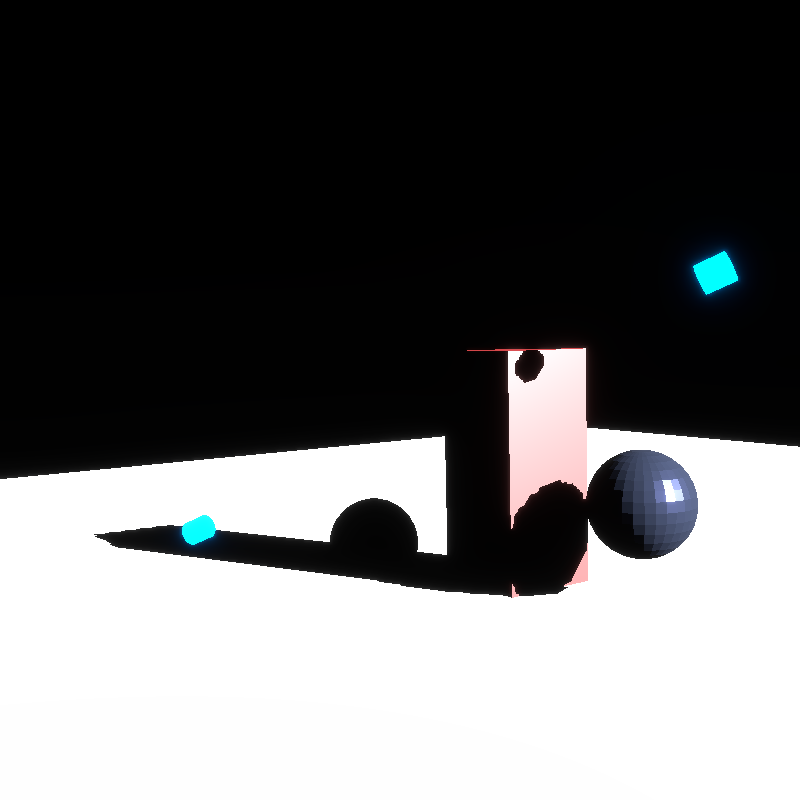

Point light shadow mapping update🔗

I've updated the shadow mapping code to include point light parsing out of GLTF files, which I had commented out while working on directional and spot lights.

Now point light shadow maps are being created, which are essentially six separate shadow maps, collected into a cube map.

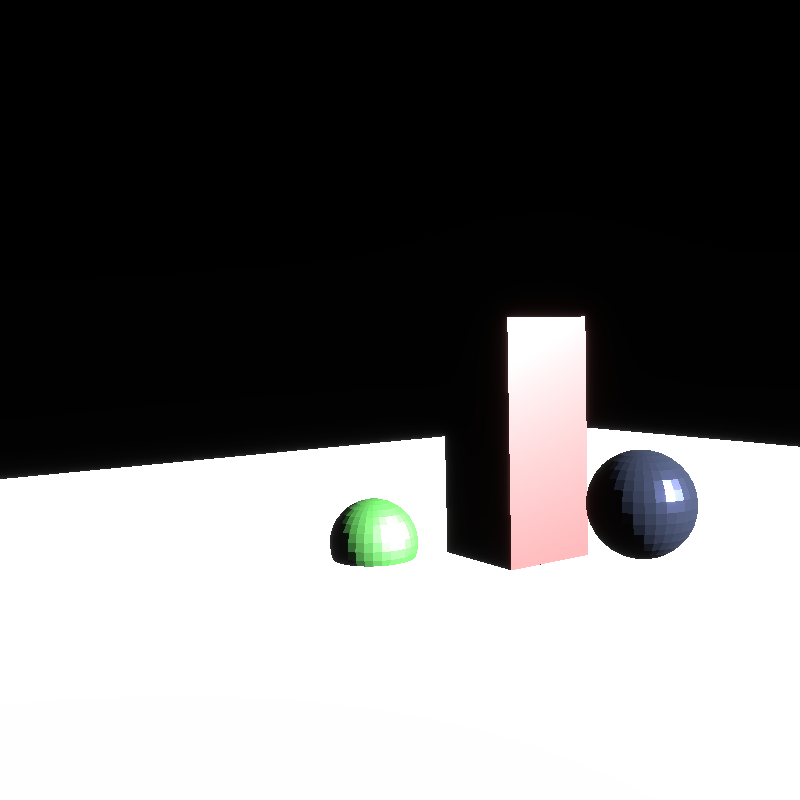

Here's an example of the six separate perspectives that make up the cube map:

These are then blitted into the shadow map atlas so they can be bound in the PBR shader together for multi-shadow-map shading:

I have a feeling there's a lot of repacking happening in the shadow map atlas... ... but that's for another time :)

The next step is to support sampling the cube maps as they are stored in the atlas.

Lucky for me we have a reference implementation in the GPU itself.

So this should be a matter of writing a series of unit tests.

Hand rolling cube-map sampling

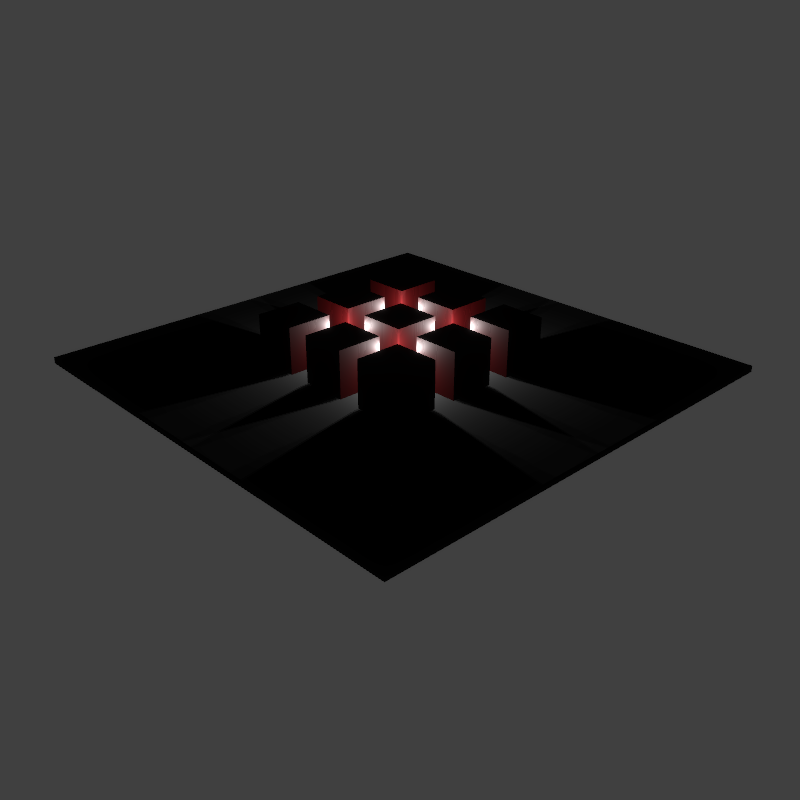

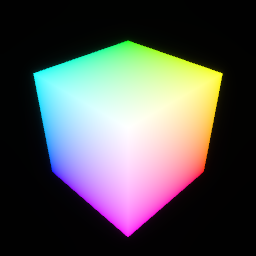

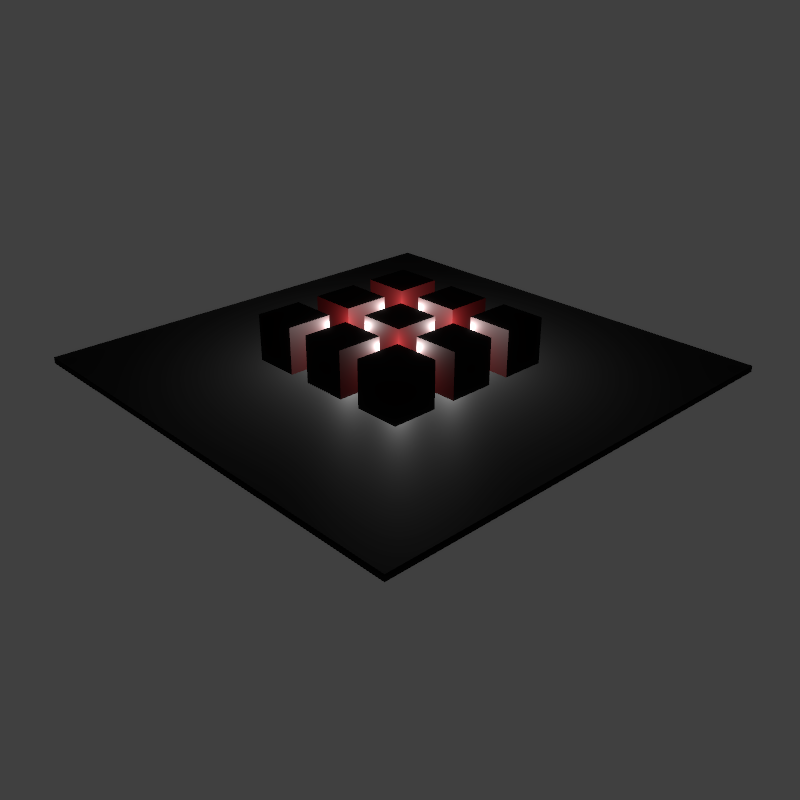

I'm going to start by creating a cubemap with known, colored corners:

This cubemap is nice because it's conceptually simple, and we should be able to verify that our sampling is correct without having to think too hard.

For example, sampling at (1.0, 1.0, 1.0) should return white, whereas sampling at

(-1.0, -1.0, -1.0) should return black.

Furthermore, sampling at (1.0, -1.0, -1.0), (-1.0, 1.0, -1.0) and (-1.0, -1.0, 1.0), should

return red, green and blue, respectively.

So this will not only allow us to verify the hand-rolled cubemap sampling we'll be writing, but it will also help us verify that the cubemap itself is put together correctly.

Let's construct the cubemap...

Sat 22 Feb, 2025🔗

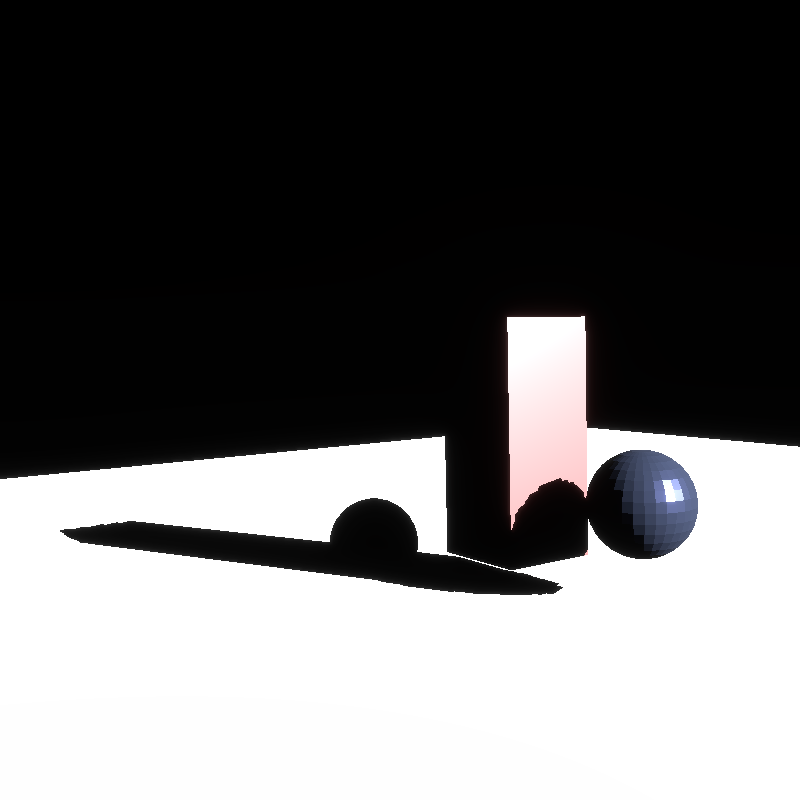

Glorious multi-shadow mapping for spot lights🔗

The only thing I needed to change (as far as shadow mapping) to support spot lights was to allow the library

user to provide a z_near and z_far for the light space projection.

I also had to fix spot lights' outgoing radiance calculations, as they were buggy to begin with, but now they're great!

Now for the last bit of shadow mapping work, support for point lights🔗

This will probably be the most complicated part, aside from all the architecture work it took to get to this point.

Point lights are special in that they cast light in all directions, which means to shadow map them we need to render depth to a cube map.

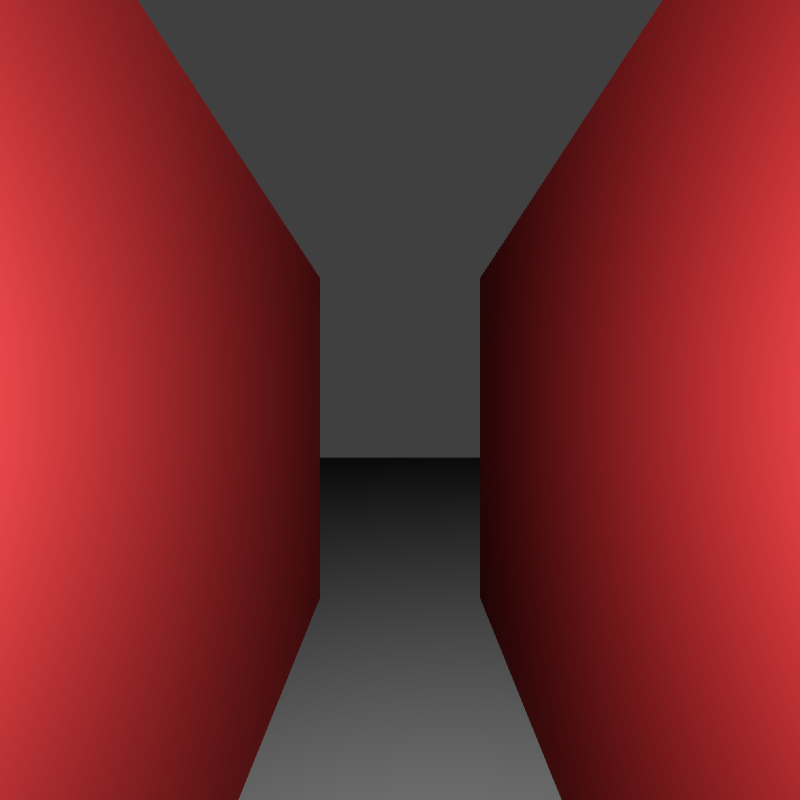

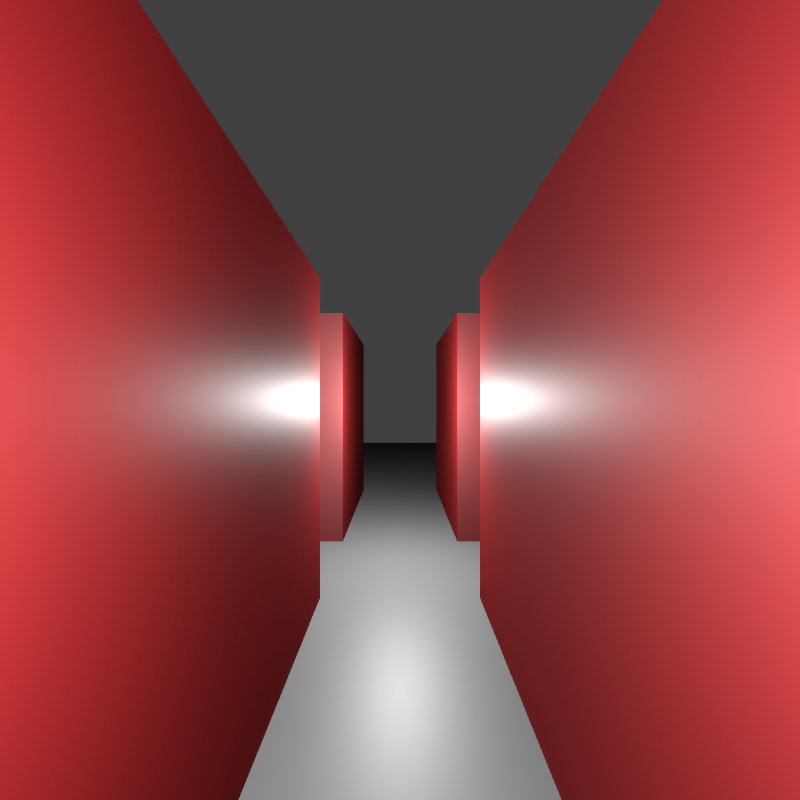

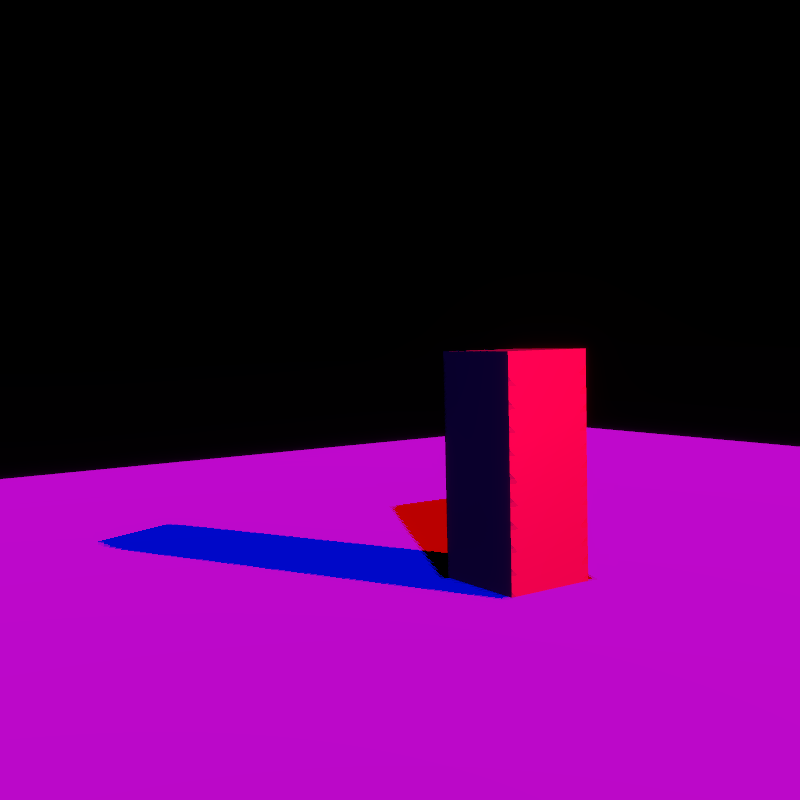

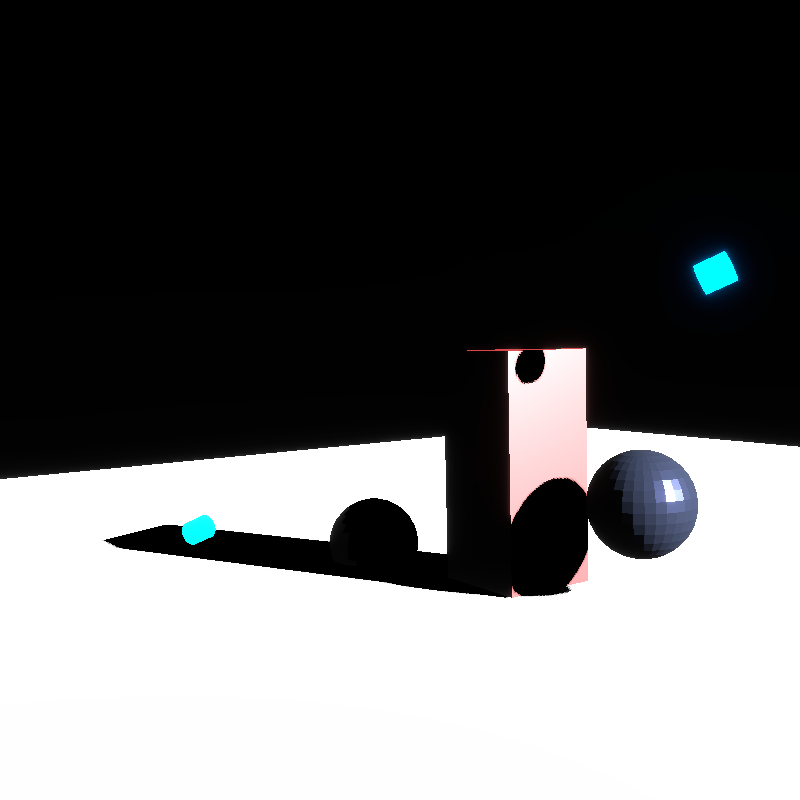

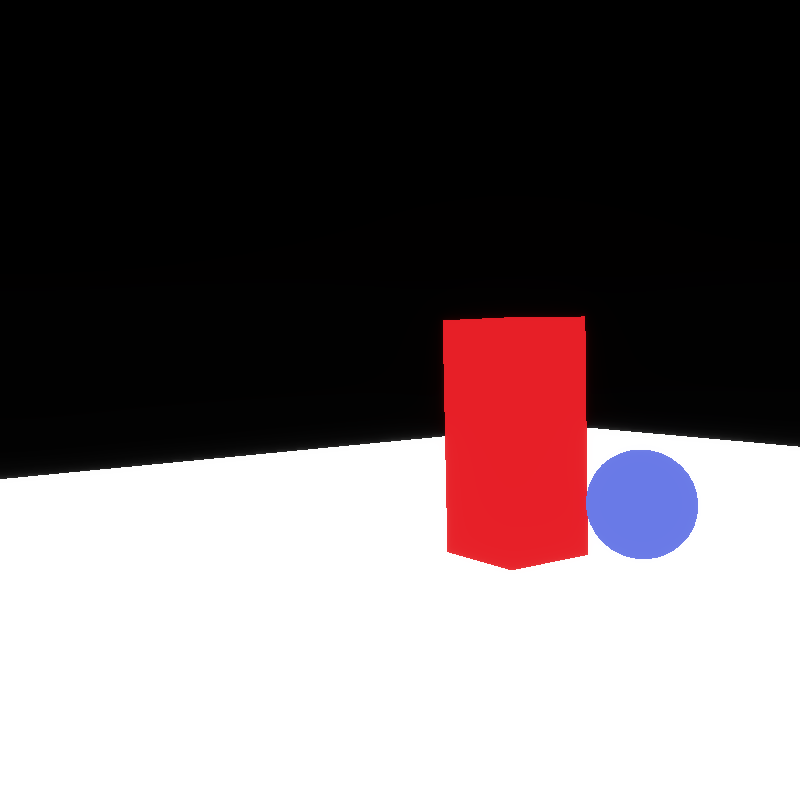

Here is our test scene as rendered in Blender with the EEVEE renderer:

And without any changes to Renderling, here's that same scene in Renderling:

Obviously the intensity is blown out. I still haven't settled on a unit for lighting. I'm roughly using candelas at the moment, but I need to focus on this at some point.

If I adjust the intensity of point lights coming from GLTF files the same way I do for spot lights we get this rendering:

That seems to make the intensity too low. But also, it looks like the point lights are lighting the base incorrectly.

Oh! It's because the base doesn't have a material, so it's defaulting to unlit.

I'll also adjust the intensity adjustment to be the same as directional lighting - that will make it more intense but let's just see how that looks:

Huh! Better. I'll roll with that for now.

Sat 15 Feb, 2025🔗

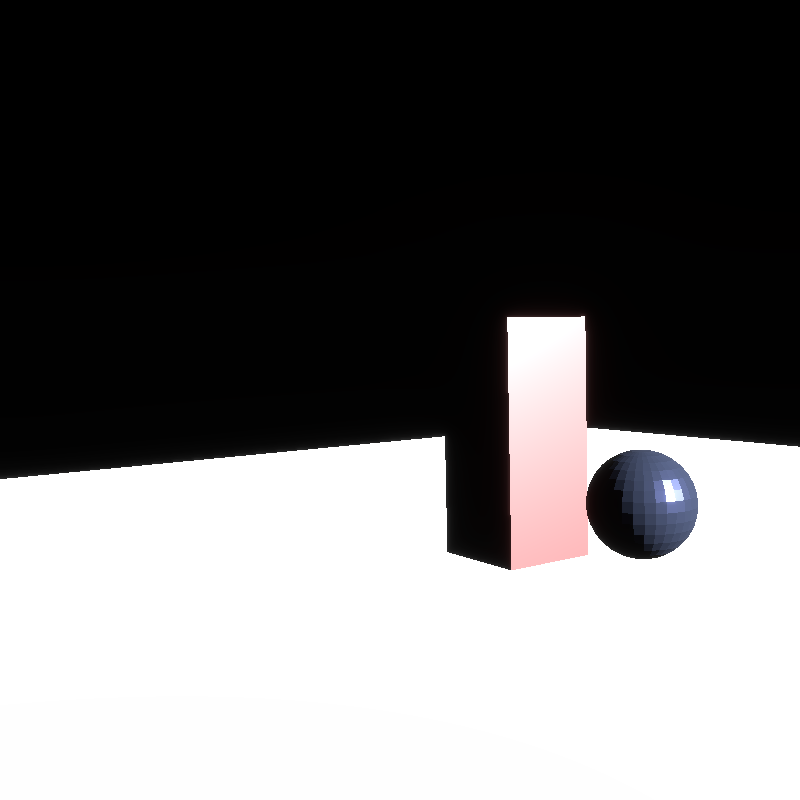

Glorious multi-shadow mapping🔗

There it is! It looks great! It's such a simple scene, but it's the culmination of so much work.

What's amazing is that I didn't change anything in Renderling since last week.

I stumbled across this rendering when modifying the test, which means the library code

under test is for the most part correct.

My guess is that there's something up with synchronization, because the change I made to the test rendered one frame without shadow mapping, and then another with shadow mapping, and the rendering with shadow mapping is what you see above.

Debugging shadow mapping synchronization issues🔗

Interestingly, it seems that merely rendering one frame of the scene first doesn't fix the issue. Instead, we must render one frame before creating the shadow maps.

If we render two frames after creating the shadow maps we see the same results as not creating any shadow maps.

That suggests that there's something special about the Stage::render function when run without shadow maps.

...

This actually might be incredibly simple, and hence why I've missed it.

The geometry slab is 64 bytes after the shadow map update, before

Stage::render.The geometry slab is 8 Kib after calling

Stage::render.

...I think this is a simple case of not synchronizing the geometry slab buffer. It probably has no geometry in it when the shadow maps are updated.

🤦

That's it!

Easy.

So this uncovers an issue with the current version of craballoc

(which manages the slabs), where there's no way to tell if the slab has any queued updates.

I'll change that now and then use that to ensure that the geometry slab gets committed before updating any

shadow maps.

...

And now it looks like everything is hunky dory:

😊

Sun 9 Feb, 2025🔗

Multiple shadow maps, hooking it all up🔗

I left off yesterday without shadows being rendered in the main renderer path.

I think this is because the shadow mapping is using the new "lighting slab" and expects everything related to lights and shadows to live there, whereas the stage/PRB shaders still think all that data is on the "geometry slab" (as I'm now calling it).

...

Ok - after running a few GPU traces on the main render function I can see that I missed a couple more writes to the slab.

After adding the necessary Ids, etc., it looks like I might have one last batch

of coordinate transformations to fix.

...

I fixed reading atlas images according to the atlas's underlying texture format.

I guess that means I've also (for the most part) finished support for different

texture formats in different Atlass.

Previously the Atlas's format had to be Rgba8.

...

Last thing to check are those sampling coordinates...

...

Ok, it looks like something really spooky happened. The stage/geometry slab is getting overwritten immediately. I'm thinking that maybe I passed the geometry slab to something that is expecting the light slab...

...

Nope! It was easier than that.

The Atlas now writes a descriptor of itself for shaders to access, but that means

the PBR descriptor must be written first, or it won't occur at index 0.

The Atlas was being created before the descriptor was getting written.

...

Et viola!

They still don't look great, but they are definitely functional.

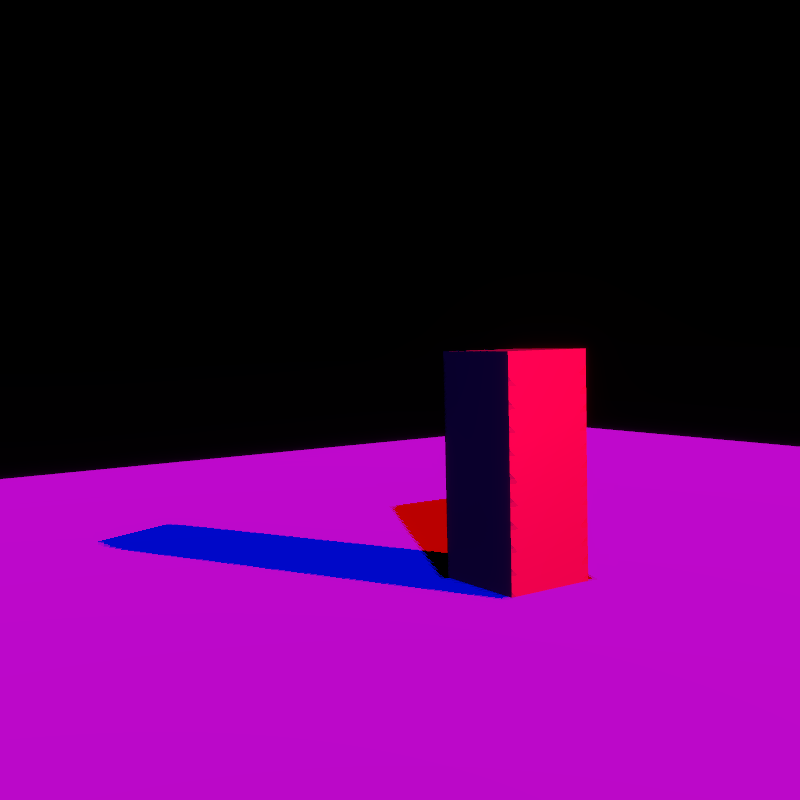

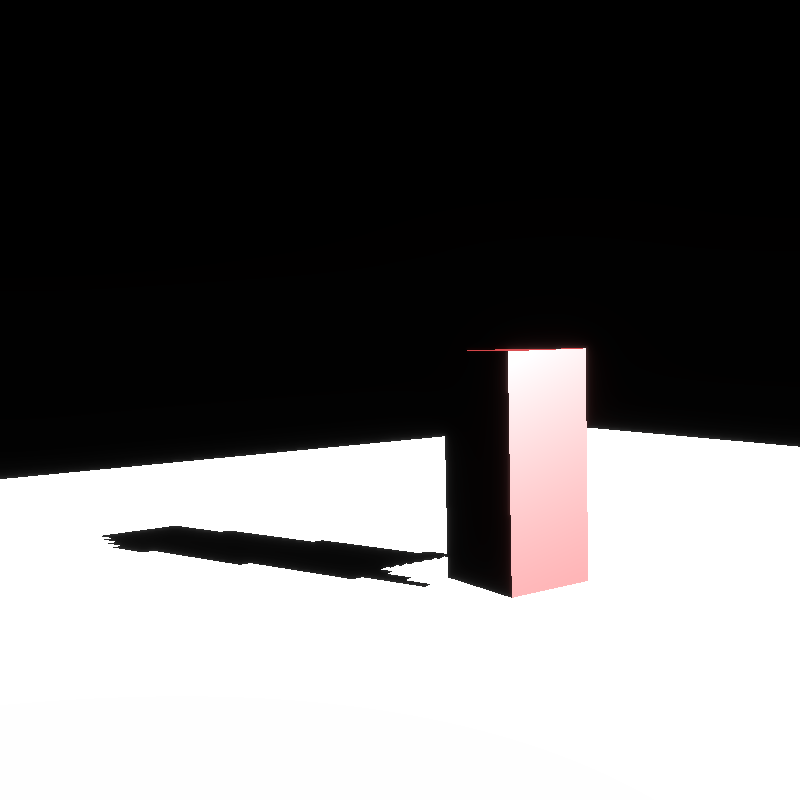

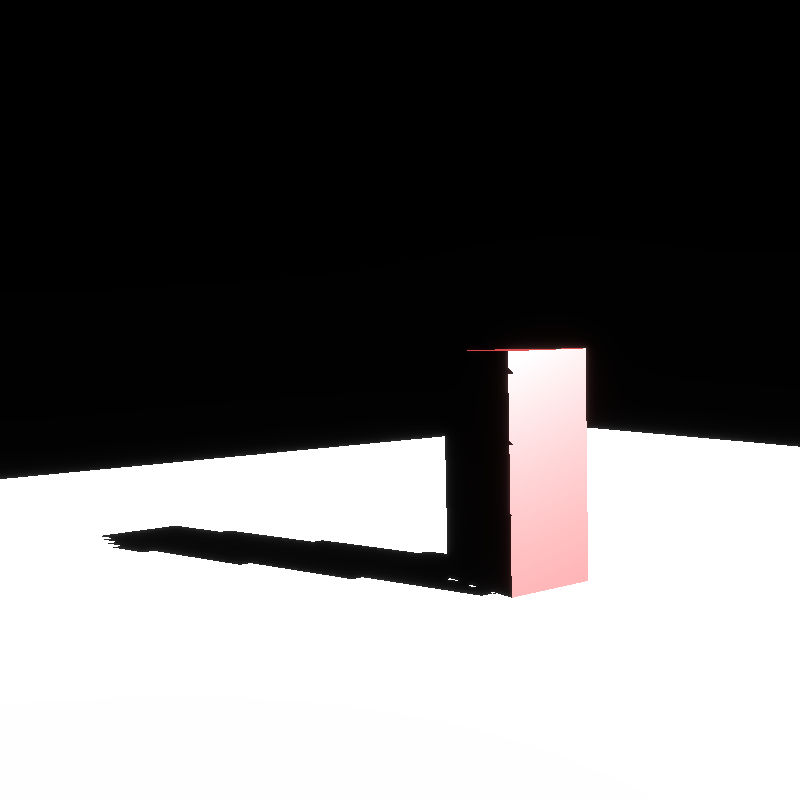

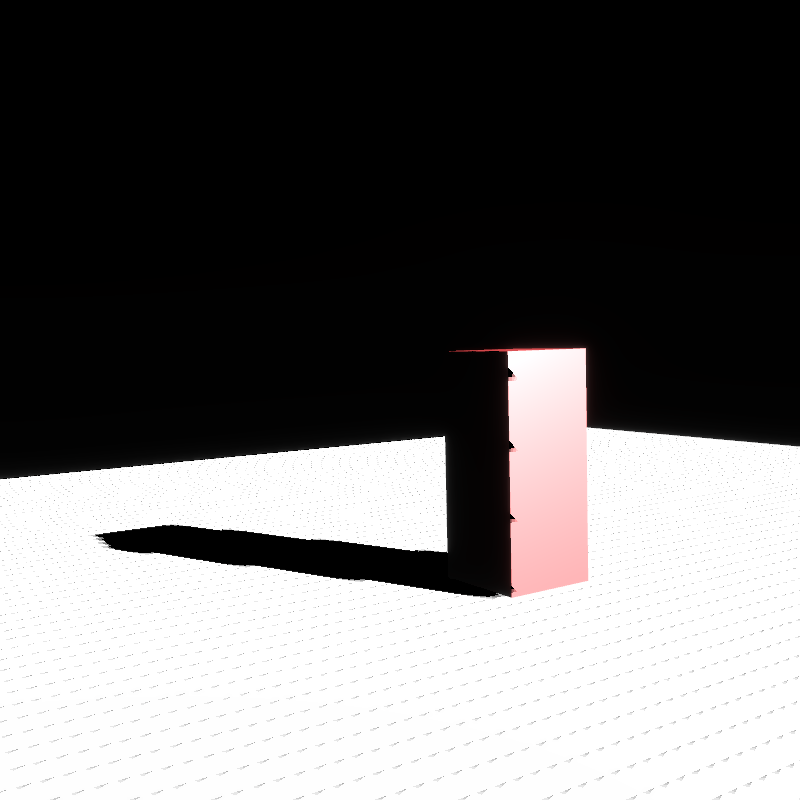

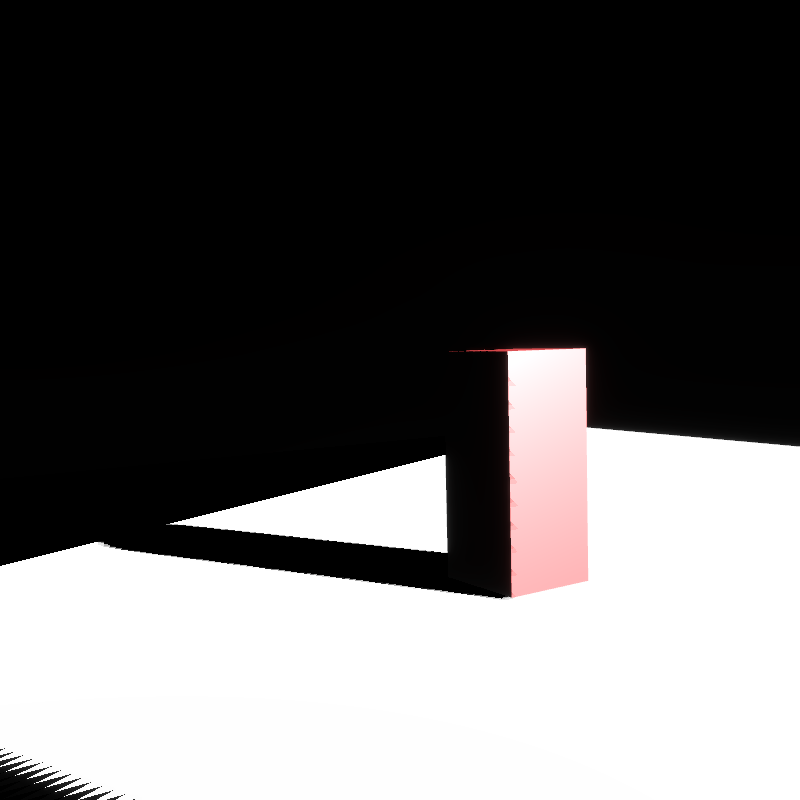

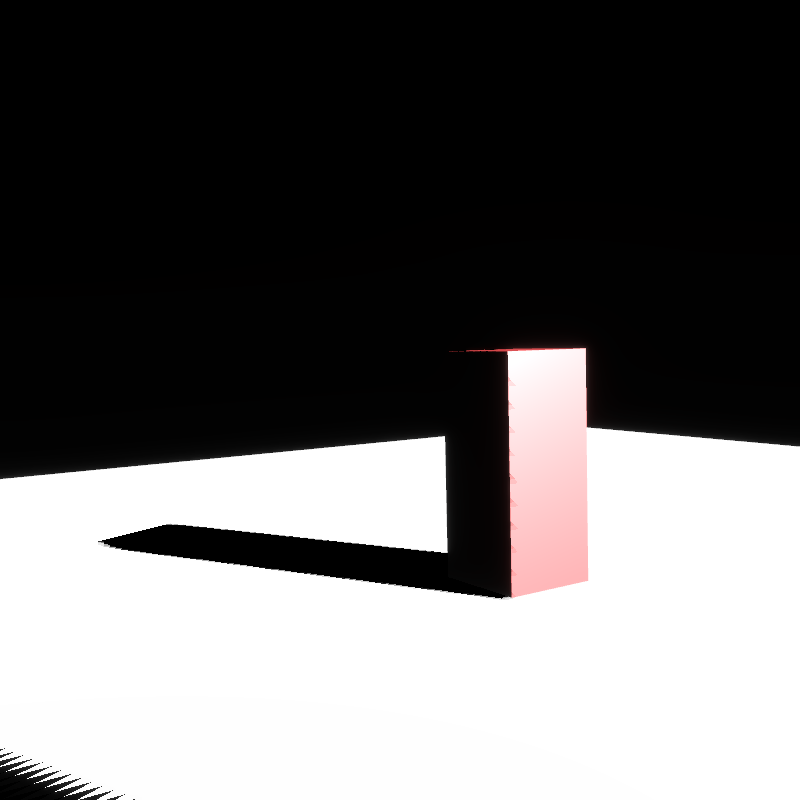

Taking a closer look at just one object🔗

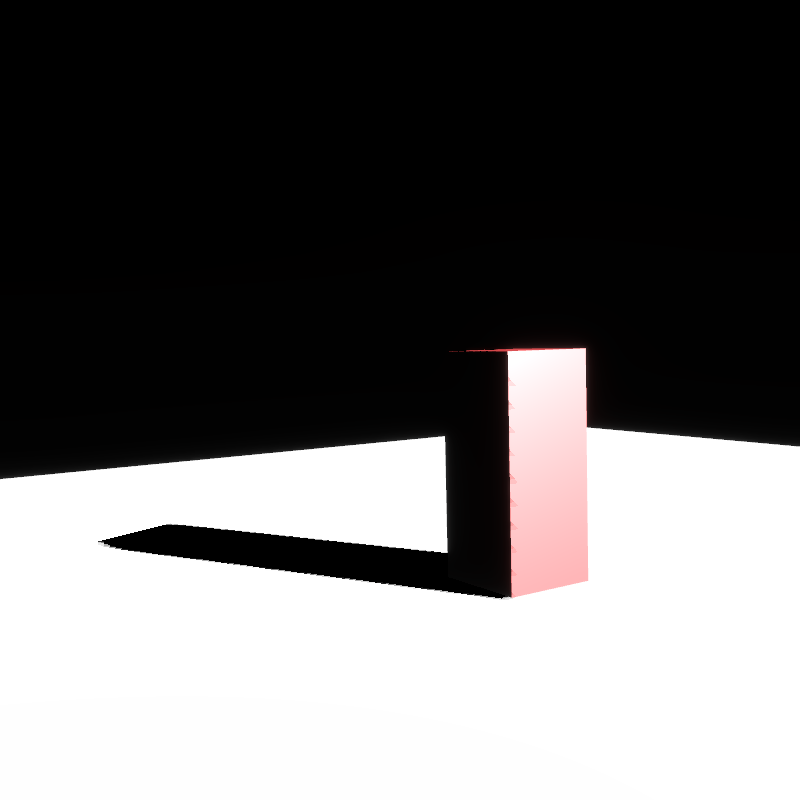

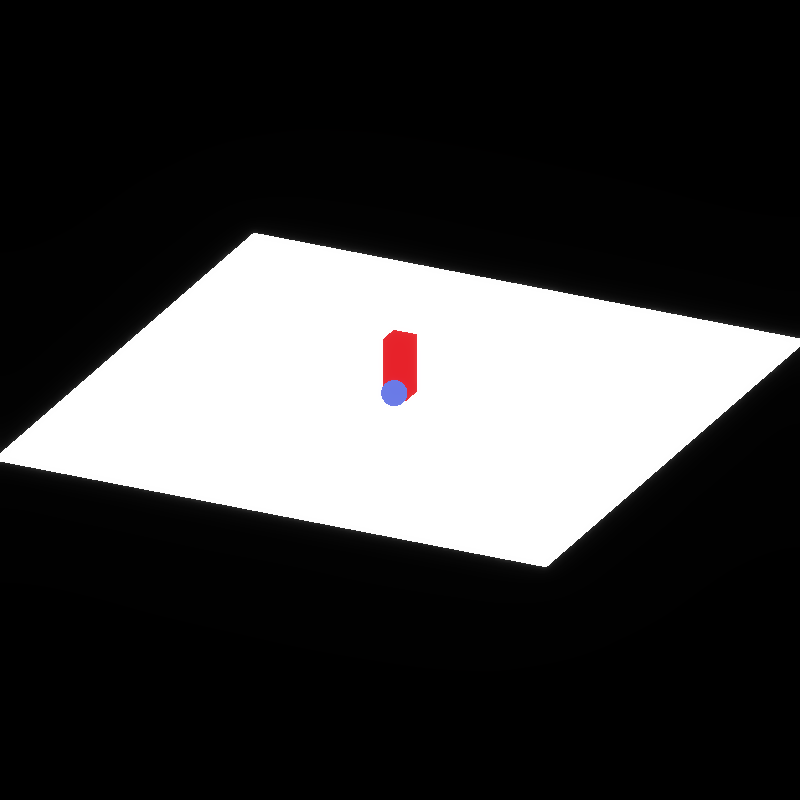

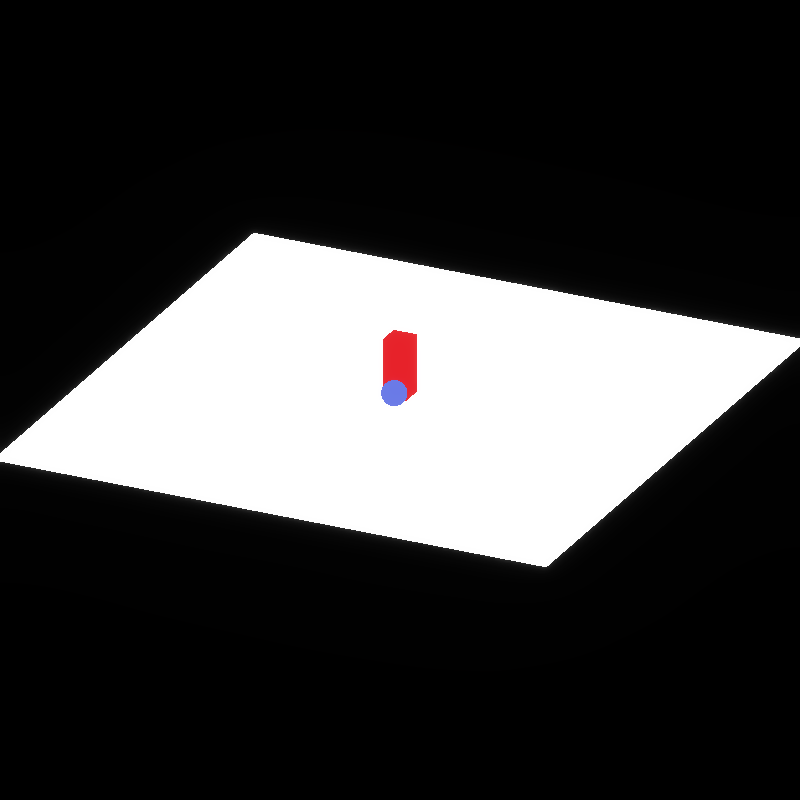

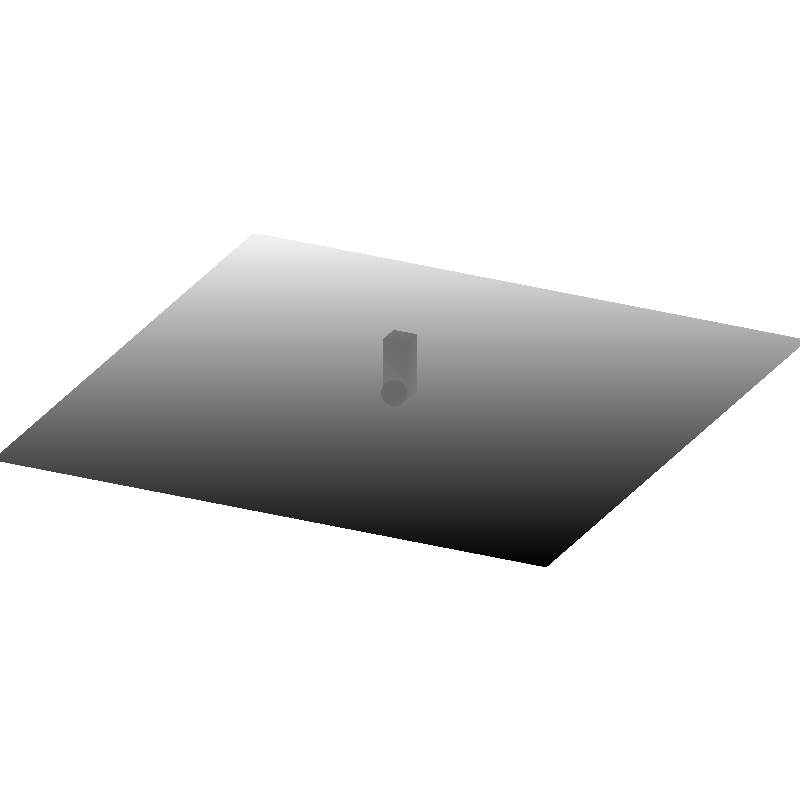

If we remove the other objects in the scene and only look at the red cuboid on the white plane, we can see that the shadow is very far away:

It feels like the shadow is disconnected - which we call "peter panning".

But is it actually peter panning? Or is this a product of having a relatively low-resolution shadow map?

I could imagine that since the light space transform projects the scene pretty far away from the camera, we're lacking the resolution near the edges of the shadow.

Or it could be the bias.

Let's rule out the bias.

...

Huh, that's a lot better.

Above I set the bias_min and bias_max to 0.0.

But you can still see that there's some "artifacting", and some odd tearing on the side of the cuboid.

My guess is this is related to the resolution.

Yeah, the learnopengl article on shadow mapping talks about this in the PCF section:

Because the depth map has a fixed resolution, the depth frequently usually spans more than one fragment per texel. As a result, multiple fragments sample the same depth value from the depth map and come to the same shadow conclusions, which produces these jagged blocky edges.

You can reduce these blocky shadows by increasing the depth map resolution, or by trying to fit the light frustum as closely to the scene as possible.

Yeah, I figured as much.

But it goes on to explain PCF:

Another (partial) solution to these jagged edges is called PCF, or percentage-closer filtering, which is a term that hosts many different filtering functions that produce softer shadows, making them appear less blocky or hard. The idea is to sample more than once from the depth map, each time with slightly different texture coordinates. For each individual sample we check whether it is in shadow or not. All the sub-results are then combined and averaged and we get a nice soft looking shadow.

So let's see how easy that is. Seems like it might be a shader-only change, which I like.

...

Eh. It's kinda better.

But! Those artifacts on the side of the cuboid are worse now.

I think maybe we need to choose a better frustum.

...

Choosing a tighter depth for the light space projection improves things quite a bit,

as well as fixing a couple little finicky bugs in the shader, and also playing with

the values for min_bias and max_bias:

But check it out - you can see the borders of the shadow map now - it presents as a big jaggy line on the bottom left corner, and our plane is cut off by the ends of the shadow map to the left. This is because that area is beyond the far plane of the light space transform's frustum.

The fix for this should be easy - if the input clip coords of the fragment position in

light space is greater than 1.0 (which denotes it's outside of light space), we return

0.0 for the shadow:

That's much better! We're getting close to shipping this.

There's still that big jaggy in the lower left, though.

I'm guessing this is another border problem. We should probably check the other dimensions.

Lastly, let's use multiple shadow maps🔗

Here's the moment of truth! Let's set up a scene with more lights, that way we can see multiple shadow maps in action!

...

Aaaaaand. It's not working. The lighting is there, but the shadows are not.

Whelp! That's a wrap for now.

Sat 8 Feb, 2025🔗

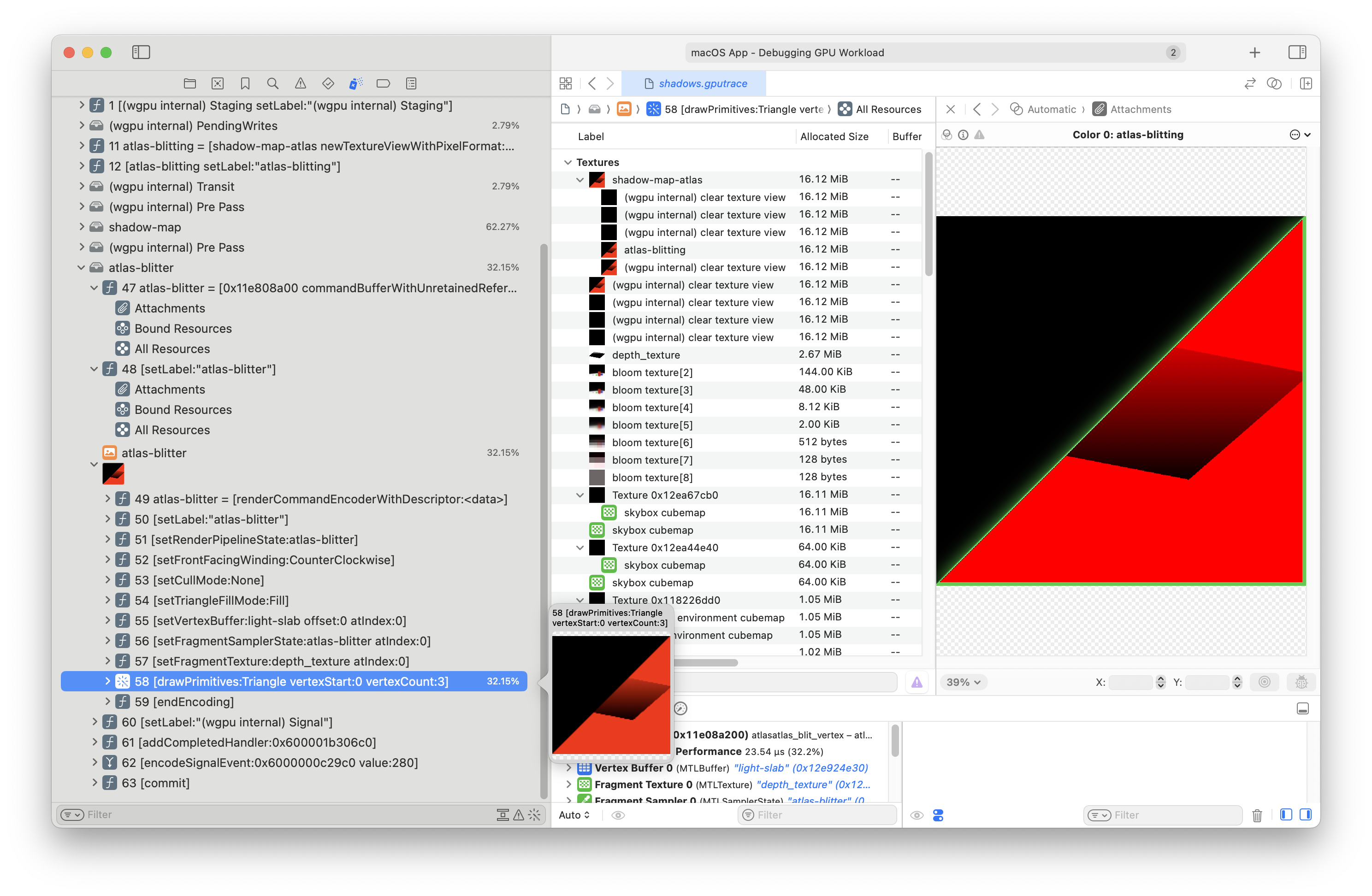

Finishing up shadow mapping with multiple shadow maps🔗

I have shadow mapping with multiple shadow maps compiling. I don't have it working, though.

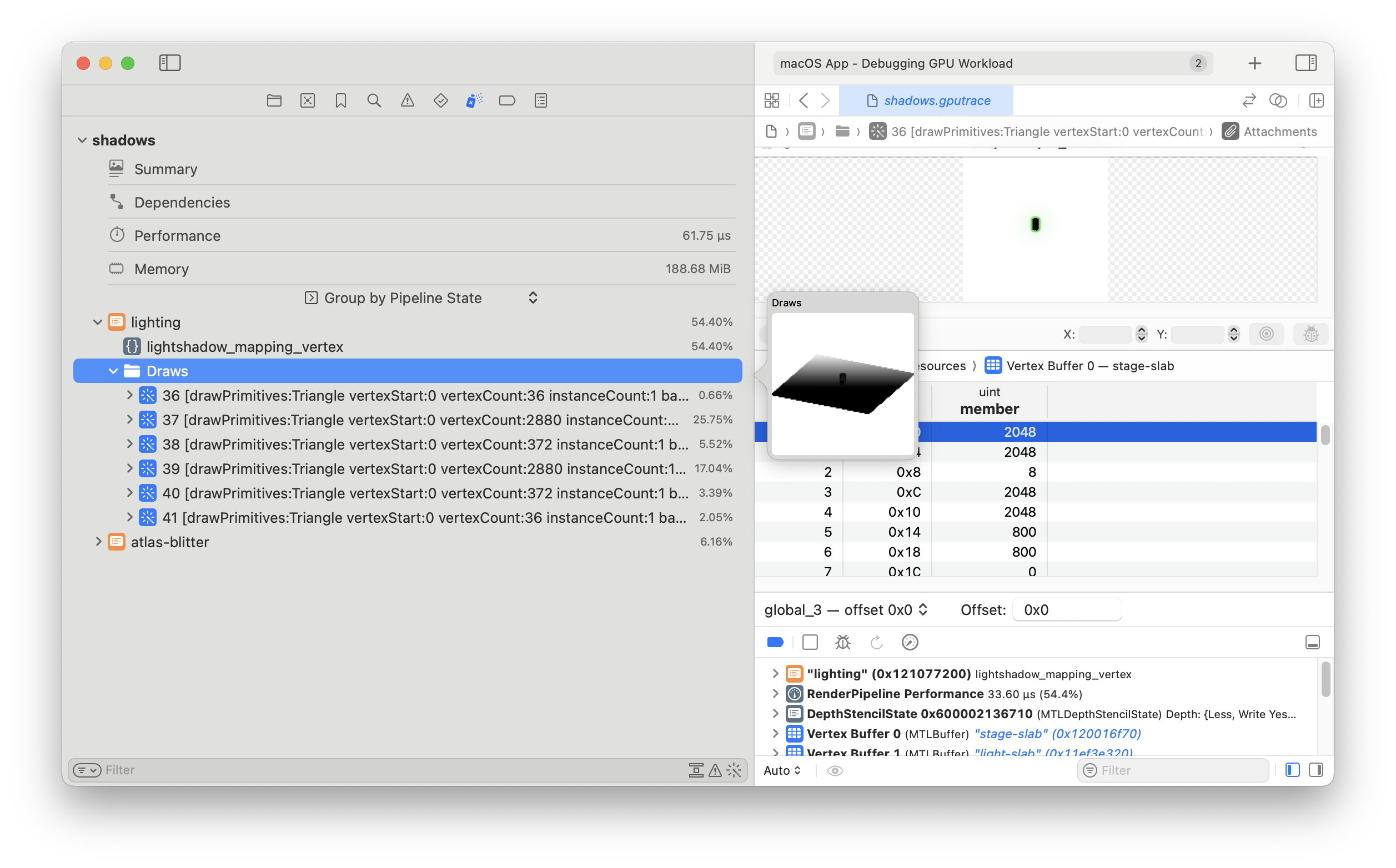

I'm going to debug using GPU traces.

From the GPU trace file it looks like generating the shadow map depth texture is correct, but blitting it to the shadow map atlas isn't working.

The blitter includes some kind-of funky math for figuring out what clip coords to emit in the vertex shader in order to blit to the correct subsection of the atlas. I bet that's what's going on.

To make sure, I'll change the shader to blit to the entire texture...

You can already see that we're not blitting the whole frame:

But hey - this programmatic GPU tracing is really helpful.

...

Well I found (one of) the culprit(s) inside the atlas blitter:

pass.draw(0..3, 0..1);

So it's not drawing a full quad, and it's not sending over the id of the AtlasBlittingDescriptor as

the instance.

After changing those I'll check the math in the shader.

...

It's now drawing the full frame. Now I'll enable drawing to the subsection of the atlas.

...

So we're back to a fully black frame. I'll run it on the CPU and see what's up.

...

Ha! That's it. Here's the print:

atlas_blitting_desc_id: Id(39)

atlas_blitting_desc: AtlasBlittingDescriptor { atlas_texture_id: Id(null), atlas_desc_id: Id(null) }

thread 'light::cpu::test::shadow_mapping_sanity' panicked at /Users/schell/.cargo/registry/src/index.crates.io-6f17d22bba15001f/crabslab-0.6.3/src/lib.rs:39:6:

index out of bounds: the len is 63 but the index is 4294967295

All those (null) means that I simply didn't write those pointers to the slab...

...

Alright, after updating the slab correctly before each blit, I now see some funky coords.

Here's my unit test:

// Inspect the blitting vertex #[derive(Default, Debug)] struct AtlasVertexOutput { out_uv: Vec2, out_clip_pos: Vec4, } let mut output = vec![]; for i in 0..6 { let mut out = AtlasVertexOutput::default(); crate::atlas::atlas_blit_vertex( i, shadows.blitting_op.desc.id(), &light_slab, &mut out.out_uv, &mut out.out_clip_pos, ); output.push(out); } panic!( "clip_pos: {:#?}", output .into_iter() .map(|out| out.out_clip_pos) .collect::<Vec<_>>() );

And that outputs the following print:

clip_pos: [

Vec4(

-1.0,

-1.0,

0.5,

1.0,

),

Vec4(

0.5625,

-1.0,

0.5,

1.0,

),

Vec4(

0.5625,

-1.0,

0.5,

1.0,

),

Vec4(

0.5625,

-1.0,

0.5,

1.0,

),

Vec4(

-1.0,

-1.0,

0.5,

1.0,

),

Vec4(

-1.0,

-1.0,

0.5,

1.0,

),

] Which should be "[bottom left, bottom right, top right, top right, top left, bottom left]", but it's obviously degenerate.

This is the AtlasTexture in question (the one we're blitting into):

AtlasTexture {

offset_px: UVec2(0, 0),

size_px: UVec2(800, 800),

layer_index: 0,

frame_index: 0,

modes: TextureModes { s: ClampToEdge, t: ClampToEdge }

}And this is the atlas descriptor:

AtlasDescriptor { size: UVec3(1024, 1024, 4) }I can pop these into a smaller unit test.

...

Turns out the conversion from clip space to texture coords was wrong.

I had this:

let input_uv = (clip_pos + Vec2::splat(1.0)) * Vec2::new(0.5, -0.5);

When what I meant was this:

let input_uv = (clip_pos * Vec2::new(1.0, -1.0) + Vec2::splat(1.0)) * Vec2::splat(0.5);

I just didn't realize I needed to flip Y first.

But wait, there's more!

It also turns out that the conversion from texture coords back to clip space was wrong!

I had:

uv * Vec2::new(2.0, 2.0) - Vec2::splat(1.0)

When what I wanted was this:

(uv * Vec2::new(2.0, 2.0) - Vec2::splat(1.0)) * Vec2::new(1.0, -1.0)

So really I guess this is the same bug but in two places. In both cases I had the "flip Y" thing wrong in one way or another.

...

WOOOOT!

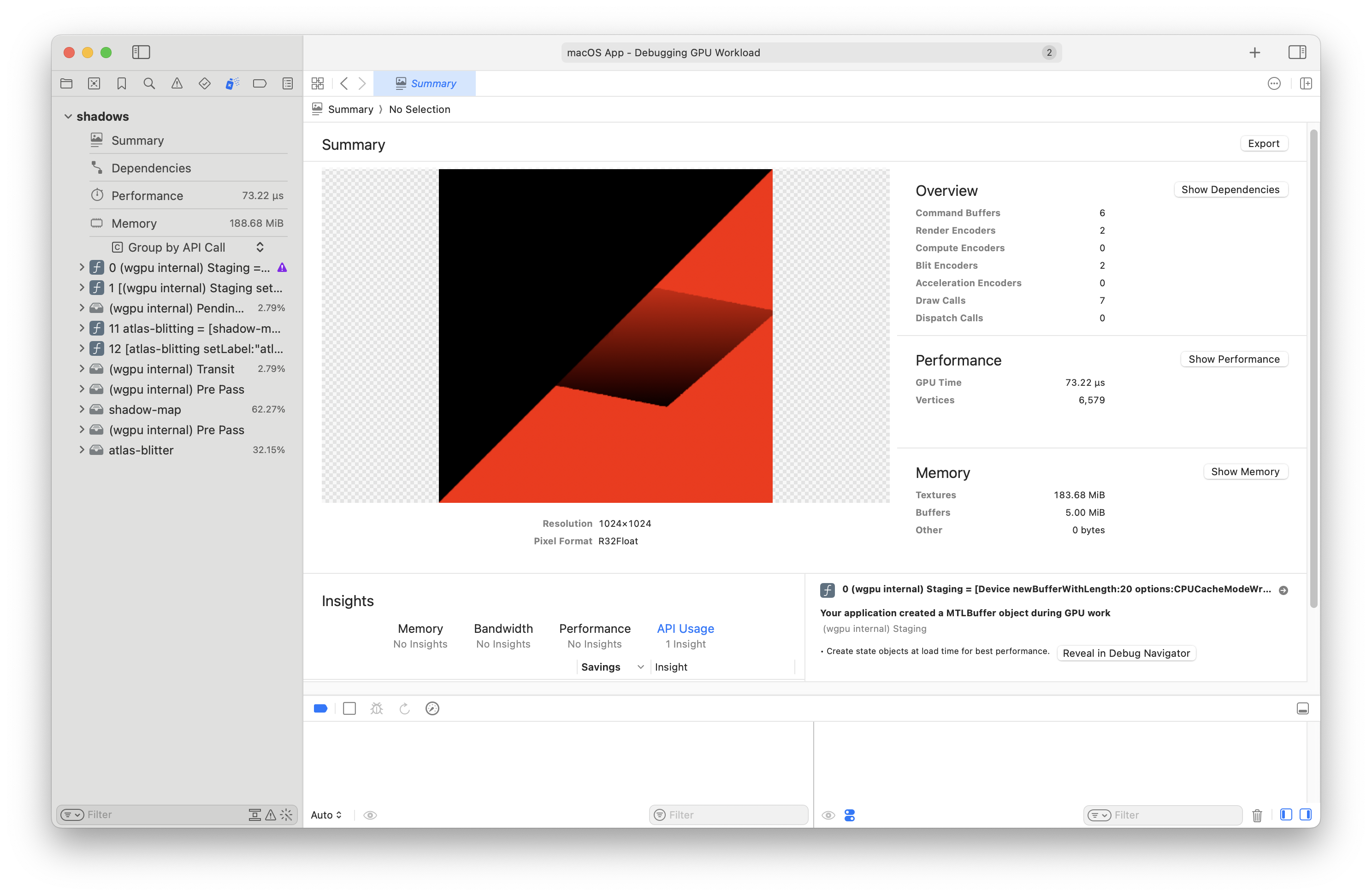

That worked. Now it looks like the shadow map atlas is populated:

...

Now, shadows are still not being rendered, but at least I know this setup is correct.

Thur 6 Feb, 2025🔗

It's Waitangi day 🇳🇿 🏝️!

craballoc now has HybridWriteGuard🔗

I added HybridWriteGuard to

craballoc.

It provides an alternative way of modifying values that might be more familiar to people.

Continuing the multiple shadow map update🔗

I'm still working on supporting multiple shadow maps per Renderlet in the forward shader.

The approach is to store the shadow maps together in a texture atlas, and to store their

descriptors on the lighting slab.

Continuing from yesterday are things to remember to circle back to:

Ensure lights and shadow maps are stored on the light slab.

Use the

AnyliticalLightBundlestruct

Store and invalidate the lighting bindgroup

It's being recreated each frame

Support configuring the

Atlas's inner texture format.Shadow maps are 32-bit depth

...

It looks like I can't copy to Depth32Float textures...

Copying to textures with format Depth32Float and aspect All is forbidden

Related WebGPU spec on depth formats.

But from the spec it looks like I can bind another texture type as the depth, though, so I can try that.

Actually it seems that it might be easier to use a separate depth texture for the shadow update, then copy that to the atlas separately.

...

UGH

Source format (Depth32Float) and destination format (R32Float) are not copy-compatible (they may only differ in srgb-ness)

Ok, so it looks like I can't even copy the depth texture.

I feel like I ran into this during occlusion culling as well. Maybe not, but similar.

...

Looks like someone made a nice TextureBlitter utility in wgpu for this.

I'll see if I can use that.

...

Turns out I can't use the built-in TextureBlitter, because I need to blit to a sub-section of the target texture,

so I'll have to write my own blitter.

Wed Feb 5, 2025🔗

Things to remember when adding support for multiple shadow maps:

Ensure lights and shadow maps are stored on the light slab.

Store and invalidate the lighting bindgroup

Tues Jan 27, 2025🔗

Fixing shadow mapping peter panning🔗

Yesterday I worked on addressing the shadow acne in Renderling's shadow mapping feature.

Today I'll be addressing "peter panning", which is when the shadow of an object doesn't seem to line up with the object itself - called as such because of how Peter Pan's shadow tends to misbehave in the Disney movie.

This should be relatively easy to fix by using front-face culling during the shadow map update. We'll see.

...

Ok! First thing - I didn't realize that the red cuboid in the scene was actually floating above the plane. So there really wasn't any peter panning going on. But I fixed it anyway.

Now at least for this scene, the bias isn't needed.

I've set the default bias to be 0.0.

I also added some emissive cylinders to show the light direction:

Mon Jan 27, 2025🔗

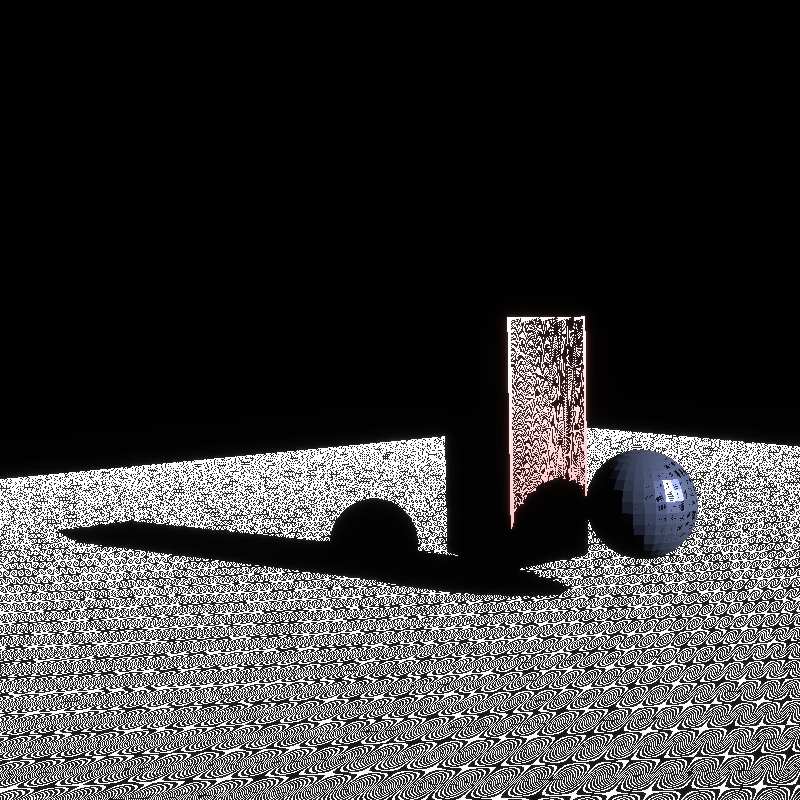

Fixing shadow mapping acne🔗

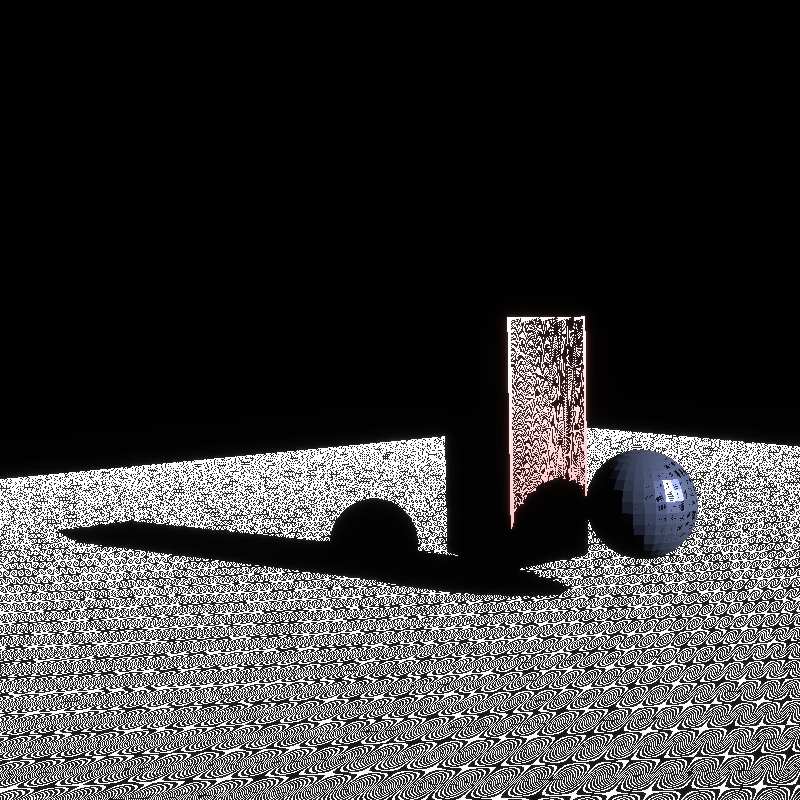

I left off last night being able to render some shadows, finally:

...and as you can see, there's some pretty bad "shadow acne".

Shadow acne is a Moiré-like pattern that appears when the angle between the light source and the surface is small, and that is exasperated by a small shadow map size. The smaller the size of the shadow map, the more likely to see acne.

There's a pretty easy and well known fix, which is to include a bias that is proportional to the angle.

...

After adding some configurable bias, the acne goes away:

Sun Jan 26, 2025🔗

The shadow mapping saga continues 2🔗

Yesterday I left off knowing that the PBR fragment shader's shadow calculations

seem fine, so today I'm going to look at the wgpu linkage to determine why the

shadow is not displaying.

I'm waiting for a facepalm moment and expecting that I'll find a buffer that has been invalidated or something.

...

I can't see that anything is off-kilter.

I'm going to nuke the shaders and try again.

...

Nothing.

Frustrating!

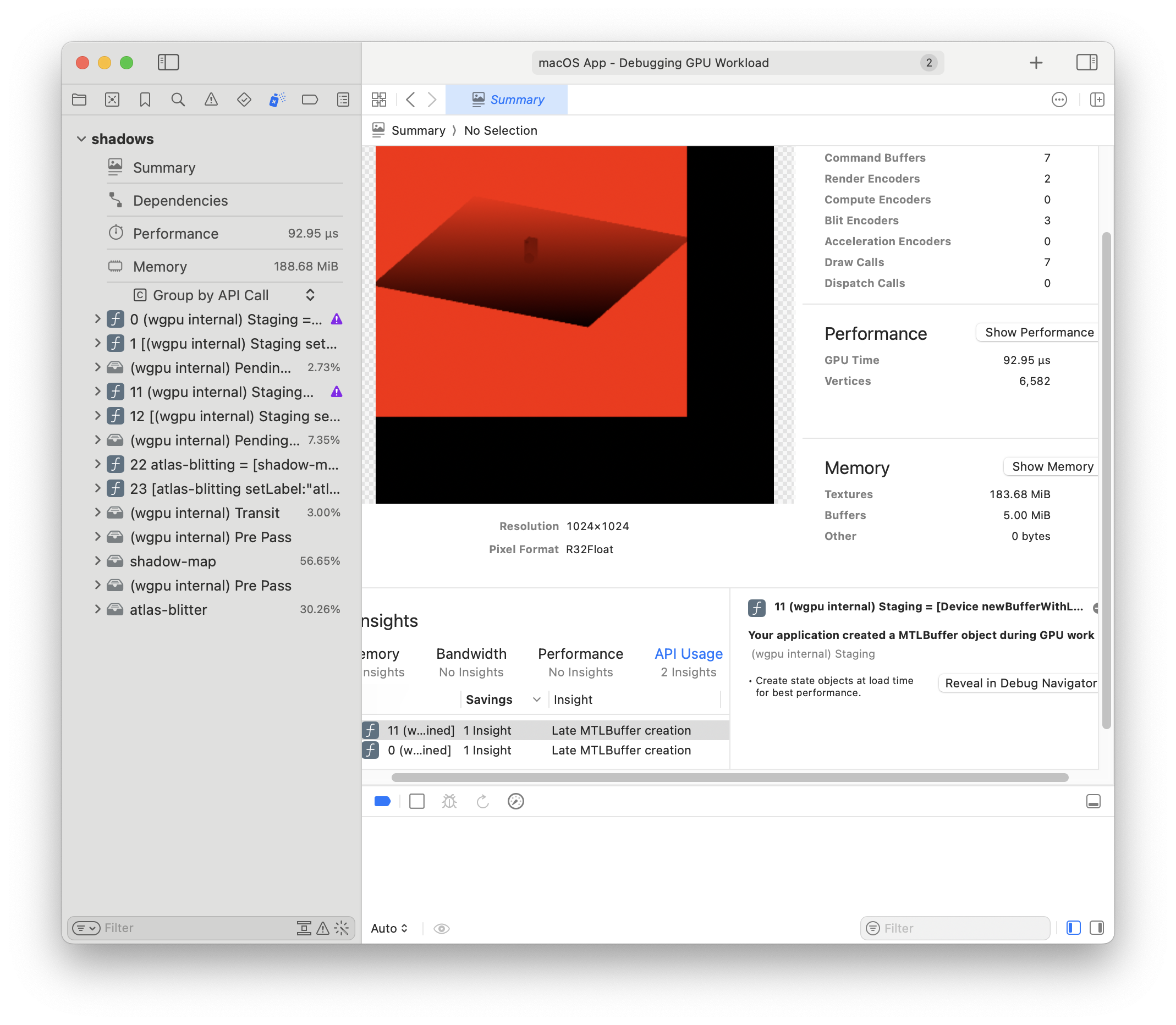

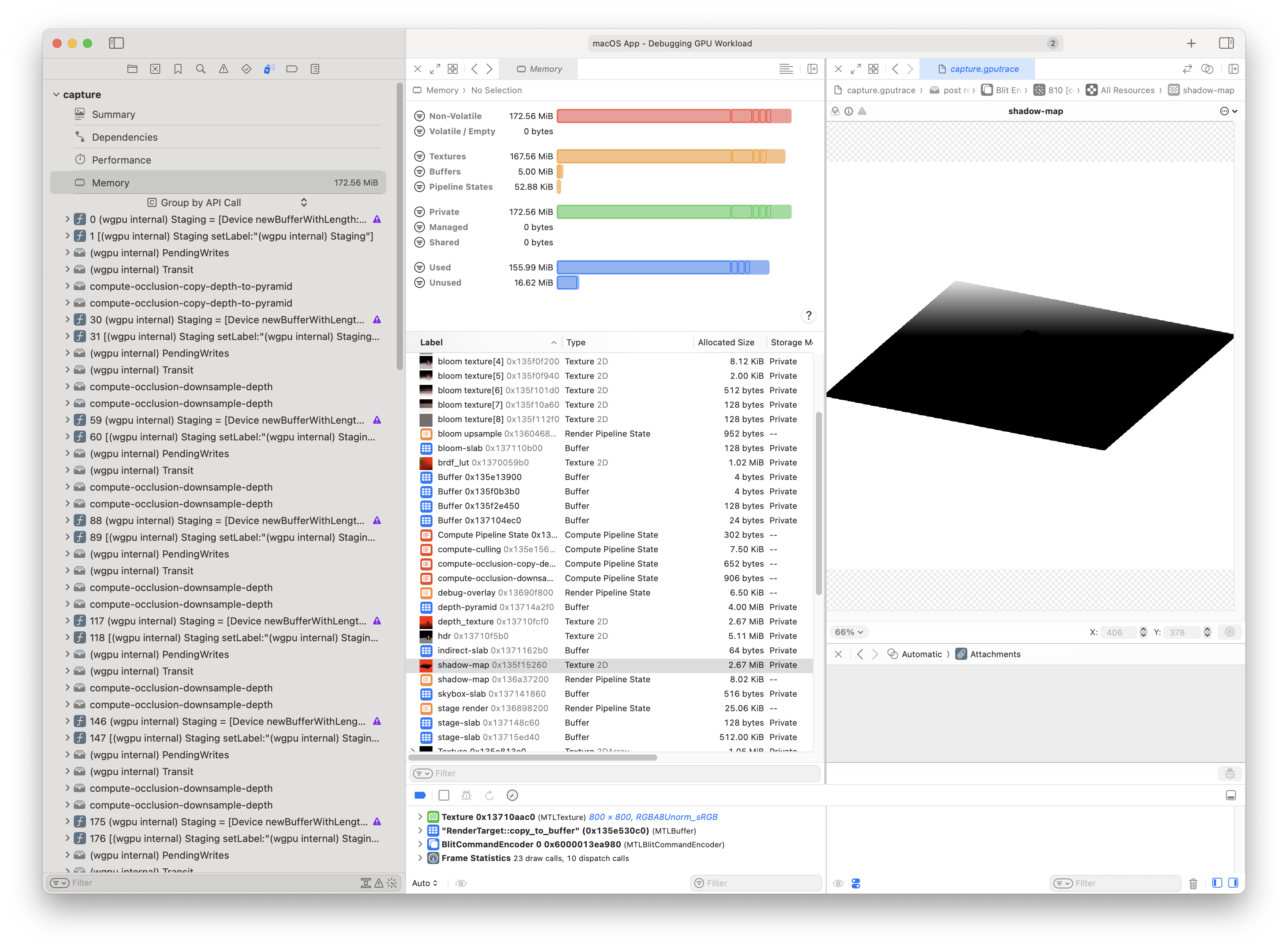

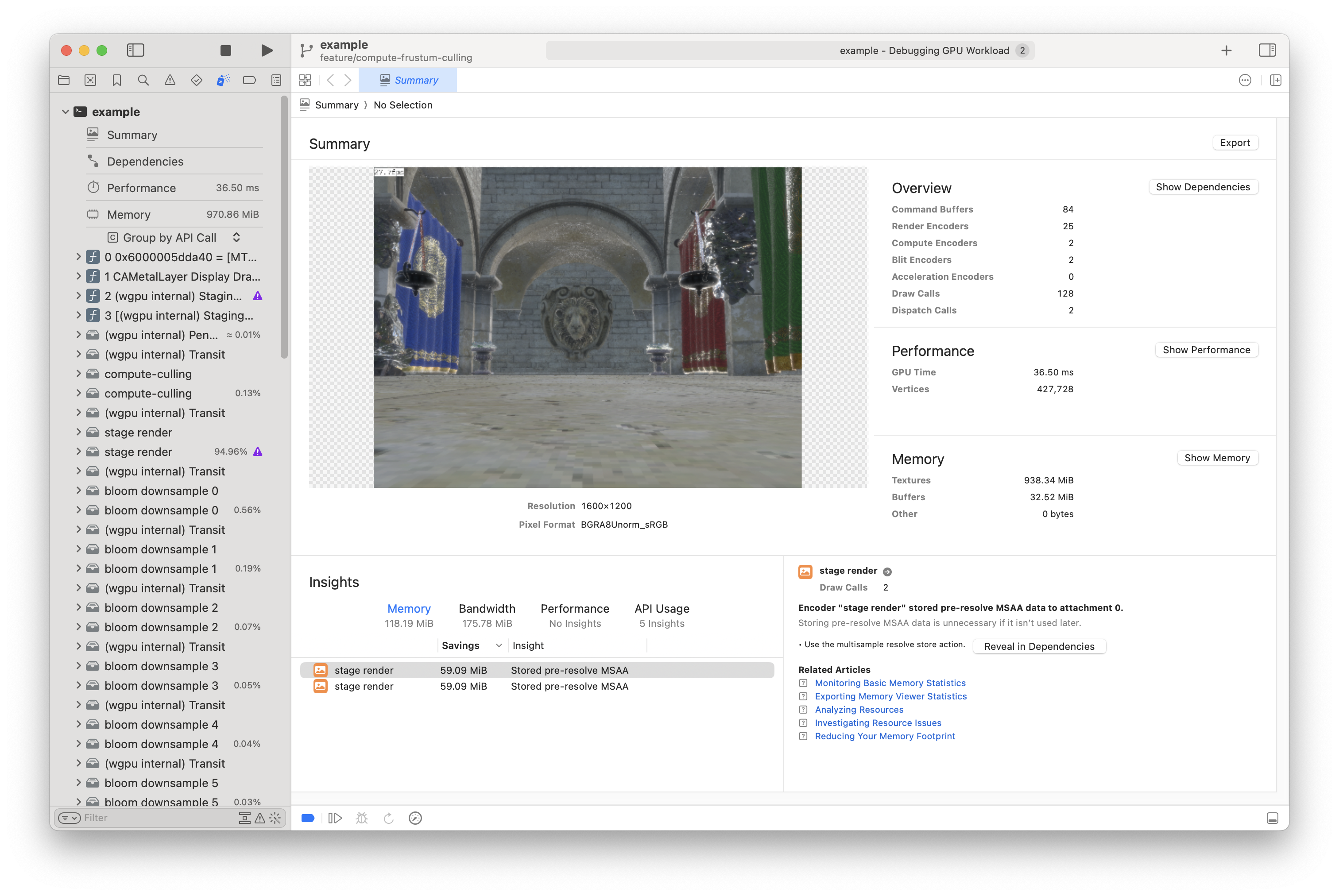

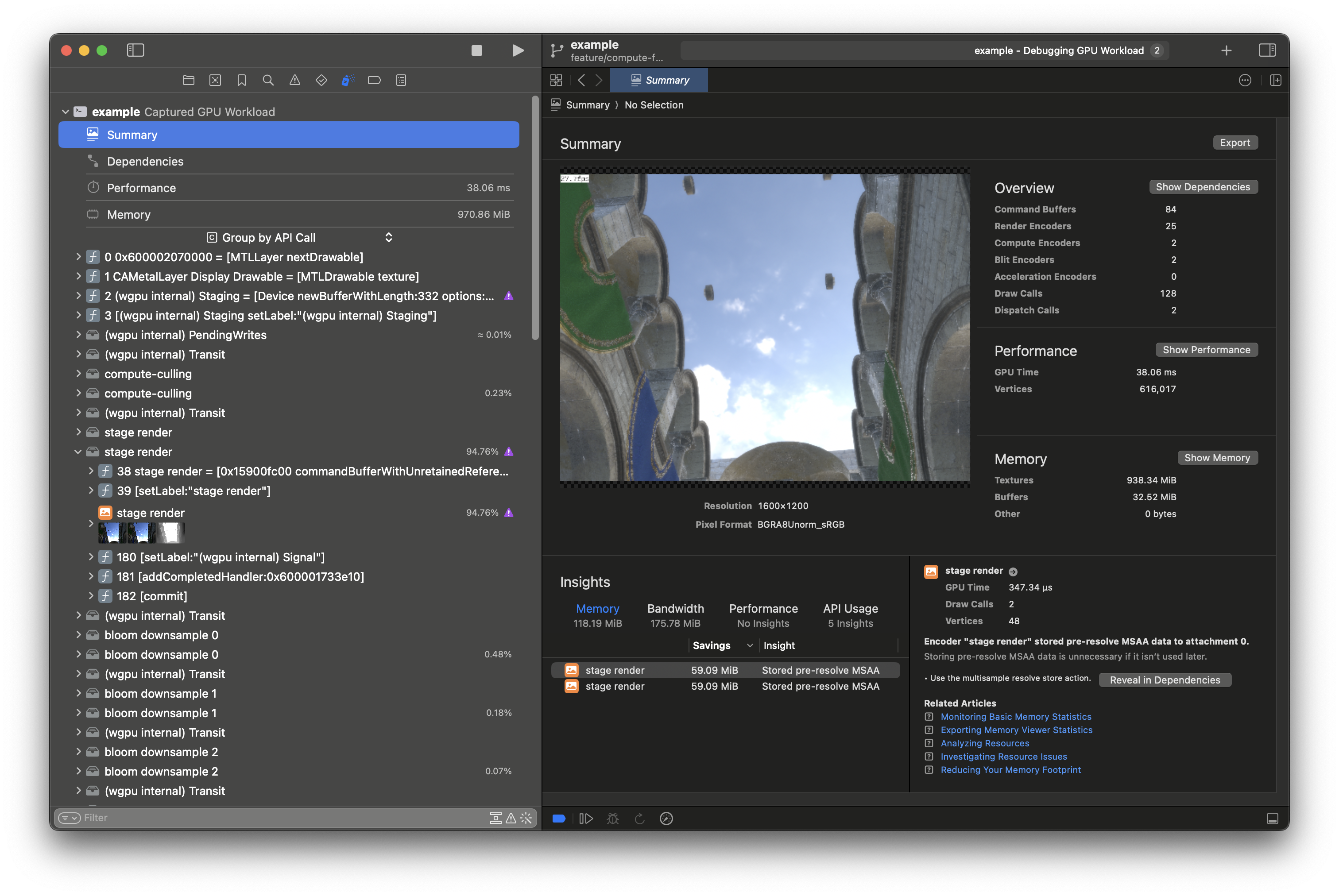

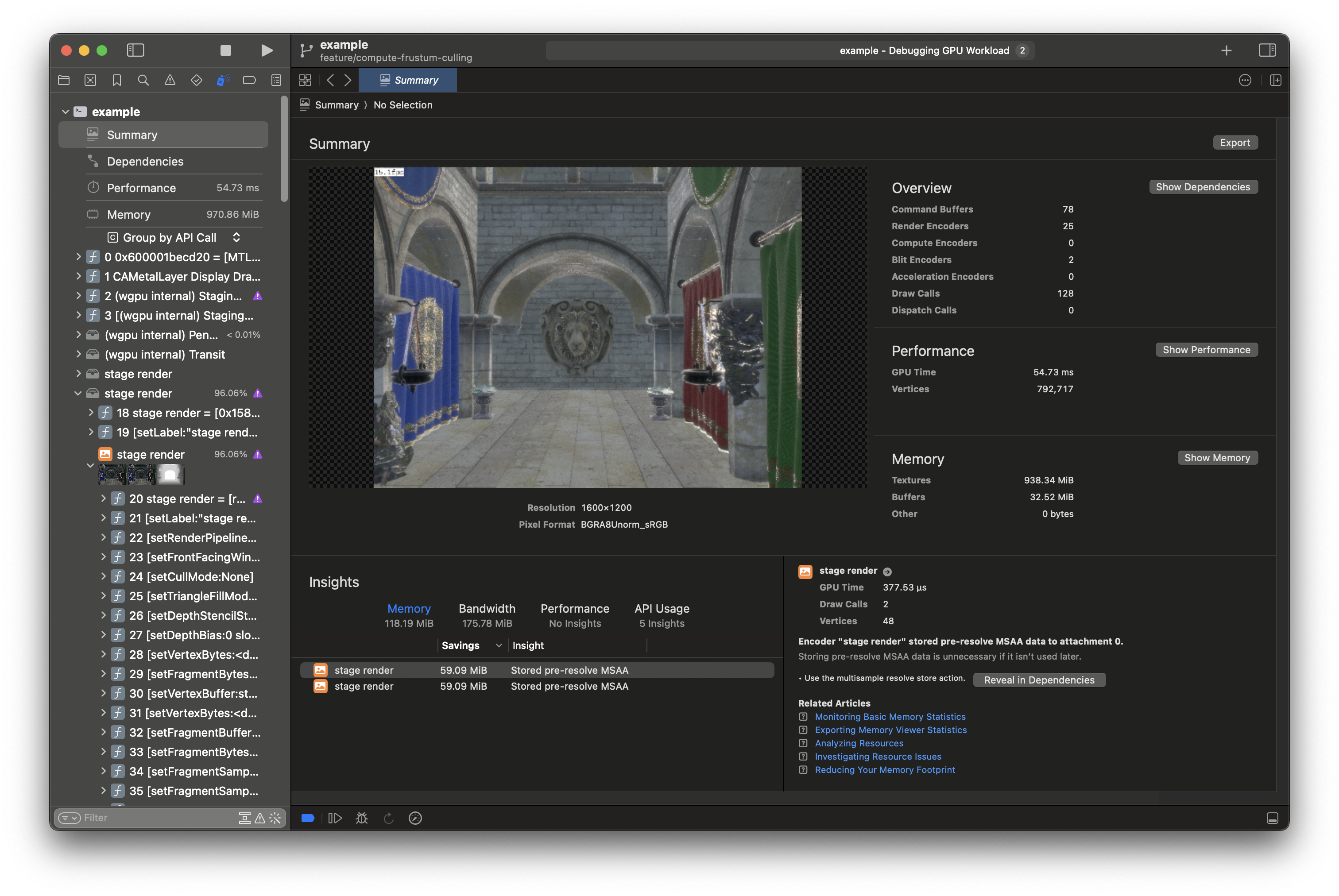

I guess I'll integrate shadow maps into the example app, capture a GPU frame in Xcode and cross my fingers that I notice something.

...

Well, that is some kind of fruitful. I can see that the shadow map depth texture is empty.

That is - it's all 1.0, everywhere.

The only place it gets cleared is in the ShadowMap::update function.

...

I was able to capture a GPU frame programmatically using this function:

pub fn capture_gpu_frame<T>(ctx: &Context, f: impl FnOnce() -> T) -> T { let m = metal::CaptureManager::shared(); let desc = metal::CaptureDescriptor::new(); desc.set_destination(metal::MTLCaptureDestination::GpuTraceDocument); desc.set_output_url(workspace_dir().join("test_output").join("capture.gputrace")); unsafe { ctx.get_device() .as_hal::<wgpu_core::api::Metal, _, ()>(|maybe_metal_device| { if let Some(metal_device) = maybe_metal_device { desc.set_capture_device( metal_device.raw_device().try_lock().unwrap().as_ref(), ); } else { panic!("not a capturable device") } }) }; m.start_capture(&desc).unwrap(); let t = f(); m.stop_capture(); t }

And with the gputrace I can clearly see that the shadow map is being created correctly and it has the value I expect at the point I expect.

This programmatic capture is a game changer!

Since the shadow map depth texture is fine, it could be one of two things:

sampling the texture is somehow borked

the calculation of finding the uv coordinates used to sample is borked

To disprove 2 I'll rewrite the fragment shader to write these coords as the color.

It should show roughly the fragment's distance from the light source, where darker

means closer.

Hrm. It's all black, with no shading at all. This could mean that the fragment position in light clip-space is being miscalculated.

...

I think I've tracked it down to this line:

let light_space_transform = light_slab.read_unchecked(lighting_desc.shadow_map_light_transform);

It seems that line is causing the shader to crash.

This can happen, as the name of that function suggests, Slab::read_unchecked doesn't check the

given Id to see if it's Id::NONE, which is u32::MAX. So trying to read with that index would

predictably crash.

So if lighting_desc.shadow_map_light_transform is Id::NONE, that means it hasn't been updated

since updating the shadow map.

...

With the new capture functionality I should be able to read the value of the Id.

...aaaaand yup! I can see there are 3 light-slab buffers and two of them are only 4 bytes!

Those 4 bytes correspond to the Id::NONE value written as the pointer to the shadow mapping

light transform.

Now I just need to figure out why there are so many light slabs here.

...OOF! 🤦

Looks like Stage creates its own Lighting object, which contains the lighting-slab, then in

render_with (a temporary function just for fleshing out this feature) binds that slab, not

the one used by the external ShadowMap.

Finally, rendered shadows🔗

Let's stop here for now.

Sat Jan 25, 2025🔗

The shadow mapping saga continues🔗

I've hooked up shadow mapping end to end. Everything is connected. The shadow map looks good, so why isn't anything happening in my PBR shader?

Well it's because my test has lighting turned off. lol 🤦. This is why I keep the facepalm emoji close at hand.

...

Ok - even after that it looks like my analytical lighting is borked.

The scene_cube_directional test, which tests directional lighting, is failing.

I'll have to fix that test before continuing.

...

Ok - it was because my shader was doing something stupid. I had changed the shader

for debugging purposes to calculate the color as shadow * everything_else, and

since shadow is 0.0 when there is no shadow everything was coming up dark.

After updating the shader (returning it to normal, without shadows) it works as expected.

...

So now let's look at the scene with analytical lighting to ensure the directional light is working.

It's working.

...

Fixing the lighting calculation shows that the shadow has no effect, meaning it's returning 0.0 everywhere.

No shadow.

So I'll run the calculation on the CPU over each fragment position and look at the generated images.

...

That's tough, because I'd have to save the world positions of each fragment to a GBuffer and read it.

Instead, I've placed another object in the scene which should be in the shadow of the block.

I know its position and so I can calculate the shadow at that position and it should come out to 1.0.

Ok, I can see that the conversion of the fragment position in light space to the shadow mapping

sampling coordinates is incorrect.

This code, coming from learnopengl.com is assuming the OpenGL projection matrix which assumes

a depth range of -1.0 to 1.0 and it also assumes that the texture space origin is the lower

left corner.

After correcting for that, the sampling coords in pixels become 406.20013, 363.07574, which seems

right on the money if you look at the depth image.

But now it looks like the closest depth at that point (the sample) is coming up 1.0, 1.0, 1.0, 1.0,

which would be at the very back of clip space, which is wrong, it should be coming up somewhere in the

middle - so 0.5.

So I think my CPU sampling is off.

Yup! It is. I've now improved CPU sampling a bit.

And now the test passes that assertion - turns out it was indeed the transformation of the frag position in light space into the sampling coordinate.

...

But I'm still not seeing a shadow.

That could mean that there's something wrong further up in the shader. It could also mean that the bindings are not set up correctly.

...

I hadn't updated the PBR fragment shader to read the shadow mapping light transform from the descriptor. We'll see if that helps (it should).

...

That didn't seem to do it. There must be more items on the bug stack.

...

So I'm now trying to find values to run the fragment shader with, and it's really making me feel like the fragment shader should be writing to a g-buffer. That would make this debugging a lot easier. Of course, it's not easier to write that g-buffer code and do the debugging. But that's another thing I should put on my list...

...

After getting the vertex info from the point on the top of the green sphere and running the

fragment shader with that, it looks like the value being returned from shadow_calculation

is 1.0, which is what I expect.

That point is indeed in shadow.

So then that leaves CPU side things, I think.

I'll take a look at the wgpu linkage.

Mon Jan 20, 2025🔗

I'm starting to think I should separate the buffers by concern.

With wgpu's "downlevel default" resource limits we're limited to 4 bind groups

per shader stage.

I think I could split Renderling's main bindgroups into (roughly):

Geometry slab (vertex, index, bounding volumes etc)

Material slab and atlas (textures descriptors from the atlas, material descriptors)

Lighting slab (lights, shadow maps)

...

And now that I think of it, I could use an Atlas to store shadow mapping texture data.

This would allow sampling from actual textures, as opposed to the current situation where

if I want more than one shadow map I'd have to copy the depth texture to a storage buffer.

When using Atlas, the textures would be stored in a

texture array, which means we could have more than one, and at different sizes - and which

would negate the separate compute step!

One complication I see is that point light shadow maps use cube maps, and sampling from our atlas doesn't yet support cube map sampling, so I'll have to write that.

But actually - if I wrote support for cube map sampling from the Atlas, I could store the

skybox and IBL data in the same way! This would really save on texture bindings and clean

up the shaders.

Sat Jan 18, 2025🔗

Back to shadow mapping.

The aformentioned craballoc part-out session

sparked some bug fixes to scheduling and improvements to the

CPU/GPU synchronization API.

This in turn, I think, fixed the shadow mapping problem I was seeing before,

where the shadow map's depth texture only showed a sliver of data.

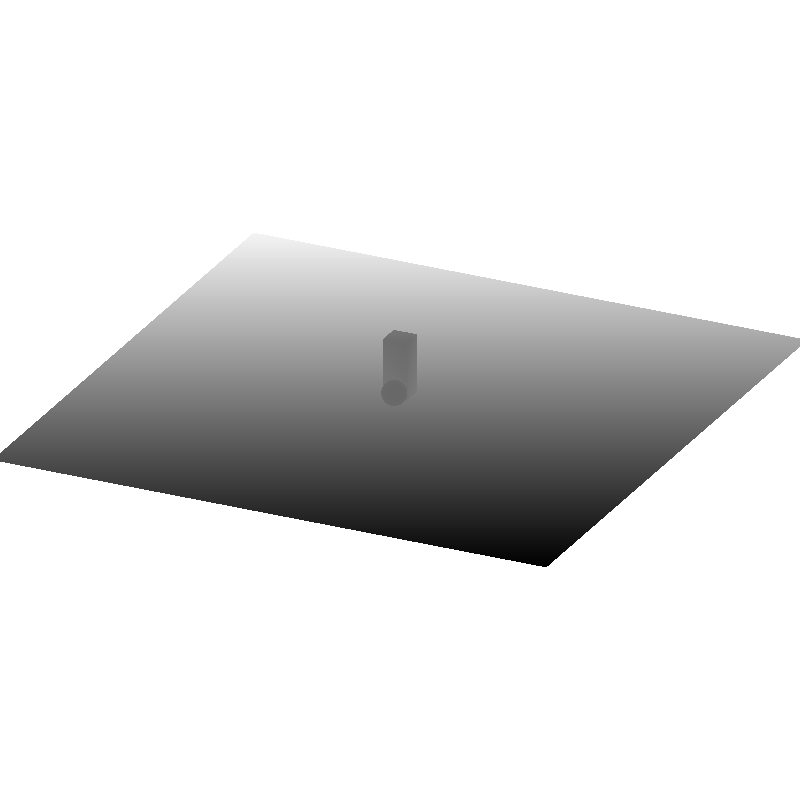

It seems we have perfect data on the GPU and can move on:

So now that we have the shadow mapping depth values, we should be able to use it in the PBR shader.

How to bind the shadow map?🔗

The most common method to bind the shadow map to the PBR shader is to assume there's only one, and bind the depth texture as a sampling source.

But this limits us to one shadow source. That's not what renderling is about.

It would be nice to bind an array of shadow map depth textures, and then index into it, but WebGPU doesn't support arrays of textures.

So I have to think about copying the depth buffer to a storage buffer (possibly just for lights) which I could then "sample" from, since WebGPU doesn't provide arrays of textures to bind to.

So I have to choose:

set the number of shadow maps statically at compile time with a descriptor set and binding.

run shading once per shadow map (I don't know how that would work)

use a texture array of shadow map depths and each shadow map is the same size

use a compute pass to copy the depth to a storage buffer and index into it to sample

With a storage buffer for lights we could store the shadow map's depth buffer at any size, and reference it from the slab.

But we would lose sampling conveniences.

...

For now I'm just going to bind one shadow map statically. I can worry about this later after I've proven the rest of the method.

Sun 12 Jan, 2025🔗

I wrote a 2024 wrapped article. Check it out!

Parted out SlabAllocator🔗

I also parted out the SlabAllocator from renderling into its own crate, now called

craballoc. I'm now using it for other GPU projects.

LA fires🔗

I also worked on a short article on the LA fires, as I'm from Pasadena and have friends and family living in Altadena. I'm not sure if I'll publish it, or if I do I might not publish it here. It might make more sense to host it at my personal site zyghost.com.

Either way, my heart goes out to everyone there. We left California because of these fire events and although I think it was the "right" decision for our family, it's not so easy for others to take that leap.

Bug fixes🔗

I'm currently babysitting a bug fix through CI:

nlnet contract updates🔗

I re-read my contract with NLNet and it looks like I actually have until May 10th, 2025 to complete my work!

This means I can likely complete shadow mapping and at least start tackling light tiling and be paid for it!

Fri 27 Dec, 2024🔗

Shadow mapping debugging session 3🔗

Xcode debugging doesn't seem to reveal anything interesting.

Now I'll bind a render attachment to the shadow map update and see what happens.

Thu 26 Dec, 2024🔗

I hope you all are having a great holiday break!

Shadow mapping debugging session 2🔗

Welcome to another installment of debugging the shadow mapping shader.

I'm going to collect and compare the world position as calculated from the vertex shader and from the shadow mapping shader.

...⏱️

Ok, those are all the same! Well, that's good, I guess.

This means the bug is after calculating world position. I'll just go along and assert some things.

The light's id is correct.

All the renderlet's camera_id fields are set to the correct camera.

And the light we're using is the one we expect to use.

And the light's parent transform is what we expect.

The cameras used to calculate shadow_mapping_projection_and_view, which is

the light transform - are what we expect.

The light transforms themselves are also what we expect.

In the test case we're taking the light_projection and light_view as

calculated by DirectionalLight::shadow_mapping_projection_and_view.

We've already asserted that the results are what we expect.

The calculated world positions for each vertex are equal.

All the calculated values, including the resulting view+projection matrices are the same...

...

I'll wire this into an app and then use Xcode debugging tools... ...oof.

Mon 23 Dec, 2024🔗

Shadow mapping debugging session 1🔗

I'm working on shadow mapping, hoping to finish it up before the end of the year so I can claim another milestone on my grant project. If I can manage it, it would mean I hit 6/7 of the milestones - only missing light tiling, which I will carry over into the new year (with funding or not).

Currently, I've got a separate depth texture used as the shadow map, I've calculated the light transform that shows the scene from the light's point of view, and I'm rendering with my usual rendering pathway but using the light transform as the camera to verify that transformation.

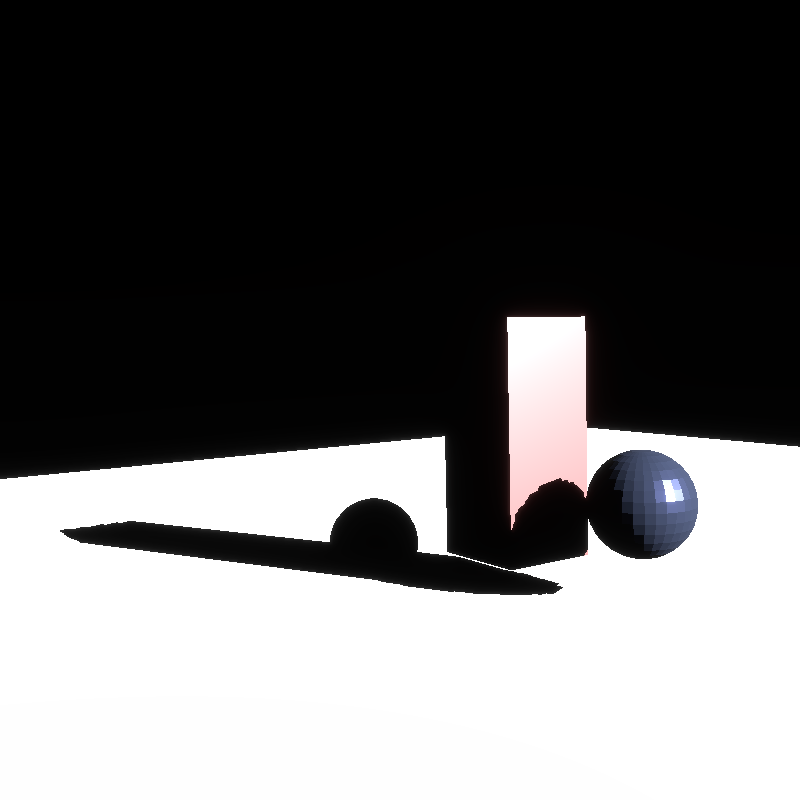

This is what I see:

The problem is that when I apply this transformation to shadow mapping, the depth appears completely black.

This is a classic example of how things go wrong in a graphics project, lol.

I have a feeling it's rendering, but it's all black because depth in NDC is not linear - the resolution decreases the further from the camera you are.

...⏱️

Hrm. Even after linearization and normalization, nothing.

The depth texture is all zero. Maybe it's being cleared?

...⏱️

Ok, so it looks like some of the historical tests that assert depth are broken. So somewhere along the line of these changes I broke the depth buffer.

Time for a git bisect.

git bisect says it was commit

19c6db194b7ce8afa129b9d88fbe3c1542e5b3f0,

which is a giant checkpoint commit. I often do checkpoints in WIPs because I'm

frantically coding in my free time and get interrupted and lose context on what

I was working on.

This devlog is an attempt to allay that problem, really.

It could be the change here https://github.com/schell/renderling/pull/145/commits/19c6db194b7ce8afa129b9d88fbe3c1542e5b3f0#diff-07be3c3837d7379336a0625534f3c01c4a8c7870d14037b351555aeed2e97670L683-R689.

...⏱️

Well, that was one problem, but another problem is that the depth of the light transform's frustum is coming out very small.

...⏱️

After the fixing the mistake above, if I don't linearize the depth, the depth texture is fine. So for the sake of finishing this milestone I'm going to log a warning and make a TODO to fix depth linearization and move on.

Now - I've been doing this in a regular Stage rendering in order to verify

the light transform.

That's done, so now I can "update" the shadow map and I should see the same

depth texture.

But my shadow map's depth texture is blank.

There's probably something wrong with the pipeline setup, or with the shadow

map's vertex shader.

I'll start by debugging the vertex shader.

Here is the function's signature:

/// Shadow mapping vertex shader. #[spirv(vertex)] #[allow(clippy::too_many_arguments)] pub fn shadow_mapping_vertex( // Points at a `Renderlet` #[spirv(instance_index)] renderlet_id: Id<Renderlet>, // Which vertex within the renderlet are we rendering #[spirv(vertex_index)] vertex_index: u32, #[spirv(storage_buffer, descriptor_set = 0, binding = 0)] slab: &[u32], #[spirv(storage_buffer, descriptor_set = 0, binding = 1)] light_id: &Id<Light>, #[spirv(position)] out_clip_pos: &mut Vec4, ) {...}

So first I'll get the slab and I'll run the vertex shader on the CPU to see if we get any NaN values or something of that ilk:

let slab = futures_lite::future::block_on(stage.read(&ctx, None, ..)).unwrap(); let mut clip_positions = vec![]; for hybrid in doc.renderlets.values().flatten() { let renderlet = hybrid.get(); for index in 0..renderlet.get_vertex_count() { let mut out_clip_pos = Vec4::ZERO; crate::light::shadow_mapping_vertex( hybrid.id(), index, &slab, &gltf_light.light.id(), &mut out_clip_pos, ); clip_positions.push(out_clip_pos); } } log::info!("clip_positions: {clip_positions:#?}");

Hrm. No NaNs, I see a lot of values like this:

Vec4(

0.0072851786,

-0.0029473465,

6.3410006,

1.0,

),

Vec4(

-0.17068025,

-0.21936357,

9.806452,

1.0,

),

Vec4(

0.17068014,

-0.48623937,

5.5299783,

1.0,

),

Vec4(

0.17068014,

0.21936369,

3.5116858,

1.0,

),

Vec4(

-0.17068025,

-0.21936357,

9.806452,

1.0,

),

Vec4(

0.17068014,

0.21936369,

3.5116858,

1.0,

),

Vec4(

-0.17068025,

0.4862395,

7.78816,

1.0,

),Curious though, as these values don't seem to be in clip space? I wonder how many vertices are in clip space?

I'll do:

let ndc = out_clip_pos.xyz() / out_clip_pos.w; if ndc.distance(Vec3::ZERO) <= 1.0 { clip_positions.push(out_clip_pos); }

Huh! Zero positions are in clip space. So that's a problem.

...

I'm thinking this is probably because in my verification of the light transform I'm only transforming the input vertices by the light transformation, whereas in the shadow mapping vertex shader I'm transforming the input vertices into world space and then transforming that again by the light transformation. So I'm simply not done with the build-out of this vertex shader. This is the cost of losing context between development sessions.

It turns out I simply had not used the same function for determining the light transform in my shader.

...

Ok, it also turns out that for the step where I verify the light transformation, I was changing all the renderlet's camera ids to a new one based on the light's POV - but I forgot to change them back! So many bugs on top of each other. Ok, now that I have that sorted I can see something in my depth, but it's hardly anything:

From the numbers it looks like the transformations are correct, and I can't find an obvious discrepancy between the shadow mapping vertex shader and the normal vertex shader. I'll take a look at the pipeline set up and see if there's anything obvious there that I missed. I think as a last resort I could use a pass-through fragment shader to try to "see more".

The fact that I can see this sliver, though, makes me think there's still a problem with clip space. Somehow most of the scene is getting clipped in the shadow mapping vertex.

I'll have to spend another day debugging this...

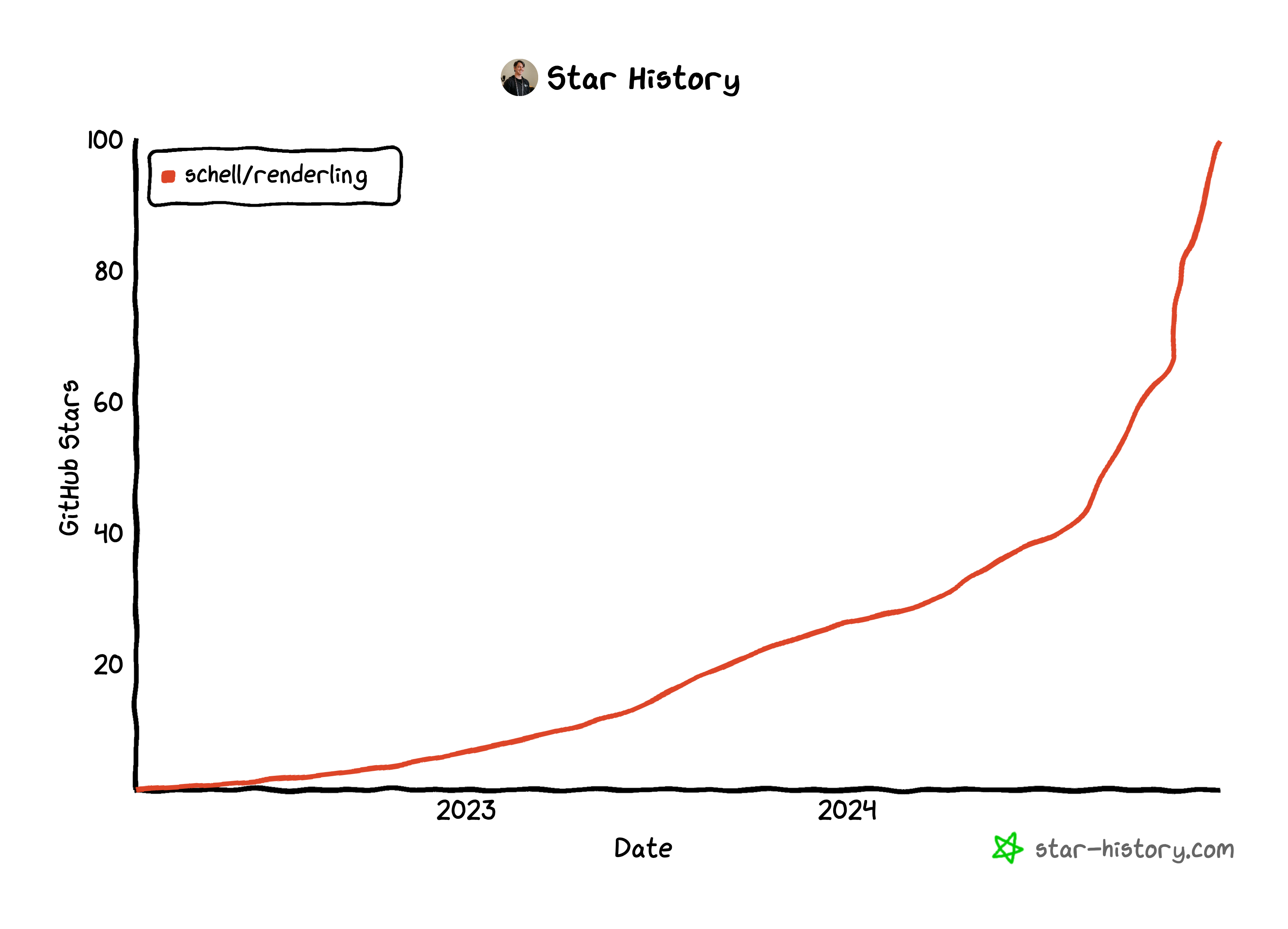

100 github stars!🔗

The project hit 100 github stars.

Here's the current graph:

Wed Dec 18, 2024🔗

nlnet update - SPIR-V Atomics in wgpu have landed! 🚀🔗

All the work adding support for atomics in the SPIR-V frontend of naga in wgpu has been finished!

https://github.com/gfx-rs/wgpu/issues/4489

Ongoing shadow mapping work🔗

I'm still working on shadow mapping.

Nothing too exciting.

No big debugging sessions.

I am thinking I'll do a recap and walkthrough of the feature once it's finished, which I'll link here. I think that will be easier to follow than my usual stream-of-consciousness live-blogging.

Sun Dec 8, 2024🔗

Folks trying out renderling on discord - renderling mentions around the web🔗

User Animats mentioned us on the r/rust_gamedev subreddit:

Renderling->WGPU

Rendering is a new renderer.

Pro:

Con:

Summary:

Technically interesting but not ready for use. Worth following progress.

They get a lot correct, but a few misunderstandings.

No bindless mode.

Well, renderling is mostly bindless apart from not being able to upload endless textures. We're limited to one giant texture array, then we only bind that one texture and sample from sub-textures stored on the slab. So at least for normal PBR rendering we're "bindless". Of course this doesn't include situations like rendering shadow maps or updating IBL probes or generating mip-maps, etc. Those all take their own textures that are separate from the atlas.

No punctual lights yet.

We definitely do support punctual lights, with the caveat that you have to hook them up yourself. In fact I'm currently implenting shadow mapping for directional and spot lights.

I should make this more apparent.

I should also make using analytical lighting easier.

Does not support general asset creation/destruction.

I'm not sure what this means.

If you "create" an asset by using Stage to create Hybrid values on the CPU+GPU, you can drop those

values at any time to "destroy" them. An asset will be made up of a bunch of these values and so dropping

them will "destroy" the asset automatically.

Of course - I should make this much more explicit in the documentation.

Technically interesting but not ready for use. Worth following progress.

Yup! And thank you! 🙇

Discord

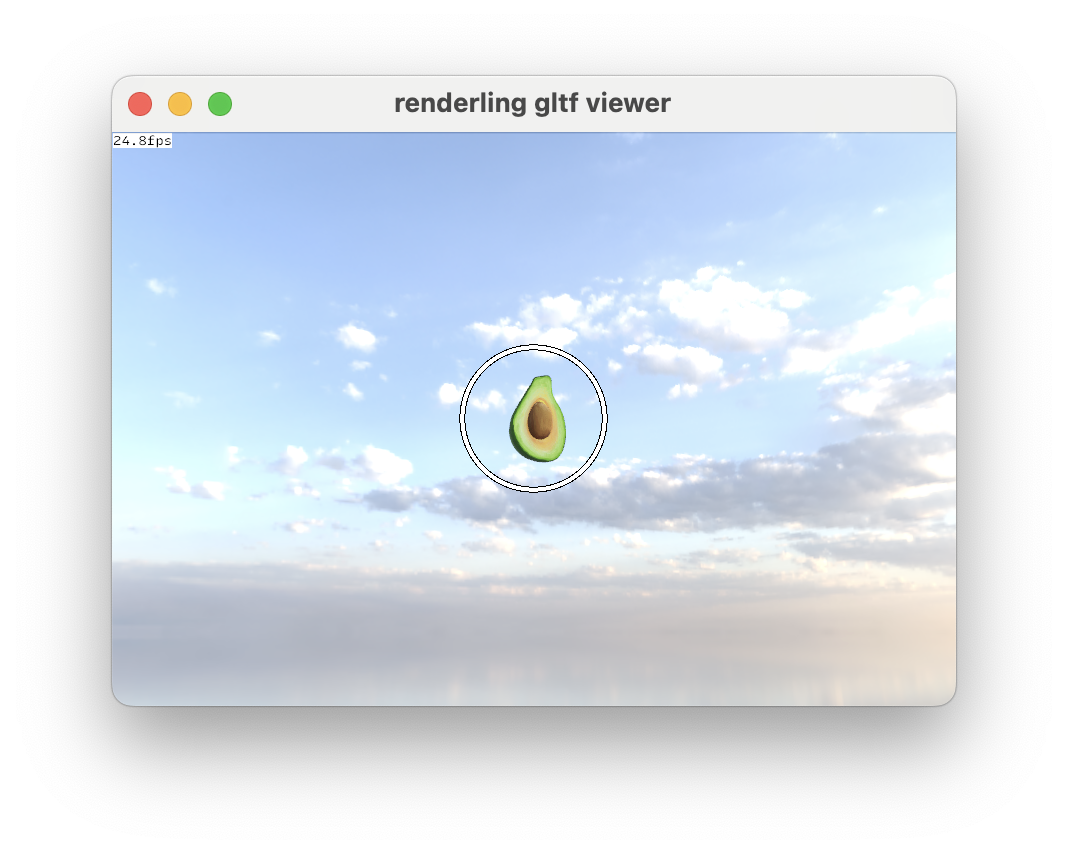

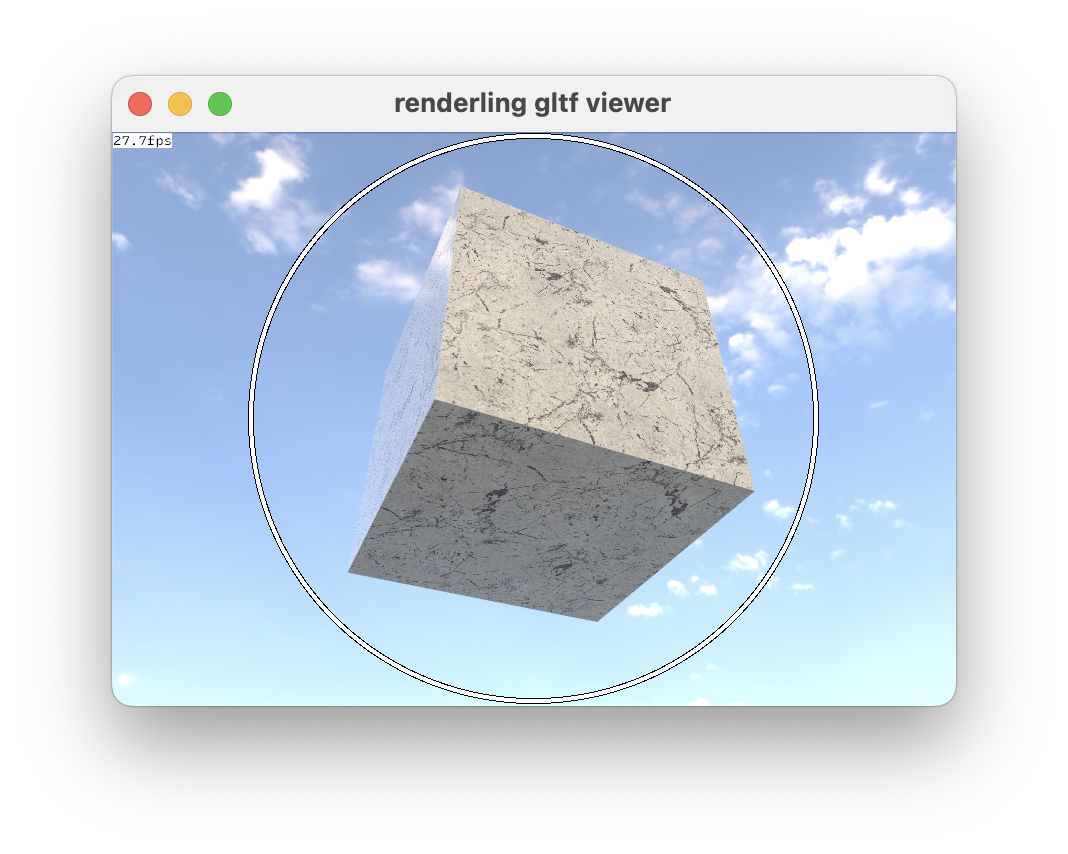

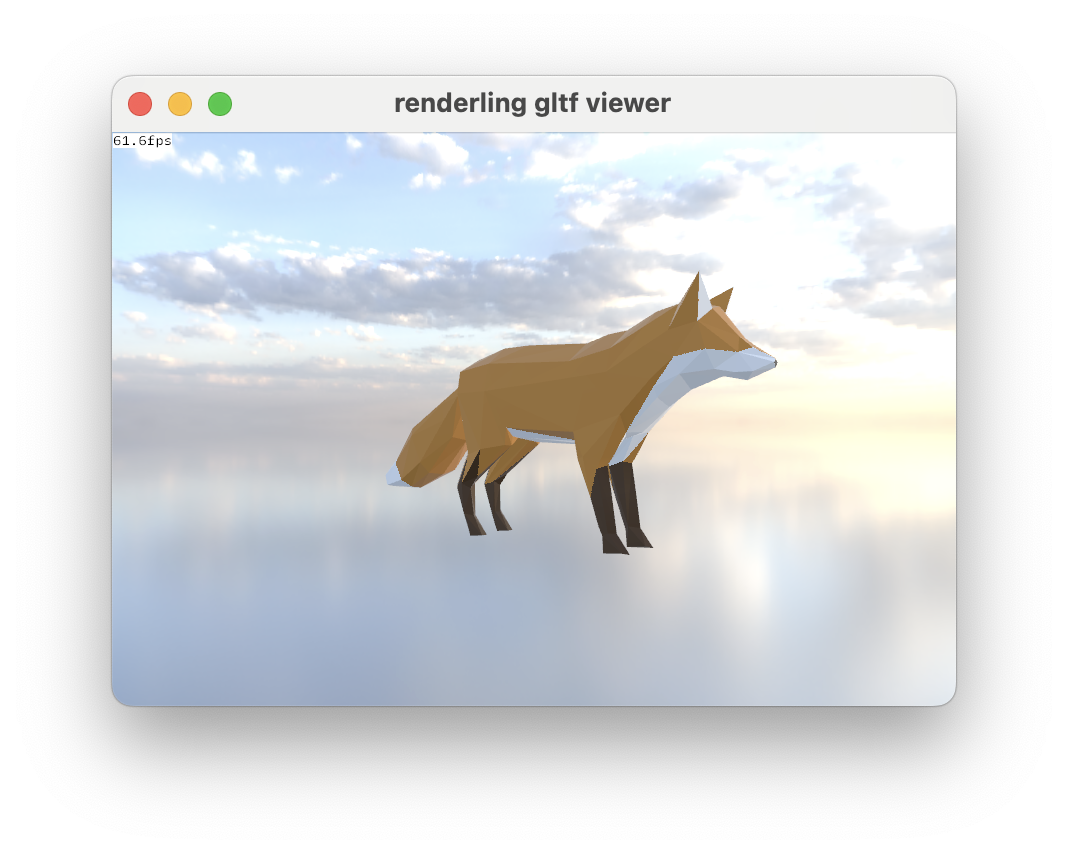

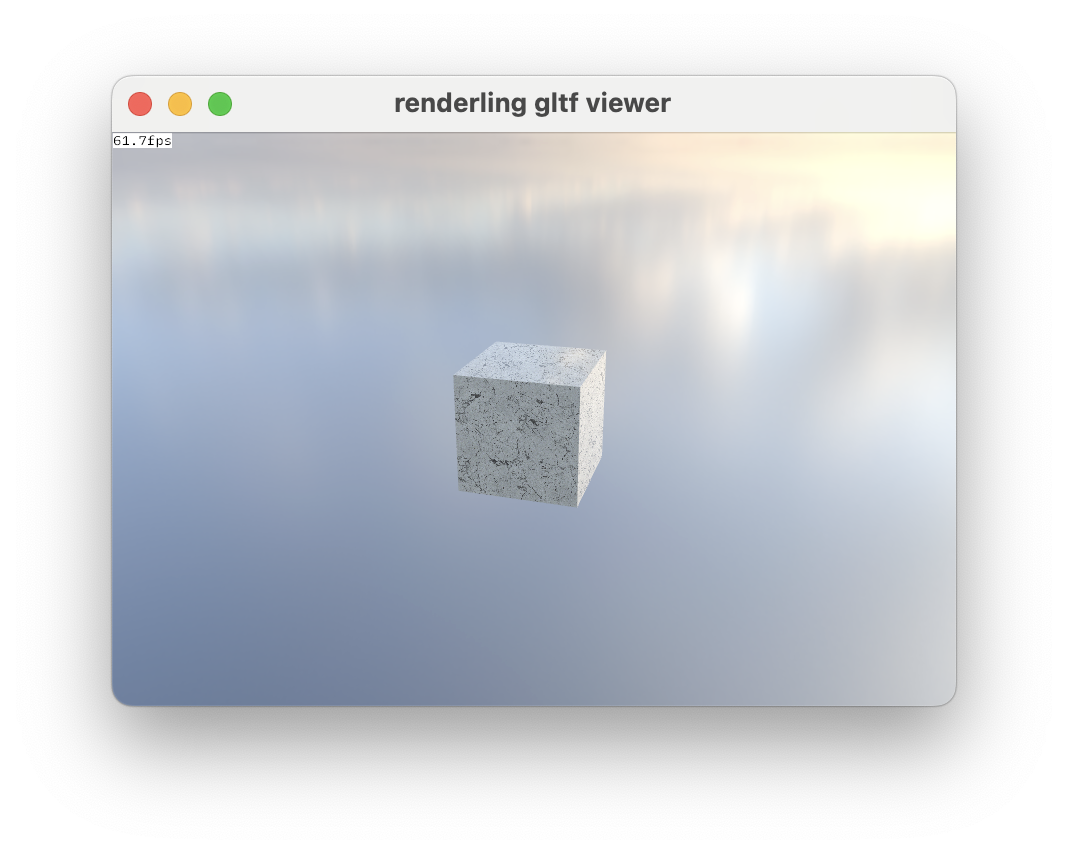

User ValorZard mentioned us on Discord, where they are running the example glTF viewer.

That has sparked a deep dive into CI to better support Windows.

Shadow mapping progress 2🔗

I've made more progress on shadow mapping, but still nothing exciting to post.

NLnet updates - wgpu atomics and re-application for 2025🔗

I've reapplied to NLnet for 2025. 🤞.

Jim Blandy has been reviewing my PR to support OpAtomicCompareExchange and

I'm working on his suggestions.

Sat Dec 7, 2024🔗

Shadow mapping progress 1🔗

I fixed a bug where cameras loaded from glTF files were taking the wrong node transforms. It was a one-liner caused by using the "camera index" to get the node transform instead of the "node index".

I have the initial setup of shadow mapping running. Nothing significant yet.

Wed Nov 27, 2024🔗

Shadow mapping kickoff ⚽🔗

I've read the shadow mapping tutorials at learnopengl a few times now. I really love that site, it's such a great resource.

The work for shadow mapping will kick-off this week as I get time in the morning.

Sun Nov 24, 2024🔗

Back to atomics work part 2 - NLnet updates🔗

I put up a PR that provides support for OpAtomicCompareExchange.

See yesterday's notes for more info.

It's a workable solution, but it predeclares a couple types for every module that comes through the SPIR-V frontend. I think that's fine, but it's not the most elegant solution. A more elegant solution would be to add more type-upgrade machinery, but I think that could get out of hand pretty quickly.

Next up - shadow mapping!🔗

Next on the docket is shadow mapping. I'll be using learnopengl's shadow mapping tutorial for the initial implementation.

Sat Nov 23, 2024🔗

The ecosystem is heating up - renderling mentions around the web🔗

Until now I've been the only one talking about Renderling, but this week that changed!

Below the user Animats talks about Renderling on HN and Reddit, and generally says nice things about the project.

Bevy, Rend3, and Renderling, the next level up, all use WGPU. It's so convenient.

User Animats, on Hacker news - comment on article "What's Next for WebGPU"

I've been looking at Renderling, a new renderer. See my notes...

User Animats, on Reddit r/rust_gamedev - post "The My First Renderer problem "

User Animats, on Reddit r/vulkan - post "Approaches to bindless for Rust"

Other developments - Nvidia🔗

I also have a scheduled meeting with someone at Nvidia. We'll be talking about how the Rust community uses the GPU. Roughly.

Back to atomics work - NLnet updates🔗

I'm back working on the last round of atomic support in the naga frontend.

See my WIP PR for atomic compare exchange at https://github.com/gfx-rs/wgpu/pull/6590.

Initially I thought the problem was going to take some new type-upgrade machinery, similar to the existing machinery. But now I think the problem might be a bit more like this texture sampling issue https://github.com/gfx-rs/wgpu/issues/4551, in that WGSL and SPIR-V differ in their parameters and return results.

I fixed that ticket by adding a step inline to provide the downstream code with what it expected in SPIR-V.

I'm hoping I can do the same thing with OpAtomicCompareExchange.

notes/deep dive on supporting OpAtomicCompareExchange

note that the spec is for

atomicCompareExchangeWeak, but SPIR-V also hasOpAtomicCompareExchangeWeakand that spec says:Has the same semantics as OpAtomicCompareExchange

So I think it's fine and I won't worry about it.

So - Rust-GPU and SPIR-V expect the return value of this op to be the same as the underlying atomic's value.

WGSL, however, expects this to be a struct like this:

struct __atomic_compare_exchange_result{ old_value : T, // old value stored in the atomic exchanged : bool // true if the exchange was done }

...and then the WGSL spec goes on to say:

Note: A value cannot be explicitly declared with the type __atomic_compare_exchange_result, but a value may infer the type.

So we don't have to define this result type, I don't think. Instead, we should be able to access it with the dot operator.

It would be nice to find an example of WGSL's atomicCompareExchangeWeak being used in the wild...

That helps!

It looks like these examples all access the struct values using the dot operator.

Ok - on to mapping between the two calls.

WGSL and SPIR-V all take the same parameters (in a slightly different order), so we're good there.

It's really just that WGSL returns whether or not the value was updated. This could be determined at the call site by comparing the result with the comparator (based on the specs - if they are equal, the atomic was updated), so I'm guessing WGSL does this as an optimization to avoid a costly comparison?

Anyway, I think the only thing we need to do is use the dot operator on the result, inline. So indeed this is just like the texture sampling issue I linked above. I might actually ship this today!

Wed Nov 20, 2024🔗

I have become a Rust-GPU maintainer🔗

I'm now a maintainer of the Rust-GPU compiler!

Ergonomics, stability, developer experience and integration with wgpu will be my focus on this project.

Temporarily setting aside occlusion culling🔗

I'm putting occlusion culling aside while I finish up my NLnet grant work. I've applied for a grant for next year and we'll see how that goes 🤞.

For the remainder of the year I'll be working on the last bit of atomics work and shadow mapping. If I have time I'll put some work into improving documentation and creating a sample app.

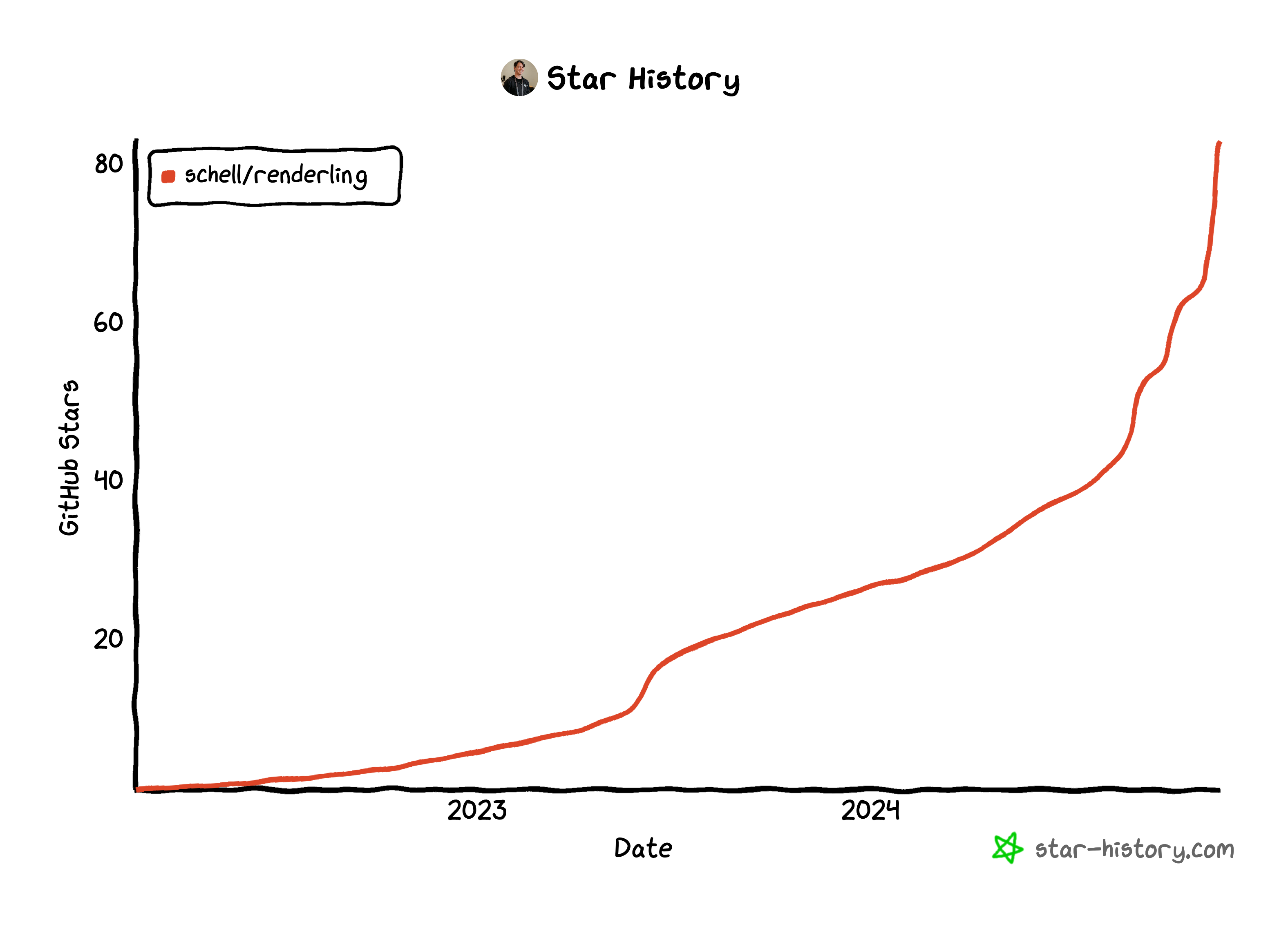

Renderling growth 2024🔗

Wow! Renderling has grown a lot this year!

Measuring by the number of stars on the github repo we've grown by 219%!

That's a hockey stick if I ever saw one 😊.

☕☕☕

Sat Nov 2, 2024 & Sun Nov 3, 2024🔗

More burnout avoidance while tackling occlusion culling🔗

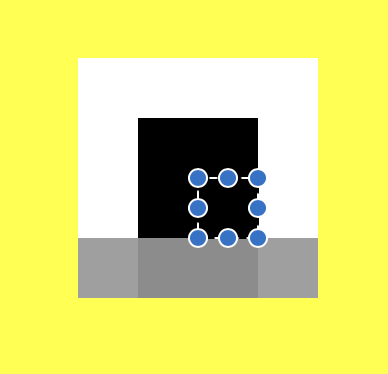

I've taken a small hiatus this past week, only poking around a little by writing a debugging overlay shader that displays bounding volumes, and then trying it out on different models to check their bounds.

As you can see, only two have visible bounds, which means the rest either have a boundary that surpasses the NDC cube, none at all, or something else is going on.

You can also see that the framerate is really low!

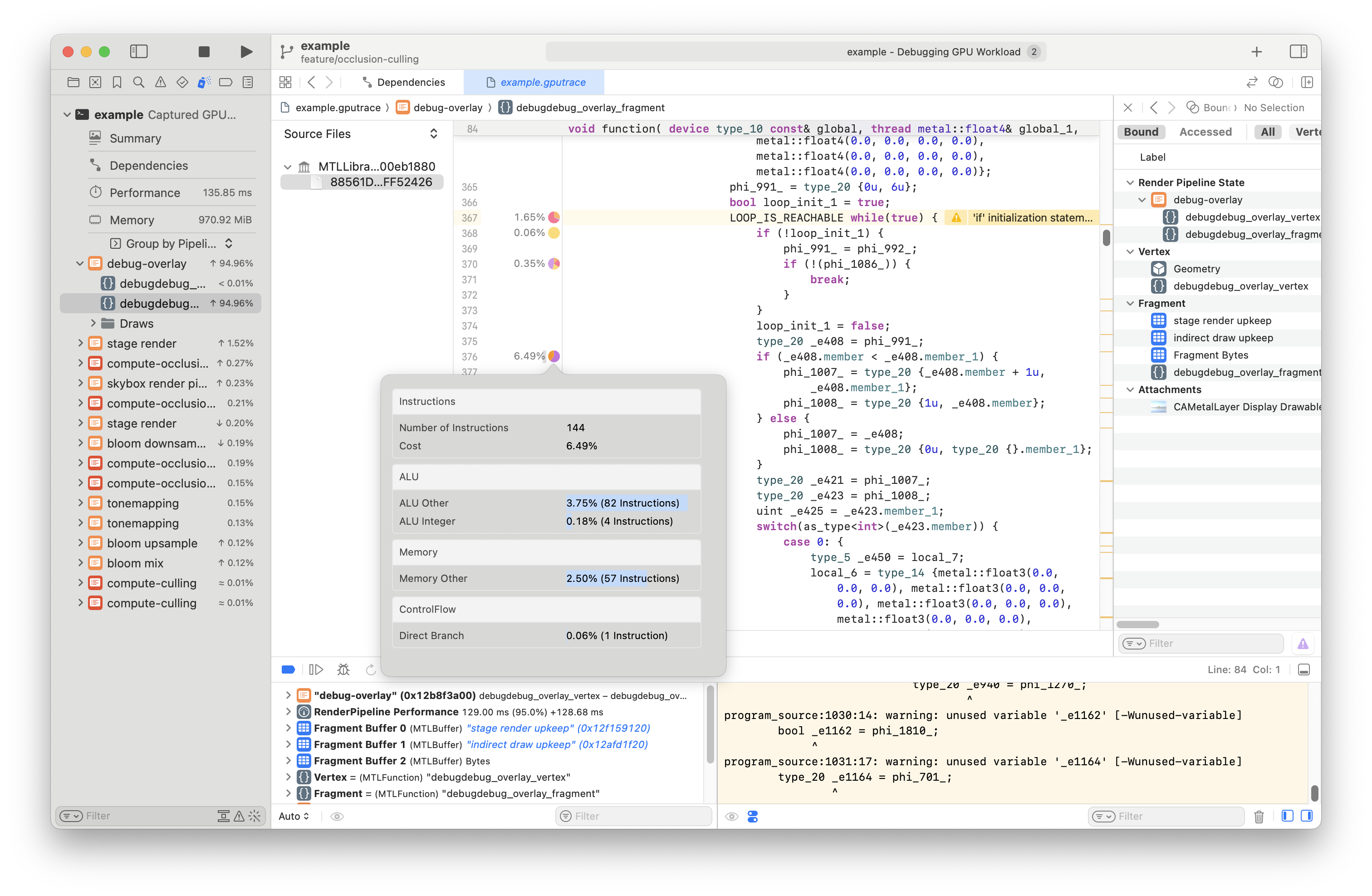

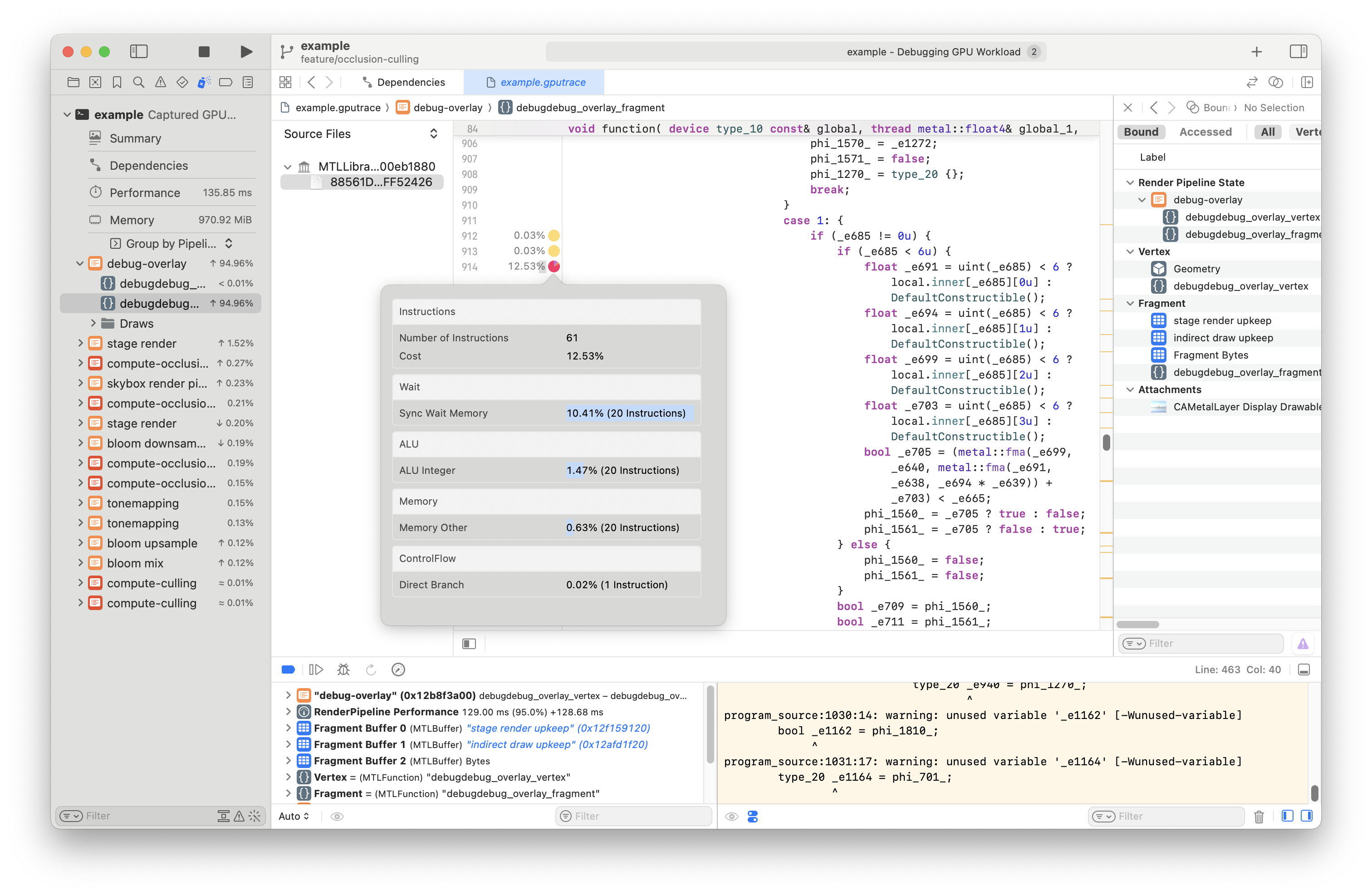

When profiling in Xcode using the metal frame-capture machinery, the profiling tells me that the debug overlay fragment shader is responsible for 98% of the frame time.

It's not totally surprising, though, given that it loops over every draw

call, reading that call's Renderlet and then projecting it and possibly coloring

the fragment based on its proximity to the bounding sphere.

But I guess it is surprising given that most of these models only have one or two draw calls. So that loop is not very long.

Now I'm reading about shader optimization from https://developer.apple.com/documentation/xcode/optimizing-gpu-performance/ to see what I can do to gain some insight. I can see from my frame capture that the "occupancy" is low in the debug overlay shader. I think that means that the difference invocations of the shader are hitting different branches.

Deeper into shader profiling with Xcode on occlusion culling🔗

Following https://developer.apple.com/documentation/xcode/optimizing-gpu-performance/#Optimize-shaders-with-per-line-shader-profiling-statistics I can see my shader with weights attached! Pretty cool.

I mean, it's not my Rust code, but it's easier to read that SPIR-V 👍.

I can see there's an inner function that's taking ~60%-90% of the time

slice. This is how naga constructs its shaders. It always makes an inner

function and then calls that from the main.

Inside that function are the cost centers I'm interested in. There's a number of them, the bigger ones from 5%-12%.

In the second case it's actually pretty hard to see what the conditional is about. I really wish I had a source map to get back to my Rust code...

Kinda thrashing but guided by profiling on occlusion culling🔗

I'm thrashing a bit, but removing two of the conditionals in the debug overlay

fragment shader got the cost down to 50%. I'll remove as many more conditionals

as I can, and I'll use

Slab::read_unchecked

on the slab items that I know will always be populated and I'll see what

happens...

...on a side note, my Rust shader compilation times are at around

46seconds... ...it's hurting a bit. I need

https://github.com/Rust-GPU/rust-gpu/pull/21 to land to speed up

these dev cycles...

Slab::read_unchecked to the rescue, occlusion culling🔗

That really sped it up! Changing a few calls to read_unchecked changed

the shader execution time -68%. The example app is now usable! ☕☕☕lol.

But can we go further? Even though the total frame time went from 120ms to

38ms, that's not fast enough for real-time. We need it to get down to

16ms.

...

These Xcode profiling tools are pretty cool. But I can't help but want GPU flamegraphs. I wonder how difficult it would be to transform Metal flamegraphs into SPIR-V into Rust...

...I'm not going to think very hard about that!

...⏱️

So, more profiling.

I can see that the last cost center in my debug overlay fragment shader is this line:

float _e685 = uint(_e680) < 8 ? local_1.inner[_e680][0u] : DefaultConstructible();

where

struct DefaultConstructible { template<typename T> operator T() && { return T {}; } };

Climbing up the tree of all those variables and their types (_e680, local_1) is pretty

difficult, and my intuition says that it's a big read from the slab... ...so I'll thrash

a bit and hazard a guess that it's from this line in my shader:

let PbrConfig { atlas_size: _, resolution: viewport_size, debug_channel: _, has_lighting: _, has_skinning: _, has_compute_culling: _, light_array: _, } = slab.read_unchecked(Id::new(0));

Which upon inspection, I see that we're doing this read_unchecked on a pretty big struct

and then ignoring all the fields except resolution, which I can replace with some pointer

math, and only read the one field.

The sad bit is that crabslab used to generate offset identifiers for each field of a struct

automatically in the SlabItem derive macro, but I removed that because of compilation times.

It didn't add a ton, but I was trying to reduce compilation times by any means necessary.

On occlusion culling and reading as little as possible🔗

Before replacing the big PbrConfig read with a smaller read of just the

resolution: viewport_size, the frame time was about 35ms...

...and after it looks to be ~31ms, so that's a possibly significant reduction,

about 11%. Let's see what happens if I go further. I can write a macro that would make

this a lot easier...

...fortunately I have a lot of this work in git, I just have to resurrect it.

...⏱️

So now I've replaced the PbrConfig read and also the Renderlet read with a few smaller

reads.

...aaaaaand Xcode crashed. I reflexively didn't look at the crash report, it happens often enough 😭.

The changes brought down the frame time to ~33ms. Really not much.

The cost centers look the same, pretty much.

I'm going to thrash a little more and change the type of loop from a for _ in 0.._ to loop,

though I'm starting to think that the bulk of the cost is in calculating the

projected bounding sphere of the renderlet. This will be my last optimization

attempt before moving on. I think another optimization down the road may be to

do 2-pass occlusion culling where the first pass calculates a visibility buffer

that includes this information.

So that didn't end up with any gains.

I did, however, stumble into an odd bug where different inline annotations seem to change the results of the debug overlay shader.

Sat Oct 26, 2024🔗

Occlusion culling and avoiding burnout🔗

I'm just coming back to this after an intense day job work week in which I didn't touch renderling at all.

I'm quite focused on my burnout level, and I really don't want to cross the threshold for too long, if at all.

I've burned out on side projects before, and so I like to think that I can feel the burnout coming. Essentially, when I don't feel like hacking on a project I have to back off and not force myself. I have to forget all the blogs I've read that insist "grit and persistence" are the surest means to success. Even if that's true, my body is telling me to take a break, so I have to heed that warning.

So today doesn't have much of an update.

I fixed an issue where the MSAA sample count wasn't being updated, which was causing the occlusion culling depth pyramid (aka the "HZB") to be invalidated each frame.

But even after all the debugging and bug fixing, there's still something fundamentally wrong with the algorithm. The Objects' visibility are still unstable like in the video I posted below.

I think maybe I need to expand the bounding spheres of the objects a bit, but I don't want to just thrash around.

Tomorrow or later today (or whenever I feel rejuvenated) I'll add a layer of debug rendering so I can see what's going on...

Fri Oct 18, 2024 & Sat Oct 19, 2024 & Sun Oct 20, 2024🔗

Pre-debugging occlusion culling results🔗

Sometimes it's hard to write about failure, so I'll let the video do the talking:

As you can see the frame rate is worse! It's at ~6FPS now.

I could just dive right in at this point, trying to figure out why it's so slow, but it's also obviously incorrect in that it's culling the wrong things.

So I'll take some time to pick apart my occlusion culling shader and verify its different steps first.

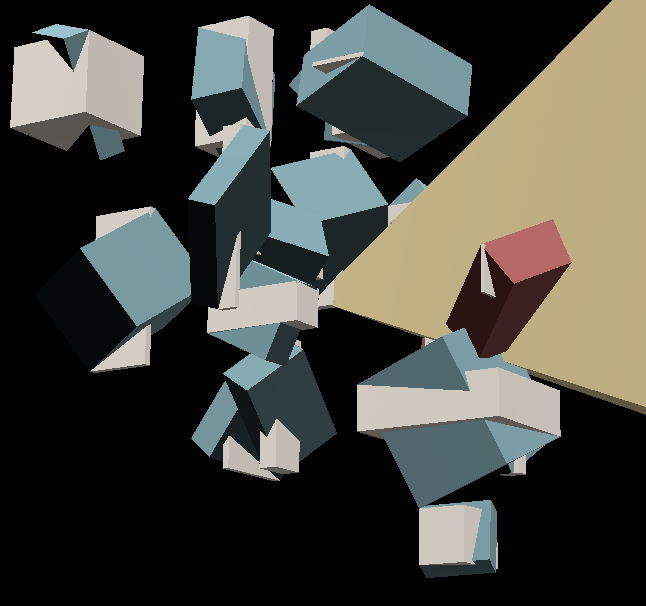

Debugging occlusion culling🔗

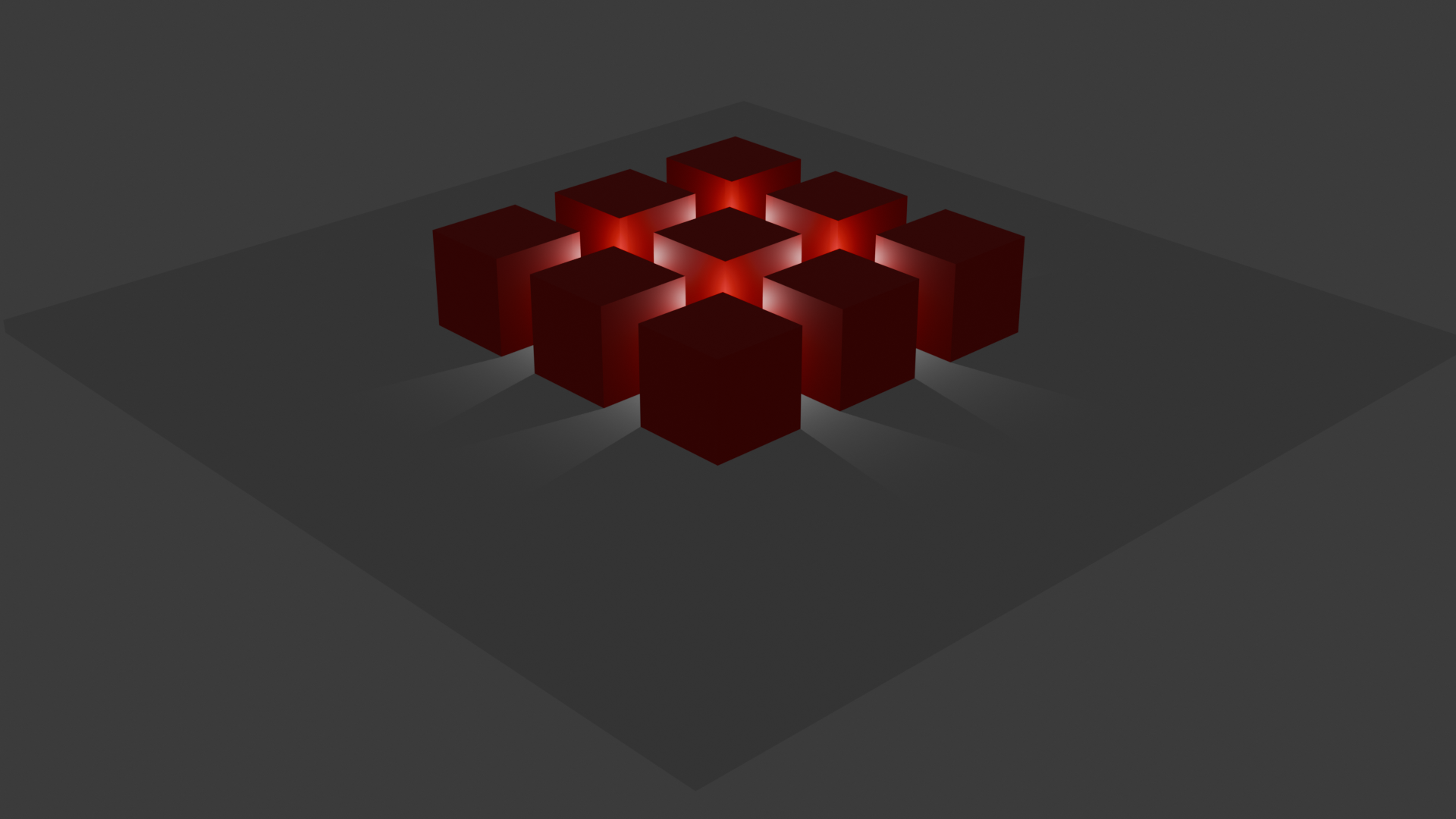

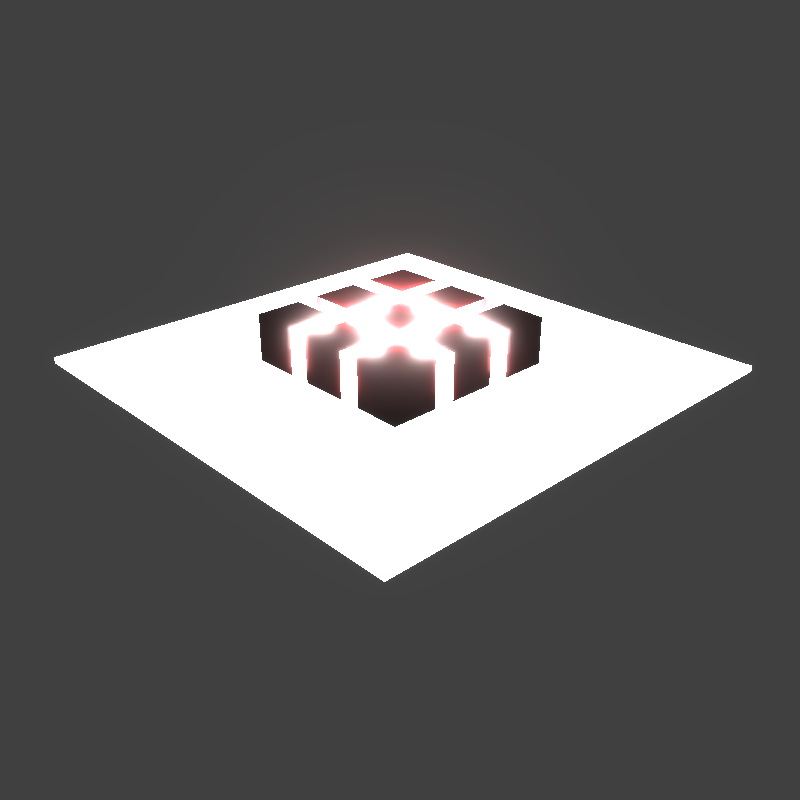

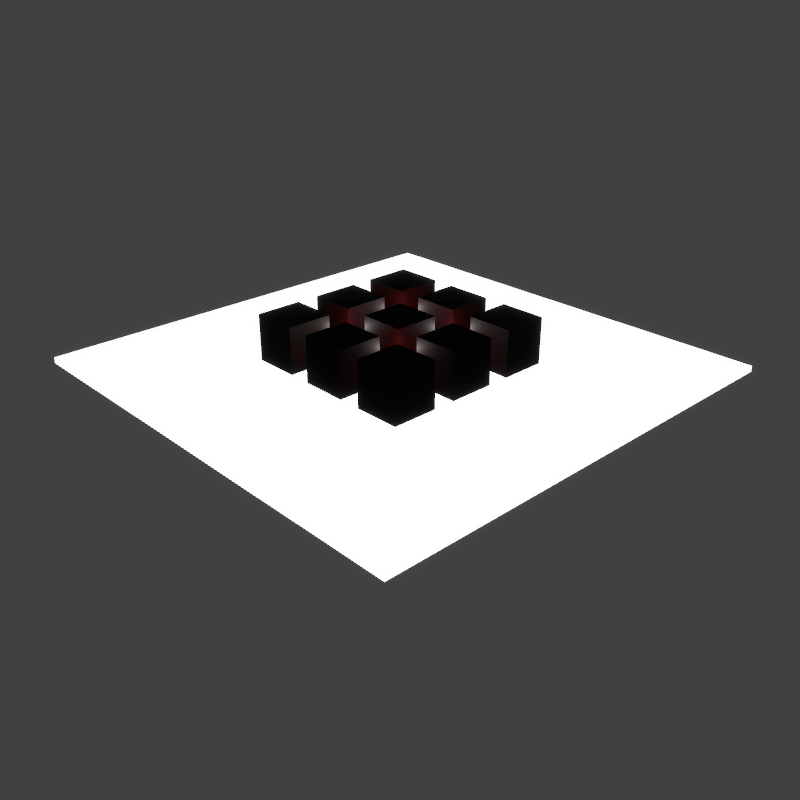

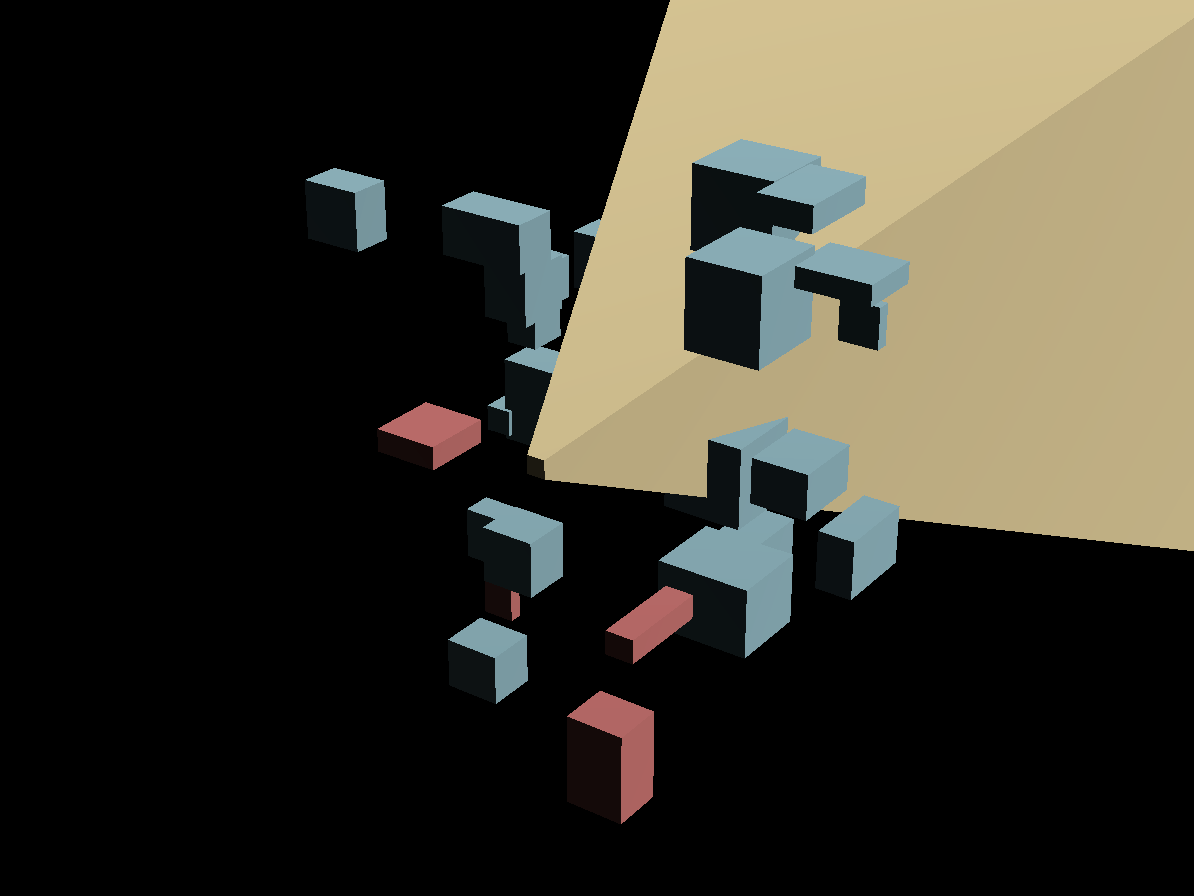

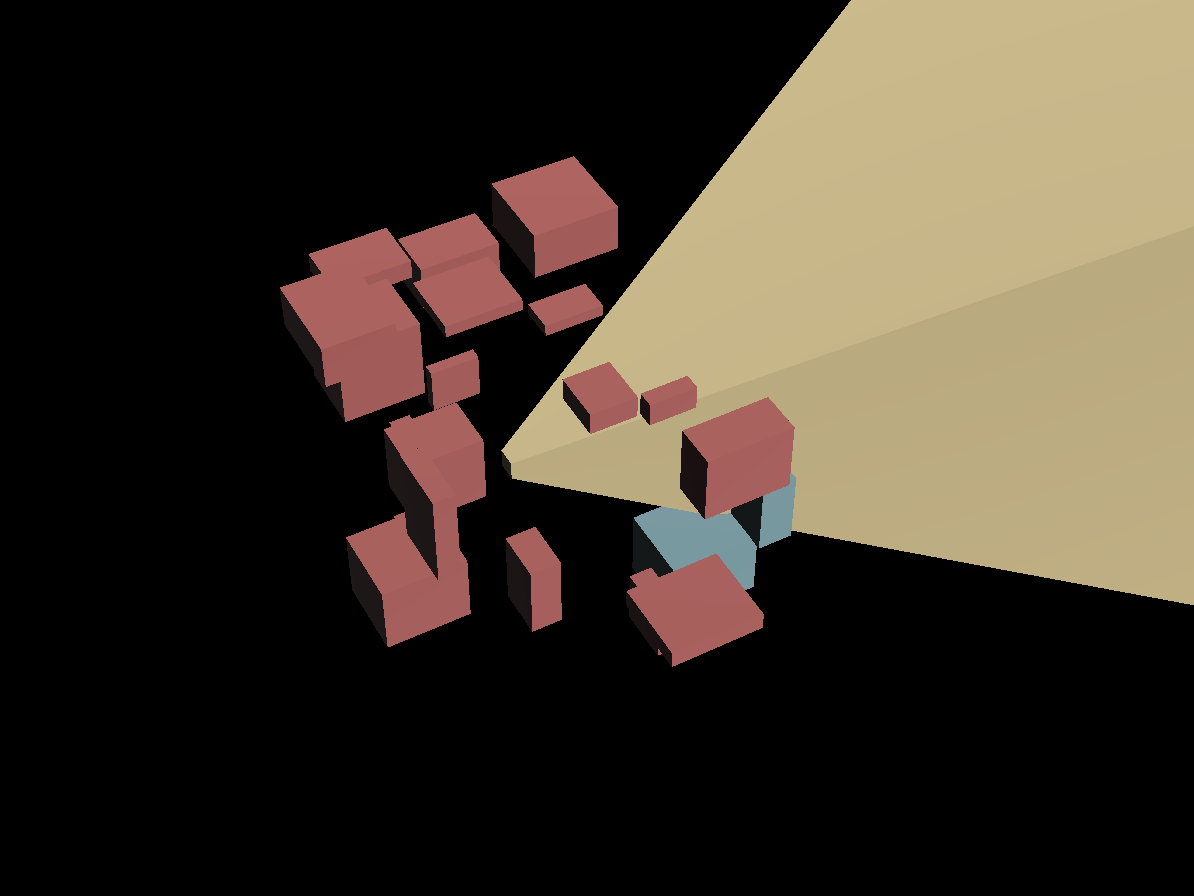

First we'll build the scene. We'll need some little cubes, we'll put them in the corner.

Then we'll add a floor that occludes the little cubes.

Then we'll add a green cube in the middle.

Then we'll add a purple cube that occludes the green one.

You should still be able to see the two little cubes at the top.

Then we'll extract the depth buffer and the hierarchical z-buffer

Everything looks in order. Now we can start running the shader on the CPU...

HZB cull shader debugging on the CPU, gathering buffers🔗

This is the type of the function that computes culling:

#[spirv(compute(threads(32)))] pub fn compute_culling( #[spirv(storage_buffer, descriptor_set = 0, binding = 0)] stage_slab: &[u32], #[spirv(storage_buffer, descriptor_set = 0, binding = 1)] depth_pyramid_slab: &[u32], #[spirv(storage_buffer, descriptor_set = 0, binding = 2)] args: &mut [DrawIndirectArgs], #[spirv(global_invocation_id)] global_id: UVec3, )

In order to call this from the CPU we'll need those three buffers, so I have to read those from the GPU in my test.

// The stage's slab, which contains the `Renderlet`s and their `BoundingSphere`s let stage_slab = futures_lite::future::block_on(stage.read(&ctx, Some("read stage"), ..)).unwrap(); let draw_calls = stage.draw_calls.read().unwrap(); let indirect_draws = draw_calls.drawing_strategy.as_indirect().unwrap(); // The HZB slab, which contains a `DepthPyramidDescriptor` at index 0, and all the // pyramid's mips let depth_pyramid_slab = futures_lite::future::block_on( indirect_draws .compute_culling .compute_depth_pyramid .depth_pyramid .slab .read(&ctx, Some("read hzb desc"), ..), ) .unwrap(); // The indirect draw buffer let mut args_slab = futures_lite::future::block_on(indirect_draws.slab.read(&ctx, Some("read args"), ..)) .unwrap(); let args: &mut [DrawIndirectArgs] = bytemuck::cast_slice_mut(&mut args_slab); // Number of `DrawIndirectArgs` in the `args` buffer. let num_draw_calls = draw_calls.draw_count();

This is why I love using rust-gpu. I just don't know how

I would do this kind of debugging in GLSL or WGSL, etc.

HZB cull shader debugging on the CPU, naming and dispatch🔗

Here's the source of the cull shader so you can follow along.

So, usually the compute cull shader gets called like this:

compute_pass.dispatch_workgroups(num_draw_calls / 32 + 1, 1, 1);

What I'm going to do is put in some logging and just call the compute_culling

function with these buffers and assert some values.

Starting with the gid (x of invocation id), renderlet id and bounding sphere.

Before that, we need to know the names of the renderlets:

id: Id(1054), name: yellow_cube_top_left

id: Id(2018), name: yellow_cube_top_right

id: Id(2982), name: yellow_cube_bottom_right

id: Id(3946), name: yellow_cube_bottom_left

id: Id(4130), name: floor

id: Id(5094), name: green_cube

id: Id(6058), name: purple_cube

Now we can match renderlet id to the name.

HZB cull shader debugging on the CPU, printing all the things🔗

Ok, I've added a ton of print statements to the shader function. Let's run it.

gid: 0

renderlet: Id(1054) // yellow_cube_top_left

renderlet is inside frustum

center_ndc: [-0.8047378, 0.8047378, 0.99766433]

screen space bounds center: [0.0976311, -0.9023689, 0.99766433]

screen space bounds radius: 1.0756001

screen max dimension: 128

renderlet size in pixels: 275.35364

selected mip level: 8

mip (x, y): (0, -0)

thread 'cull::cpu::test::occlusion_culling_debugging' panicked at /Users/schell/.cargo/registry/src/index.crates.io-6f17d22bba15001f/crabslab-0.6.1/src/lib.rs:38:6:

index out of bounds: the len is 21863 but the index is 4294967295

Whaaaa! Panic! Well that's definitely a problem, lol. Let's see...

The backtrace tells me it was this line of the shader:

let depth_in_hzb = depth_pyramid_slab.read_unchecked(depth_id);

And depth_id is determined by the mip_level and the x and y of the mip.

Well - right off the bat we know that mip_level is out of bounds. We only have 7 mips, and it wants index 8!

Working up the chain we can see that renderlet size in pixels: 275.35364 is

obviously wrong, as the image is only 128x128 pixels.

Let's just go top down and mentally sanity check these values...

center_ndc: [-0.8047378, 0.8047378, 0.99766433]This seems correct - it's the top left, near the back. That's where we put the top-left yellow cube so that tracks.screen space bounds center: [0.0976311, -0.9023689, 0.99766433]This doesn't make sense. We're looking to putcenter_ndcinto screen space, which has an x and y range of [0, 1] and the origin at the top left.I think it's this:

(center_ndc.y + 1.0) * -0.5Instead I think we should do

1.0 - (center_ndc.y + 1.0) * 0.5...

After that change we get this output:

gid: 0

renderlet: Id(1054)

renderlet is inside frustum

center_ndc: [-0.8047378, 0.8047378, 0.99766433]

screen space bounds center: [0.0976311, 0.0976311, 0.99766433]

screen space bounds radius: 1.0756001

screen max dimension: 128

renderlet size in pixels: 275.35364

selected mip level: 8

mip (x, y): (0, 0)

thread 'cull::cpu::test::occlusion_culling_debugging' panicked at /Users/schell/.cargo/registry/src/index.crates.io-6f17d22bba15001f/crabslab-0.6.1/src/lib.rs:38:6:

index out of bounds: the len is 21863 but the index is 4294967295

It still panic'd, but the screen space center of the top-left yellow cube looks correct.

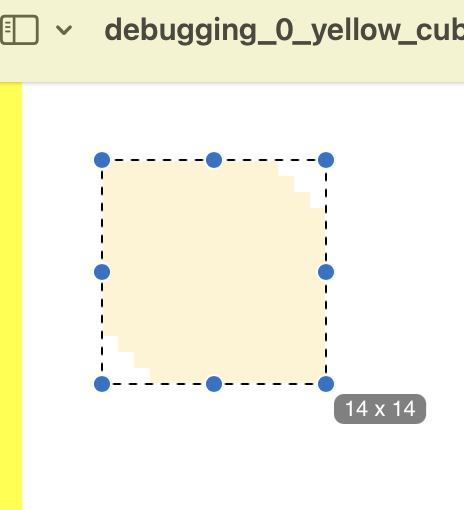

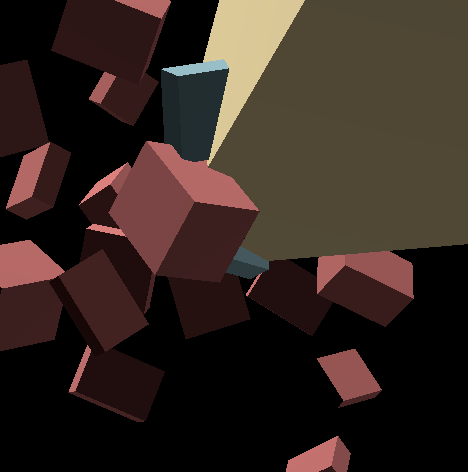

But the radius seems wrong. The cube is definitely more than 2 pixels in width. Let's open the frame in preview (macOS):

Yeah, 14px.

So I see what's going on here. I'm not correctly projecting the sphere onto the "screen plane".

...

After a good while of poking around I came up with a function on BoundingSphere

to project into pixel-space:

pub fn project_onto_viewport(&self, viewproj: Mat4, viewport: Vec2) -> (Vec2, Vec2) { fn ndc_to_pixel(viewport: Vec2, ndc: Vec3) -> Vec2 { let screen = Vec3::new((ndc.x + 1.0) * 0.5, 1.0 - (ndc.y + 1.0) * 0.5, ndc.z); (screen * viewport.extend(1.0)).xy() } // Find the center and radius of the bounding sphere in pixel space, where // (0, 0) is the top-left of the screen and (w, h) is is the bottom-left. let center_clip = viewproj * self.center.extend(1.0); let center_pixels = ndc_to_pixel(viewport, center_clip.xyz() / center_clip.w); let radius_pixels = Vec2::new( (self.radius / center_clip.w) * viewport.x, (self.radius / center_clip.w) * viewport.y, ); (center_pixels - radius_pixels, center_pixels + radius_pixels) }

And for our top-left yellow cube, that gives us:

sphere_aabb: (

Vec2(

5.106697,

5.106697,

),

Vec2(

19.886864,

19.886864,

),

)...which is correct!

So now we've got the correct projection 👍.

HZB cull shader debugging on the CPU, projecting the bounds as an AABB+depth🔗

All that leaves for us to figure out - is the depth of the "front" of the bounding sphere.

In this shader we already have the camera, which contains the frustum.

We can use the frustum to determine the normals "into camera" and "out

from camera" - they're simply the first three components (xyz) of the

frustum planes. We can use those to figure out the locations of the "front"

and "back" of the sphere in our sphere projection function.

...

So, after adding that into the projection function, said function returns an AABB, where xy components are in pixels and z is in NDC (depth).

Now I think things are good-to-go! We get this output from our shader:

gid: 0

renderlet: Id(1054)

renderlet is inside frustum

znear: [0, 0, -1, 9.949975]

zfar: [-0, -0, 1, 90.00072]

sphere_aabb: Aabb {

min: Vec3(

5.106697,

5.106697,

0.99745977,

),

max: Vec3(

19.886864,

19.886864,

0.9978464,

),

}

screen max dimension: 128

renderlet size in pixels: 20.902311

selected mip level: 4 8x8

center: [12.49678, 12.49678]

mip (x, y): (0, 0)

depth_in_hzb: 1

depth_of_sphere: 0.99745977

I've printed out the znear and zfar of the camera's frustum just to be certain.

Let's run this for the rest of the draw calls...

[2024-10-19T19:45:34Z INFO renderling::cull::cpu::test] name: yellow_cube_top_left

gid: 0

renderlet: Id(1054)

renderlet is inside frustum

znear: [0, 0, -1, 9.949975]

zfar: [-0, -0, 1, 90.00072]

sphere_aabb: Aabb {

min: Vec3(

5.106697,

5.106697,

0.99745977,

),

max: Vec3(

19.886864,

19.886864,

0.9978464,

),

}

screen max dimension: 128

renderlet size in pixels: 20.902311

selected mip level: 4 8x8

center: [12.49678, 12.49678]

mip (x, y): (0, 0)

depth_in_hzb: 1

depth_of_sphere: 0.99745977

[2024-10-19T19:45:34Z INFO renderling::cull::cpu::test]

[2024-10-19T19:45:34Z INFO renderling::cull::cpu::test] name: yellow_cube_top_right

gid: 1

renderlet: Id(2018)

renderlet is inside frustum

znear: [0, 0, -1, 9.949975]

zfar: [-0, -0, 1, 90.00072]

sphere_aabb: Aabb {

min: Vec3(

108.11314,

5.106697,

0.99745977,

),

max: Vec3(

122.8933,

19.886864,

0.9978464,

),

}

screen max dimension: 128

renderlet size in pixels: 20.902311

selected mip level: 4 8x8

center: [115.50322, 12.49678]

mip (x, y): (7, 0)

depth_in_hzb: 1

depth_of_sphere: 0.99745977

[2024-10-19T19:45:34Z INFO renderling::cull::cpu::test]

[2024-10-19T19:45:34Z INFO renderling::cull::cpu::test] name: yellow_cube_bottom_right

gid: 2

renderlet: Id(2982)

renderlet is inside frustum

znear: [0, 0, -1, 9.949975]